
SkyHive is an end-to-end reskilling platform that automates skills assessment, identifies future talent needs, and fills skill gaps through targeted learning recommendations and job opportunities. We work with leaders in the space including Accenture and Workday, and have been recognized as a cool vendor in human capital management by Gartner.

We’ve already built a Labor Market Intelligence database that stores:

- Profiles of 800 million (anonymized) workers and 40 million companies

- 1.6 billion job descriptions from 150 countries

- 3 trillion unique skill combinations required for current and future jobs

Our database ingests 16 TB of data every day, from job postings scraped by our web crawlers to paid streaming data feeds. And we’ve done a lot of complex analytics and machine learning to glean insights into global job trends today and tomorrow.

Thanks to our ahead-of-the-curve technology, good word-of-mouth and partners like Accenture, we’re growing fast, adding 2-4 corporate customers every day.

Driven by Data and Analytics

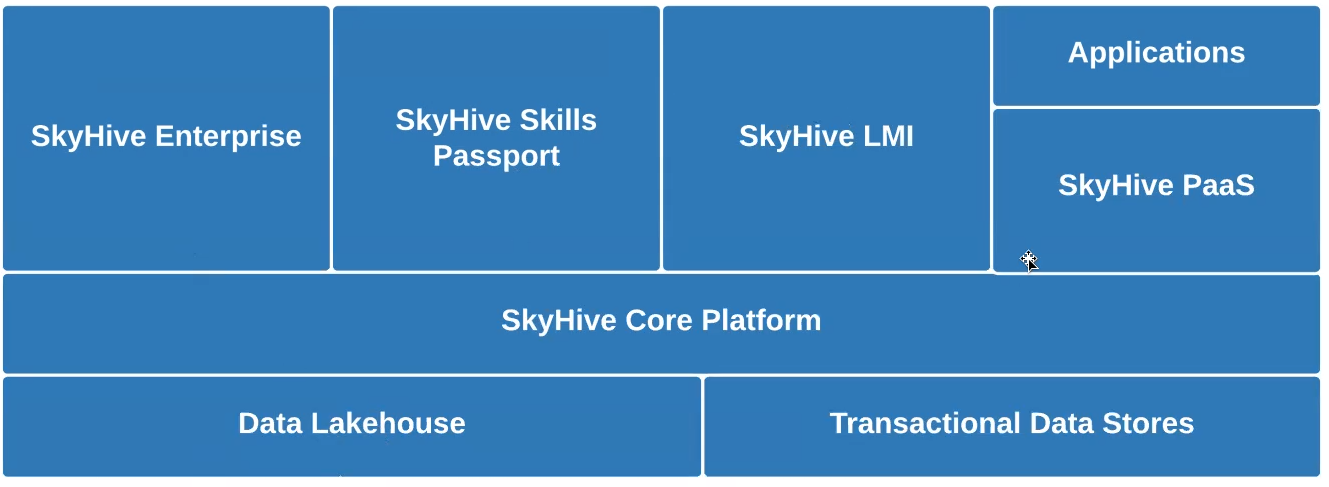

Like Uber, Airbnb, Netflix, and others, we’re disrupting an industry (in this case, the global HR/HCM industry) with data-driven services that include:

- SkyHive Skill Passport: a web-based service educating workers on the job skills they need to build their careers, and resources on how to get them.

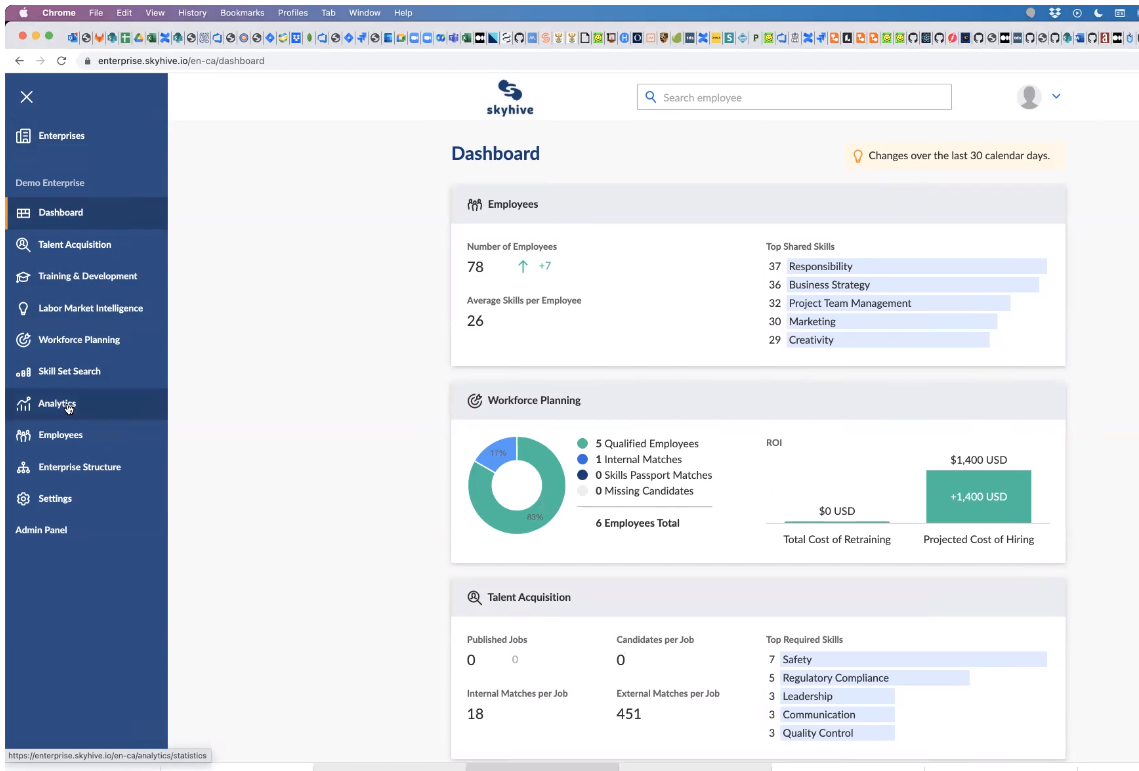

- SkyHive Enterprise: a paid dashboard (below) for executives and HR to analyze and drill into data on a) their employees’ aggregated job skills; b) what skills companies need to succeed in the future; and c) the skills gaps.

- Platform-as-a-Service via APIs: a paid service allowing businesses to tap into deeper insights, such as comparisons with competitors, and recruiting recommendations to fill skills gaps.

Challenges with MongoDB for Analytical Queries

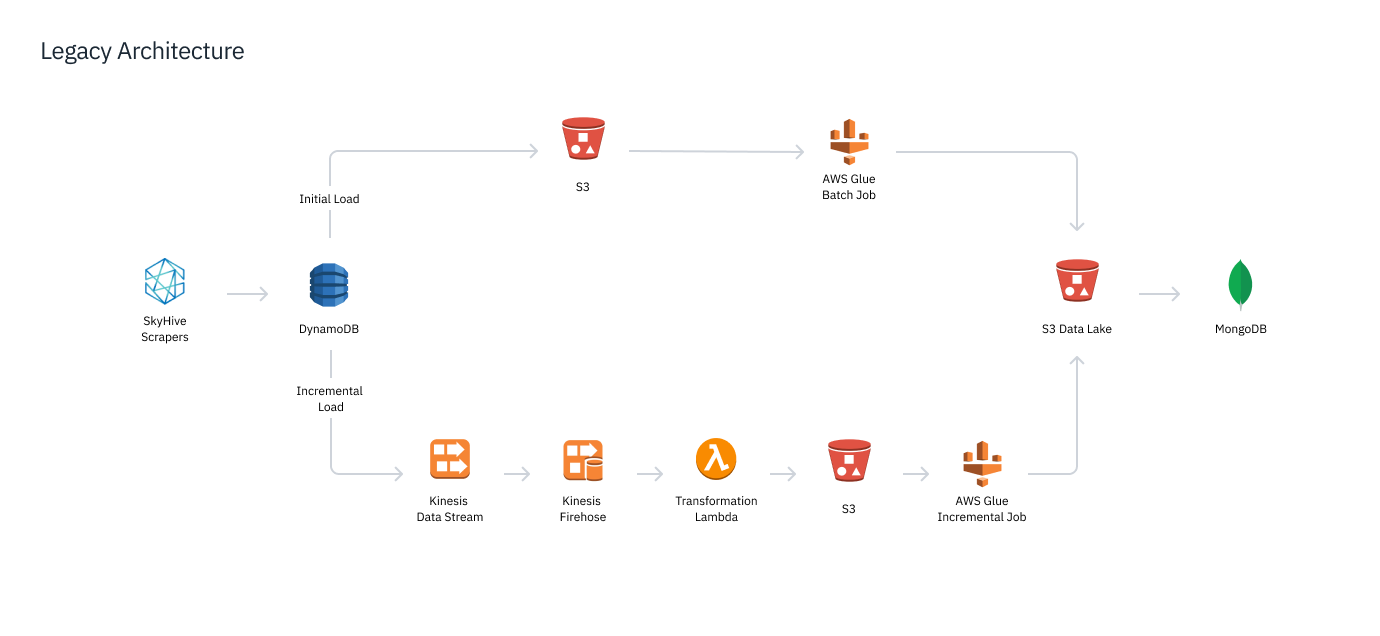

16 TB of raw text data from our web crawlers and other data feeds is dumped daily into our S3 data lake. That data was processed and then loaded into our analytics and serving database, MongoDB.

MongoDB query performance was too slow to support complex analytics involving data across jobs, resumes, courses and different geographies, especially when query patterns weren’t defined ahead of time. This made multidimensional queries and joins slow and costly, making it impossible to provide the interactive performance our users required.

For example, I had one large pharmaceutical customer ask if it would be possible to find all of the data scientists in the world with a clinical trials background and 3+ years of pharmaceutical experience. It would have been an incredibly expensive operation, but of course the customer was looking for immediate results.

When the customer asked if we could expand the search to non-English speaking countries, I had to explain it was beyond the product’s current capabilities, as we had problems normalizing data across different languages with MongoDB.

There were also limitations on payload sizes in MongoDB, as well as other strange hardcoded quirks. For instance, we couldn’t query Great Britain as a country.

All in all, we had significant challenges with query latency and getting our data into MongoDB, and we knew we needed to move to something else.

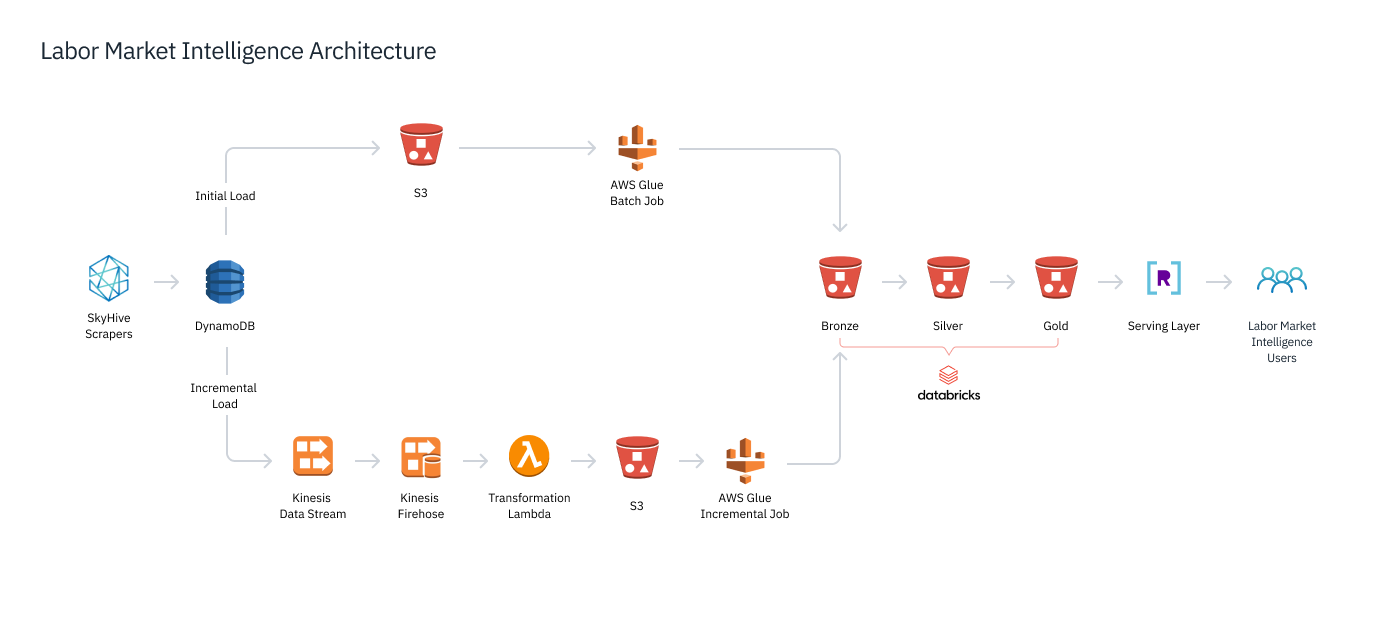

Real-Time Data Stack with Databricks and Rockset

We needed a storage layer capable of large-scale ML processing for terabytes of new data per day. We compared Snowflake and Databricks, choosing the latter because of Databricks’ compatibility with more tooling options and support for open data formats. Using Databricks, we have deployed (below) a lakehouse architecture, storing and processing our data through three progressive Delta Lake stages. Crawled and other raw data lands in our Bronze layer and subsequently goes through Spark ETL and ML pipelines that refine and enrich the data for the Silver layer. We then create coarse-grained aggregations across multiple dimensions, such as geographical location, job function, and time, that are stored in the Gold layer.
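To make the Silver-to-Gold step concrete, here is a minimal sketch of a coarse-grained aggregation. It uses plain Python in place of Spark so it runs anywhere, and the `SilverPosting` schema, its field names, and `build_gold_counts` are assumptions made for illustration, not our production pipeline.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class SilverPosting(NamedTuple):
    """One cleaned job posting (hypothetical Silver-layer schema)."""
    country: str
    job_function: str
    posted_month: str  # e.g. "2022-03"
    skill: str


def build_gold_counts(postings: Iterable[SilverPosting]) -> Counter:
    """Count postings per (country, job_function, posted_month, skill)."""
    gold = Counter()
    for p in postings:
        gold[(p.country, p.job_function, p.posted_month, p.skill)] += 1
    return gold


# Tiny illustrative sample, not real data.
silver = [
    SilverPosting("US", "engineering", "2022-03", "python"),
    SilverPosting("US", "engineering", "2022-03", "python"),
    SilverPosting("US", "engineering", "2022-03", "sql"),
    SilverPosting("CA", "analytics", "2022-04", "sql"),
]
gold = build_gold_counts(silver)
```

Each key in `gold` is one cell of the aggregate; keeping several dimensions in the key is what leaves room for finer-grained slicing later.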

We have SLAs on query latency in the low hundreds of milliseconds, even as users make complex, multi-faceted queries. Spark was not built for that; it treats such queries as data jobs that can take tens of seconds. We needed a real-time analytics engine, one that creates an uber-index of our data in order to deliver multidimensional analytics in a heartbeat.

We chose Rockset to be our new user-facing serving database. Rockset continuously synchronizes with the Gold layer data and instantly builds an index of that data. Taking the coarse-grained aggregations in the Gold layer, Rockset queries and joins across multiple dimensions and performs the finer-grained aggregations required to serve user queries. That enables us to serve: 1) pre-defined Query Lambdas sending regular data feeds to customers; 2) ad hoc free-text searches such as “What are all of the remote jobs in the United States?”
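The idea of answering a user query by filtering and re-aggregating pre-computed Gold rows can be sketched as follows. This is a plain-Python stand-in rather than Rockset’s engine or API; the row shape, the `postings` field, and the `rollup` helper are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Sequence, Tuple


def rollup(rows: Iterable[dict], group_by: Sequence[str], **filters) -> Dict[Tuple, int]:
    """Filter pre-aggregated rows, then sum their counts per group_by key."""
    totals = defaultdict(int)
    for row in rows:
        # Keep only rows that match every requested filter value.
        if all(row[field] == value for field, value in filters.items()):
            key = tuple(row[field] for field in group_by)
            totals[key] += row["postings"]
    return dict(totals)


# Hypothetical Gold-layer rows: one row per coarse-grained aggregate cell.
gold_rows = [
    {"country": "US", "job_function": "engineering", "remote": True, "postings": 40},
    {"country": "US", "job_function": "engineering", "remote": False, "postings": 25},
    {"country": "US", "job_function": "sales", "remote": True, "postings": 10},
    {"country": "CA", "job_function": "engineering", "remote": True, "postings": 7},
    {"country": "US", "job_function": "engineering", "remote": True, "postings": 5},
]

# "Remote jobs in the United States", broken down by job function.
remote_us = rollup(gold_rows, group_by=["job_function"], country="US", remote=True)
```

Because the filters and grouping are chosen at query time, the same Gold rows can serve many different questions without a new batch job for each one.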

Sub-Second Analytics and Faster Iterations

After several months of development and testing, we switched our Labor Market Intelligence database from MongoDB to Rockset and Databricks. With Databricks, we’ve improved our ability to handle huge datasets as well as efficiently run our ML models and other non-time-sensitive processing. Meanwhile, Rockset enables us to support complex queries on large-scale data and return answers to users in milliseconds with little compute cost.

For instance, our customers can search for the top 20 skills in any country in the world and get results back in near real time. We can also support a much higher volume of customer queries, as Rockset alone can handle millions of queries a day, regardless of query complexity, the number of concurrent queries, or sudden scale-ups elsewhere in the system (such as from bursty incoming data feeds).
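As a rough illustration of what a “top skills in a country” lookup computes, the sketch below ranks skills from pre-aggregated counts. The `(country, skill, postings)` row shape and the `top_skills` function are assumptions for this example; in production this kind of query would run in the serving database, not in application code.

```python
import heapq
from collections import Counter
from typing import Iterable, List, Tuple


def top_skills(rows: Iterable[Tuple[str, str, int]], country: str, n: int = 20) -> List[Tuple[str, int]]:
    """Return the n most in-demand skills for one country.

    rows are (country, skill, postings) tuples, a hypothetical pre-aggregated shape.
    """
    totals = Counter()
    for row_country, skill, postings in rows:
        if row_country == country:
            totals[skill] += postings
    # Order by count descending, then skill name, so ties are deterministic.
    return heapq.nsmallest(n, totals.items(), key=lambda kv: (-kv[1], kv[0]))


# Illustrative sample rows, not real data.
rows = [
    ("US", "python", 120),
    ("US", "sql", 95),
    ("US", "python", 30),
    ("CA", "sql", 40),
    ("US", "excel", 95),
]
print(top_skills(rows, "US", n=2))
```

The explicit tie-break on skill name keeps the ranking stable between calls, which matters when results feed a dashboard.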

We are now easily hitting all of our customer SLAs, including our sub-300 millisecond query time guarantees. We can provide the real-time answers that our customers need and our competitors cannot match. And with Rockset’s SQL-to-REST API support, presenting query results to applications is easy.

Rockset also speeds up development time, boosting both our internal operations and external sales. Previously, it took us three to nine months to build a proof of concept for customers. With Rockset features such as SQL-to-REST using Query Lambdas, we can now deploy dashboards customized to the prospective customer hours after a sales demo.

We call this “product day zero.” We don’t have to sell to our customers anymore; we just ask them to go and try us out. They’ll discover they can interact with our data with no noticeable delay. Rockset’s low-ops, serverless cloud delivery also makes it easy for our developers to deploy new services to new users and customer prospects.

We’re planning to further streamline our data architecture (above) while expanding our use of Rockset into a couple of other areas:

- geospatial queries, so that users can search by zooming in and out of a map;

- serving data to our ML models.

These projects would likely take place over the next year. With Databricks and Rockset, we’ve already transformed and built out a great stack. But there is still much more room to grow.
