For the final part of our Best Practices and Guidance for Cloud Engineers to Deploy Databricks on AWS series, we’ll cover an important topic: automation. In this blog post, we’ll break down the three endpoints used in a deployment, go through examples in common infrastructure as code (IaC) tools like CloudFormation and Terraform, and wrap up with some general best practices for automation.

However, if you’re just now joining us, we recommend that you read through part one, where we outline the Databricks on AWS architecture and its benefits for a cloud engineer, as well as part two, where we walk through a deployment on AWS with best practices and recommendations.

The Backbone of Cloud Automation:

As cloud engineers, you’ll be well aware that the backbone of cloud automation is application programming interfaces (APIs) used to interact with various cloud services. In the modern cloud engineering stack, an organization may use hundreds of different endpoints for deploying and managing various external services, internal tools, and more. This common pattern of automating with API endpoints is no different for Databricks on AWS deployments.

Types of API Endpoints for Databricks on AWS Deployments:

A Databricks on AWS deployment can be summed up into three types of API endpoints:

- AWS: As discussed in part two of this blog series, several resources can be created with an AWS endpoint. These include S3 buckets, IAM roles, and networking resources like VPCs, subnets, and security groups.

- Databricks – Account: At the highest level of the Databricks organization hierarchy is the Databricks account. Using the account endpoint, we can create account-level objects such as configurations encapsulating cloud resources, workspaces, identities, logs, etc.

- Databricks – Workspace: The last type of endpoint used is the workspace endpoint. Once the workspace is created, you can use that host for everything related to that workspace. This includes creating, maintaining, and deleting clusters, secrets, repos, notebooks, jobs, etc.
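As a rough illustration of how the three endpoint types differ, the sketch below builds one example URL for each. The host patterns follow the commonly documented forms, while the region, account ID, and workspace host are hypothetical placeholders. Nothing is sent over the network.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoints:
    """Base URLs for the three endpoint types (all values are placeholders)."""

    aws_region: str
    databricks_account_id: str
    workspace_host: str

    def aws_service(self, service: str) -> str:
        # Regional AWS pattern; some services (IAM, for example) use a
        # global endpoint instead.
        return f"https://{service}.{self.aws_region}.amazonaws.com"

    def account_api(self, path: str) -> str:
        # Account level: credential, storage, and network configurations,
        # plus the workspaces themselves.
        base = "https://accounts.cloud.databricks.com/api/2.0/accounts"
        return f"{base}/{self.databricks_account_id}/{path.lstrip('/')}"

    def workspace_api(self, path: str) -> str:
        # Workspace level: clusters, jobs, secrets, repos, and so on.
        return f"https://{self.workspace_host}/api/2.0/{path.lstrip('/')}"


# Hypothetical values, for illustration only.
endpoints = Endpoints(
    aws_region="us-east-1",
    databricks_account_id="00000000-0000-0000-0000-000000000000",
    workspace_host="example.cloud.databricks.com",
)
print(endpoints.aws_service("ec2"))
print(endpoints.account_api("workspaces"))
print(endpoints.workspace_api("clusters/list"))
```

Keeping the three base URLs in one place makes it easier to see which layer a given call belongs to.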

Now that we’ve covered each type of endpoint in a Databricks on AWS deployment, let’s step through an example deployment process and call out each endpoint that you will interact with.

Deployment Process:

In a typical deployment process, you’ll interact with each endpoint listed above. I like to sort this from top to bottom.

- The first endpoint will be AWS. From the AWS endpoints you’ll create the backbone infrastructure of the Databricks workspace. This includes the workspace root bucket, the cross-account IAM role, and networking resources like a VPC, subnets, and a security group.

- Once those resources are created, we’ll move down a layer to the Databricks account API, registering the AWS resources created as a series of configurations: credential, storage, and network. Once these objects are created, we use those configurations to create the workspace.

- Following the workspace creation, we’ll use that endpoint to perform any workspace activities. This includes common activities like creating clusters and warehouses, assigning permissions, and more.
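The account-level step in the middle of this process can be sketched as an ordered list of requests. This is a minimal sketch, not a definitive implementation: the paths and payload field names reflect our understanding of the Account API and should be verified against the current API reference. The account ID, resource names, and angle-bracket ID values are hypothetical placeholders, and no request is sent.

```python
ACCOUNT_ID = "<account-id>"  # hypothetical placeholder


def account_plan(role_arn, bucket, vpc_id, subnet_ids, security_group_ids, region):
    """Return the account-level requests, in order, as (method, path, payload)."""
    base = f"/api/2.0/accounts/{ACCOUNT_ID}"
    return [
        # 1. Register the cross-account IAM role as a credential configuration.
        ("POST", f"{base}/credentials", {
            "credentials_name": "demo-credentials",
            "aws_credentials": {"sts_role": {"role_arn": role_arn}},
        }),
        # 2. Register the root bucket as a storage configuration.
        ("POST", f"{base}/storage-configurations", {
            "storage_configuration_name": "demo-storage",
            "root_bucket_info": {"bucket_name": bucket},
        }),
        # 3. Register the VPC, subnets, and security groups as a network configuration.
        ("POST", f"{base}/networks", {
            "network_name": "demo-network",
            "vpc_id": vpc_id,
            "subnet_ids": list(subnet_ids),
            "security_group_ids": list(security_group_ids),
        }),
        # 4. Create the workspace from the three configurations. In a real run
        #    the IDs below come from the responses to steps 1 through 3.
        ("POST", f"{base}/workspaces", {
            "workspace_name": "demo-workspace",
            "aws_region": region,
            "credentials_id": "<credentials-id>",
            "storage_configuration_id": "<storage-configuration-id>",
            "network_id": "<network-id>",
        }),
    ]


plan = account_plan(
    role_arn="arn:aws:iam::123456789012:role/demo-cross-account",
    bucket="demo-root-bucket",
    vpc_id="vpc-0123456789abcdef0",
    subnet_ids=["subnet-aaa", "subnet-bbb"],
    security_group_ids=["sg-ccc"],
    region="us-east-1",
)
for method, path, _payload in plan:
    print(method, path)
```

The workspace request comes last because it needs the IDs returned by the three configuration calls.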

And that’s it! A standard deployment process can be broken out into three distinct endpoints. However, we don’t want to use PUT and GET calls out of the box, so let’s talk about some of the common infrastructure as code (IaC) tools that customers use for deployments.

Commonly used IaC Tools:

As mentioned above, creating a Databricks workspace on AWS simply calls various endpoints. This means that while we’re discussing two tools in this blog post, you are not limited to these.

For example, while we won’t talk about AWS CDK in this blog post, the same concepts would apply in a Databricks on AWS deployment.

If you have any questions about whether your favorite IaC tool has pre-built resources, please contact your Databricks representative or post on our community forum.

HashiCorp Terraform:

Released in 2014, Terraform is currently one of the most popular IaC tools. Written in Go, Terraform offers a simple, flexible way to deploy, destroy, and manage infrastructure across your cloud environments.

With over 13.2 million installs, the Databricks provider allows you to seamlessly integrate with your existing Terraform infrastructure. To get you started, Databricks has released a series of example modules that can be used.

These include:

- Deploy Multiple AWS Databricks Workspaces with Customer-Managed Keys, VPC, PrivateLink, and IP Access Lists – Code

- Provisioning AWS Databricks with a Hub & Spoke Firewall for Data Exfiltration Protection – Code

- Deploy Databricks with Unity Catalog – Code: Part 1, Part 2

See a complete list of examples created by Databricks here.

We’re frequently asked about best practices for Terraform code structure. In most cases, Terraform’s best practices will align with what you use for your other resources. You can start with a simple main.tf file, then separate it logically into various environments, and finally start incorporating various off-the-shelf modules used across each environment.

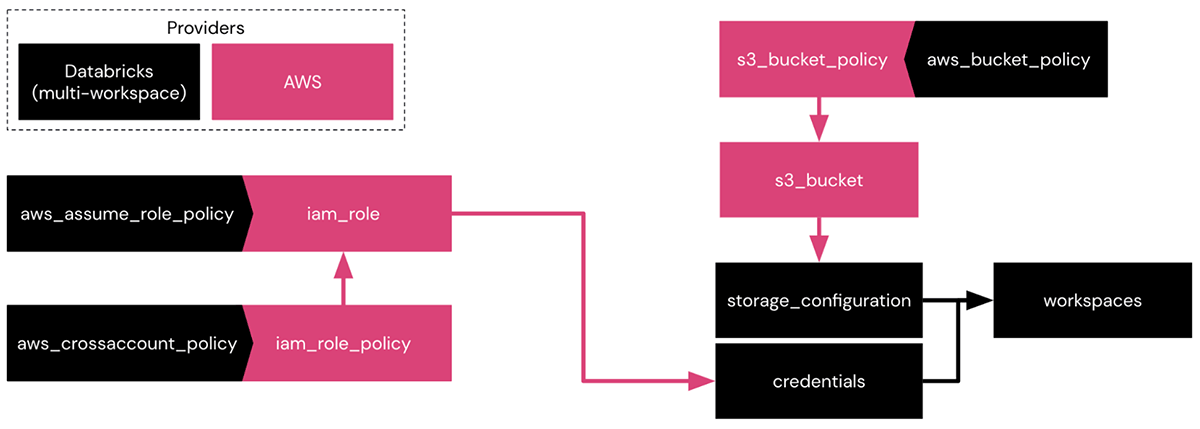

In the above image, we can see the interaction between the various resources found in both the Databricks and AWS providers when creating a workspace with a Databricks-managed VPC.

- Using the AWS provider, you’ll create an IAM role, IAM policy, S3 bucket, and S3 bucket policy.

- Using the Databricks provider, you’ll call data sources for the IAM role, IAM policy, and the S3 bucket policy.

- Once those resources are created, you register the bucket and IAM role as a storage and credential configuration for the workspace with the Databricks provider.

This is a simple example of how the two providers interact with each other and how these interactions can grow with the addition of new AWS and Databricks resources.

Last, for existing workspaces that you’d like to manage with Terraform, the Databricks provider has an Experimental Exporter that can be used to generate Terraform code for you.
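To make the ordering between the two providers concrete, the sketch below models the resources as a dependency graph and asks Python’s standard library for a valid creation order. The resource and data source names are real Terraform types from the AWS and Databricks providers, but the edges are our simplified reading of the interaction described above, not an export of an actual Terraform graph.

```python
from graphlib import TopologicalSorter

# Each key depends on every resource in its set (simplified assumption).
DEPENDENCIES = {
    "aws_iam_role": {"data.databricks_aws_assume_role_policy"},
    "aws_iam_policy": {"data.databricks_aws_crossaccount_policy"},
    "aws_s3_bucket": set(),
    "aws_s3_bucket_policy": {"aws_s3_bucket", "data.databricks_aws_bucket_policy"},
    "databricks_mws_credentials": {"aws_iam_role", "aws_iam_policy"},
    "databricks_mws_storage_configurations": {"aws_s3_bucket", "aws_s3_bucket_policy"},
    "databricks_mws_workspaces": {
        "databricks_mws_credentials",
        "databricks_mws_storage_configurations",
    },
}


def creation_order(dependencies):
    """Return one valid order in which every dependency precedes its dependents."""
    return list(TopologicalSorter(dependencies).static_order())


order = creation_order(DEPENDENCIES)
for step, resource in enumerate(order, start=1):
    print(step, resource)
```

Terraform resolves this ordering itself from references between resources, which is why the two providers can be mixed freely in one configuration.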

Databricks Terraform Experimental Exporter:

The Databricks Terraform Experimental Exporter is a valuable tool for extracting various components of a Databricks workspace into Terraform. What sets this tool apart is its ability to provide insights into structuring your Terraform code for the workspace, allowing you to use it as is or make minimal modifications. The exported artifacts can then be used to set up objects or configurations in other Databricks environments quickly.

These workspaces may serve as lower environments for testing or staging purposes, or they can be used to create new workspaces in different regions, enabling high availability and facilitating disaster recovery scenarios.

To demonstrate the functionality of the exporter, we’ve provided an example GitHub Actions workflow YAML file. This workflow uses the experimental exporter to extract specific objects from a workspace and automatically pushes these artifacts to a new branch within a designated GitHub repository each time the workflow is executed. The workflow can be further customized to trigger on pushes to the source repository or scheduled to run at specific intervals using the cron functionality within GitHub Actions.

With the designated GitHub repository, where exports are differentiated by branch, you can choose the specific branch you wish to import into an existing or new Databricks workspace. This allows you to easily select and incorporate the desired configurations and objects from the exported artifacts into your workspace setup. Whether you are setting up a fresh workspace or updating an existing one, this feature simplifies the process by letting you use the specific branch containing the necessary exports, ensuring a smooth and efficient import into Databricks.

This is one example of using the Databricks Terraform Experimental Exporter. If you have additional questions, please reach out to your Databricks representative.

Summary: Terraform is a great choice for deployment if you are familiar with it, are already using it with pre-existing pipelines, are looking to make your deployment process more robust, or are managing a multi-cloud set-up.

AWS CloudFormation:

First announced in 2011, AWS CloudFormation is a way to manage your AWS resources as if they were cooking recipes.

Databricks and AWS worked together to publish our AWS Quick Start leveraging CloudFormation. In this open source code, AWS resources are created using native functions, then a Lambda function executes various API calls to the Databricks account and workspace endpoints.

For customers using CloudFormation, we recommend using the open source code from the Quick Start as a baseline and customizing it according to your team’s specific requirements.

Summary: For teams with little DevOps experience, CloudFormation is a great GUI-based choice to get Databricks workspaces quickly spun up given a set of parameters.
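The Lambda pattern described above can be sketched as a CloudFormation custom-resource handler. This is not the Quick Start’s actual code. It is a minimal sketch that only decides which account API call a given stack event would map to, without sending anything. The `RequestType`, `ResourceProperties`, `PhysicalResourceId`, and `ResponseURL` event fields are part of the custom-resource contract. The `AccountId` and `WorkspaceName` property names are hypothetical.

```python
def handler(event, context):
    """Map a CloudFormation custom-resource event to one account API call."""
    request_type = event["RequestType"]  # "Create", "Update", or "Delete"
    props = event.get("ResourceProperties", {})
    account_id = props.get("AccountId", "<account-id>")  # hypothetical property
    base = f"https://accounts.cloud.databricks.com/api/2.0/accounts/{account_id}"
    body = {"workspace_name": props.get("WorkspaceName")}  # hypothetical property

    if request_type == "Create":
        call = {"method": "POST", "url": f"{base}/workspaces", "body": body}
    else:
        # Update and Delete both target the workspace created earlier, whose
        # ID is assumed to have been stored as the physical resource ID.
        workspace_id = event.get("PhysicalResourceId")
        url = f"{base}/workspaces/{workspace_id}"
        if request_type == "Delete":
            call = {"method": "DELETE", "url": url, "body": None}
        else:
            call = {"method": "PATCH", "url": url, "body": body}

    # A real handler would send `call`, then report SUCCESS or FAILED to the
    # pre-signed URL in event["ResponseURL"] so the stack can proceed.
    return call


create_event = {
    "RequestType": "Create",
    "ResourceProperties": {"AccountId": "abc", "WorkspaceName": "demo"},
}
print(handler(create_event, None))
```

Because CloudFormation drives the handler through create, update, and delete events, the stack lifecycle and the workspace lifecycle stay in step.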

Best Practices:

To wrap up this blog, let’s talk about best practices for using IaC, regardless of the tool you’re using.

- Iterate and Iterate: As the old saying goes, “don’t let perfect be the enemy of good”. The process of deploying and refining code from proof of concept to production will take time, and that’s entirely fine! This applies even if you deploy your first workspace through the console; the most important part is just getting started.

- Modules not Monoliths: As you continue down the path of IaC, it’s recommended that you break out your various resources into individual modules. For example, if you know that you’ll use the same cluster configuration in three different environments with full parity, create a module for it and call it from each new environment. Creating and maintaining multiple identical resources by hand can become burdensome.

- Scale IaC Usage in Higher Environments: IaC is not always uniformly used across development, QA, and production environments. You may have common modules used everywhere, like creating a shared cluster, but you may allow your development users to create manual jobs while in production they’re fully automated. A common trend is to allow users to work freely within development, then, as their work becomes production-ready, use an IaC tool to package it up and push it to higher environments like QA and production. This keeps a level of standardization, but gives your users the freedom to explore the platform.

- Proper Provider Authentication: As you adopt IaC for your Databricks on AWS deployments, you should always use service principals for the account and workspace authentication. This allows you to avoid hard-coded user credentials and manage service principals per environment.

- Centralized Version Control: As mentioned before, integrating IaC is an iterative process. This applies to code maintenance and centralization as well. Initially, you may run your code from your local machine, but as you continue to develop, it’s important to move this code into a central repository such as GitHub, GitLab, BitBucket, etc. These repositories and backend Terraform configurations can allow your entire team to update your Databricks workspaces.
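Two of the practices above, modules over monoliths and service principal authentication, can be sketched in a few lines. This is a minimal sketch under stated assumptions: the cluster fields follow the Clusters API as we understand it, the specific values are illustrative, and the `DATABRICKS_<ENV>_CLIENT_ID` and `DATABRICKS_<ENV>_CLIENT_SECRET` variable names are a hypothetical per-environment convention rather than names any tool reads by default.

```python
import os


def shared_cluster(env: str) -> dict:
    """One cluster definition reused by every environment, like an IaC module."""
    return {
        "cluster_name": f"shared-{env}",  # only the name varies per environment
        "spark_version": "13.3.x-scala2.12",  # illustrative value
        "node_type_id": "i3.xlarge",  # illustrative value
        "num_workers": 2,
        "autotermination_minutes": 30,
    }


def service_principal(env: str, environ=os.environ) -> dict:
    """Read one environment's service principal credentials; never hard-code them."""
    prefix = f"DATABRICKS_{env.upper()}"  # hypothetical naming convention
    try:
        return {
            "client_id": environ[f"{prefix}_CLIENT_ID"],
            "client_secret": environ[f"{prefix}_CLIENT_SECRET"],
        }
    except KeyError as missing:
        raise RuntimeError(f"missing credential variable {missing}") from None


# The same definition is called once per environment, giving full parity.
clusters = {env: shared_cluster(env) for env in ("dev", "qa", "prod")}
for env, spec in clusters.items():
    print(env, spec["cluster_name"], spec["node_type_id"])
```

Changing the definition in one place updates every environment, and failing fast on a missing variable keeps a run from falling back to a personal credential.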

In conclusion, automation is crucial to any successful cloud deployment, and Databricks on AWS is no exception. You can ensure a smooth and efficient deployment process by using the three endpoints discussed in this blog post and implementing best practices for automation. So, if you’re a cloud engineer looking to deploy Databricks on AWS, we encourage you to incorporate these tips into your deployment strategy and take advantage of the benefits that this powerful platform has to offer.
