
Posted by Miguel Guevara, Product Manager, Privacy and Data Protection Office

At Google, it’s our responsibility to keep users safe online and to ensure they can enjoy the products and services they love while knowing their personal information is private and secure. We’re able to do more with less data through the development of our privacy-enhancing technologies (PETs), such as differential privacy and federated learning.

Across the global tech industry, we’re excited to see that adoption of PETs is on the rise. The UK’s Information Commissioner’s Office (ICO) recently published guidance on how organizations, including local governments, can start using PETs to help with data minimization and compliance with data protection laws. Consulting firm Gartner predicts that within the next two years, 60% of all large organizations will be deploying PETs in some capacity.

We’re on the cusp of mainstream adoption of PETs, which is why we also believe it’s our responsibility to share new breakthroughs and applications from our longstanding development of and investment in this space. By open sourcing a variety of PETs over the past few years, we’ve made our tools freely available for anyone – developers, researchers, governments, businesses and more – to use in their own work, helping unlock the power of data sets without revealing personal information about users.

As part of this commitment, we open-sourced a first-of-its-kind Fully Homomorphic Encryption (FHE) transpiler two years ago, and we have continued to remove barriers to entry along the way. FHE is a powerful technology that lets you perform computations on encrypted data without being able to access the sensitive or personal information it contains. We’re excited to share our latest developments, born out of collaboration with our developer and research community, to help expand what can be done with FHE.
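
To make “computing on encrypted data” concrete, here is a toy sketch in Python. It is not our transpiler or any production scheme: it assumes a simplified, symmetric variant of the “FHE over the integers” construction by van Dijk, Gentry, Halevi and Vaikuntanathan, with insecure illustrative parameters and no bootstrapping, so it supports only a limited number of operations before noise overwhelms the result. The names `keygen`, `encrypt`, `decrypt`, `h_xor` and `h_and` are hypothetical. Whoever evaluates the half adder sees only very large integers, yet the key holder decrypts the correct sum and carry.

```python
import secrets

# Toy, INSECURE symmetric scheme in the style of "FHE over the integers"
# (van Dijk, Gentry, Halevi, Vaikuntanathan), WITHOUT bootstrapping, so it only
# supports a limited circuit depth. Parameters are illustrative assumptions.


def keygen(bits: int = 1024) -> int:
    """Secret key: a large random odd integer p with its top bit set."""
    return secrets.randbits(bits) | (1 << (bits - 1)) | 1


def encrypt(p: int, bit: int, noise_bits: int = 16, q_bits: int = 2048) -> int:
    """Hide `bit` as bit + 2*r + p*q, with small noise r and large random q."""
    r = secrets.randbits(noise_bits)
    q = secrets.randbits(q_bits)
    return bit + 2 * r + p * q


def decrypt(p: int, c: int) -> int:
    """Reduce mod p (centered) to strip p*q, then take parity to strip 2*r."""
    noisy = c % p
    if noisy > p // 2:
        noisy -= p
    return noisy % 2


def h_xor(c1: int, c2: int) -> int:
    # Adding ciphertexts adds the plaintext bits mod 2 (XOR); noise adds up.
    return c1 + c2


def h_and(c1: int, c2: int) -> int:
    # Multiplying ciphertexts multiplies the plaintext bits (AND); noise roughly squares.
    return c1 * c2


if __name__ == "__main__":
    key = keygen()
    ca, cb = encrypt(key, 1), encrypt(key, 1)
    # Half adder evaluated on ciphertexts: sum = a XOR b, carry = a AND b.
    s, carry = h_xor(ca, cb), h_and(ca, cb)
    print(decrypt(key, s), decrypt(key, carry))  # 0 1
```

XOR and AND are enough, in principle, to build any Boolean circuit; what makes a scheme *fully* homomorphic is a way to refresh the growing noise (bootstrapping), which this sketch deliberately leaves out.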

Furthering the adoption of Fully Homomorphic Encryption

Today, we’re introducing new tools that enable anyone to apply FHE technologies to video files. This advancement is important because applying FHE to video has often been expensive and has incurred long run times, limiting the ability to scale FHE to larger files and new formats.

This release will encourage developers to try out more complex applications with FHE. Historically, FHE has been considered intractable for large-scale applications. Our results processing large video files show it is possible to apply FHE in previously unimaginable domains. Say you’re a developer at a company and want to process a large file (on the order of terabytes; it could be a video or a sequence of characters) for a given task (e.g., a convolution around specific data points to apply a blur filter to a video or to detect object motion). You can now complete this task using FHE.
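
A blur filter shows why such tasks map well onto homomorphic operations: each output pixel is a weighted sum of its neighbours. The sketch below is an assumption-laden illustration, not the tooling announced here. It swaps in the Paillier cryptosystem, which is only *additively* homomorphic (not fully homomorphic), uses tiny insecure primes, and blurs a single row of made-up pixel values with an unnormalized 3-pixel box filter. All names are hypothetical.

```python
import math
import secrets

# Toy Paillier cryptosystem. It is only ADDITIVELY homomorphic (not FHE), and
# these demo primes are far too small to be secure.
P, Q = 10007, 10009
N = P * Q
N2 = N * N
LAM = math.lcm(P - 1, Q - 1)  # Carmichael's lambda(N)
MU = pow(LAM, -1, N)          # valid because the generator is g = N + 1


def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N as (1 + m*N) * r^N mod N^2, with random r coprime to N."""
    r = secrets.randbelow(N - 1) + 1
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 1) + 1
    return (1 + m * N) * pow(r, N, N2) % N2


def decrypt(c: int) -> int:
    """Recover m = L(c^LAM mod N^2) * MU mod N, where L(u) = (u - 1) // N."""
    return (pow(c, LAM, N2) - 1) // N * MU % N


def encrypted_box_blur(enc_row: list[int], radius: int = 1) -> list[int]:
    """Sum each pixel's neighbourhood without decrypting anything.

    Multiplying Paillier ciphertexts adds their plaintexts, so the party that
    runs this never sees a pixel value. Dividing each sum by its window size
    is left to the key holder after decryption.
    """
    out = []
    for i in range(len(enc_row)):
        acc = 1  # 1 is a (non-randomized) encryption of 0
        for j in range(max(0, i - radius), min(len(enc_row), i + radius + 1)):
            acc = acc * enc_row[j] % N2
        out.append(acc)
    return out


if __name__ == "__main__":
    row = [10, 200, 30, 40, 250]  # made-up pixel intensities
    sums = [decrypt(c) for c in encrypted_box_blur([encrypt(px) for px in row])]
    print(sums)  # [210, 240, 270, 320, 290]
```

Edge pixels use a smaller window, so the key holder would divide the first and last sums by 2 and the others by 3 to get the blurred intensities.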

To do so, we’re expanding our FHE toolkit in three new ways to make it easier for developers to use FHE for a wider range of applications, such as private machine learning, text analysis, and the aforementioned video processing. As part of our toolkit, we’re releasing new hardware, a software crypto library and an open source compiler toolchain. Our goal is to provide these new tools to researchers and developers to help advance how FHE is used to protect privacy while simultaneously lowering costs.

Expanding our toolkit

We believe that, with more optimization and specialty hardware, FHE will support a wider range of use cases across a myriad of similar private machine learning tasks, like privately analyzing more complex data, such as long videos, or processing text documents. That is why we’re releasing a TensorFlow-to-FHE compiler that will allow any developer to compile their trained TensorFlow machine learning models into FHE versions of those models.

Once a model has been compiled to FHE, developers can use it to run inference on encrypted user data without having access to the content of the user inputs or the inference results. For instance, our toolchain can be used to compile a TensorFlow Lite model to FHE, producing a private inference in 16 seconds for a 3-layer neural network. This is just one way we’re helping researchers analyze large datasets without revealing personal information.
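
The core idea of private inference can be sketched for a single dense layer. In this hypothetical example, which again uses toy Paillier keys as a stand-in for FHE and invented weights, the client encrypts its input features, the server computes y = Wx + b on ciphertexts using plaintext integer weights, and only the client can decrypt the result. The weights are assumed to already be integers (for example, after quantization). Real models also need non-linear activations such as ReLU, which an additive scheme cannot evaluate; that is where a fully homomorphic scheme and a compiler like the one described above come in.

```python
import math
import secrets

# Toy Paillier keys with tiny, INSECURE primes, used only for illustration.
# The client keeps LAM/MU secret; the server only needs the public modulus N.
P, Q = 10007, 10009
N, N2 = P * Q, (P * Q) ** 2
LAM = math.lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)


def encrypt(m: int) -> int:
    """Encrypt with the public key N; negative integers are encoded mod N."""
    r = secrets.randbelow(N - 1) + 1
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 1) + 1
    return (1 + (m % N) * N) * pow(r, N, N2) % N2


def decrypt(c: int) -> int:
    """Client side: decrypt, mapping values above N/2 back to negatives."""
    m = (pow(c, LAM, N2) - 1) // N * MU % N
    return m - N if m > N // 2 else m


def encrypted_linear_layer(enc_x: list[int], weights: list[list[int]], biases: list[int]) -> list[int]:
    """Server side: compute Enc(W x + b) from Enc(x) and plaintext integers.

    Uses Enc(a) * Enc(b) = Enc(a + b) and Enc(a) ** k = Enc(k * a), mod N^2.
    """
    out = []
    for row, b in zip(weights, biases):
        acc = encrypt(b)
        for c, w in zip(enc_x, row):
            acc = acc * pow(c, w % N, N2) % N2  # w % N encodes negative weights
        out.append(acc)
    return out


if __name__ == "__main__":
    x = [3, -1, 2]                  # client's private features (made up)
    W = [[2, 0, -1], [1, 4, 1]]     # server's model weights (made up)
    b = [5, -3]
    enc_y = encrypted_linear_layer([encrypt(v) for v in x], W, b)
    print([decrypt(c) for c in enc_y])  # [9, -2]
```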

In addition, we’re releasing Jaxite, a software library for cryptography that allows developers to run FHE on a variety of hardware accelerators. Jaxite is built on top of JAX, a high-performance, cross-platform machine learning library, which allows Jaxite to run FHE programs on graphics processing units (GPUs) and Tensor Processing Units (TPUs). Google originally developed JAX to accelerate neural network computations, and we have found that it can also be used to speed up FHE computations.
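
Why would a machine learning library help with cryptography? Lattice-based FHE schemes, including the CGGI/TFHE family, spend much of their time multiplying polynomials in the ring `Z_q[X]/(X^n + 1)`, a regular, data-parallel workload much like the tensor arithmetic that GPUs and TPUs accelerate. The plain-Python sketch below (the function name is hypothetical and this is not Jaxite’s API) shows that ring multiplication in its simplest schoolbook form; accelerated libraries would instead use batched, vectorized or NTT-based versions.

```python
def negacyclic_mul(a: list[int], b: list[int], q: int) -> list[int]:
    """Multiply two polynomials in Z_q[X]/(X^n + 1), schoolbook O(n^2).

    Coefficient lists are lowest degree first. Because X^n = -1 in this ring,
    a product term landing at degree i + j >= n wraps around to degree
    i + j - n with its sign flipped.
    """
    n = len(a)
    if len(b) != n:
        raise ValueError("both polynomials must have n coefficients")
    out = [0] * n
    for i in range(n):
        for j in range(n):
            k = i + j
            if k < n:
                out[k] += a[i] * b[j]
            else:
                out[k - n] -= a[i] * b[j]  # wrap-around with negation
    return [c % q for c in out]


if __name__ == "__main__":
    # (1 + 2X) * X^3 = X^3 + 2X^4 = X^3 - 2, and -2 mod 17 = 15.
    print(negacyclic_mul([1, 2, 0, 0], [0, 0, 0, 1], 17))  # [15, 0, 0, 1]
```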

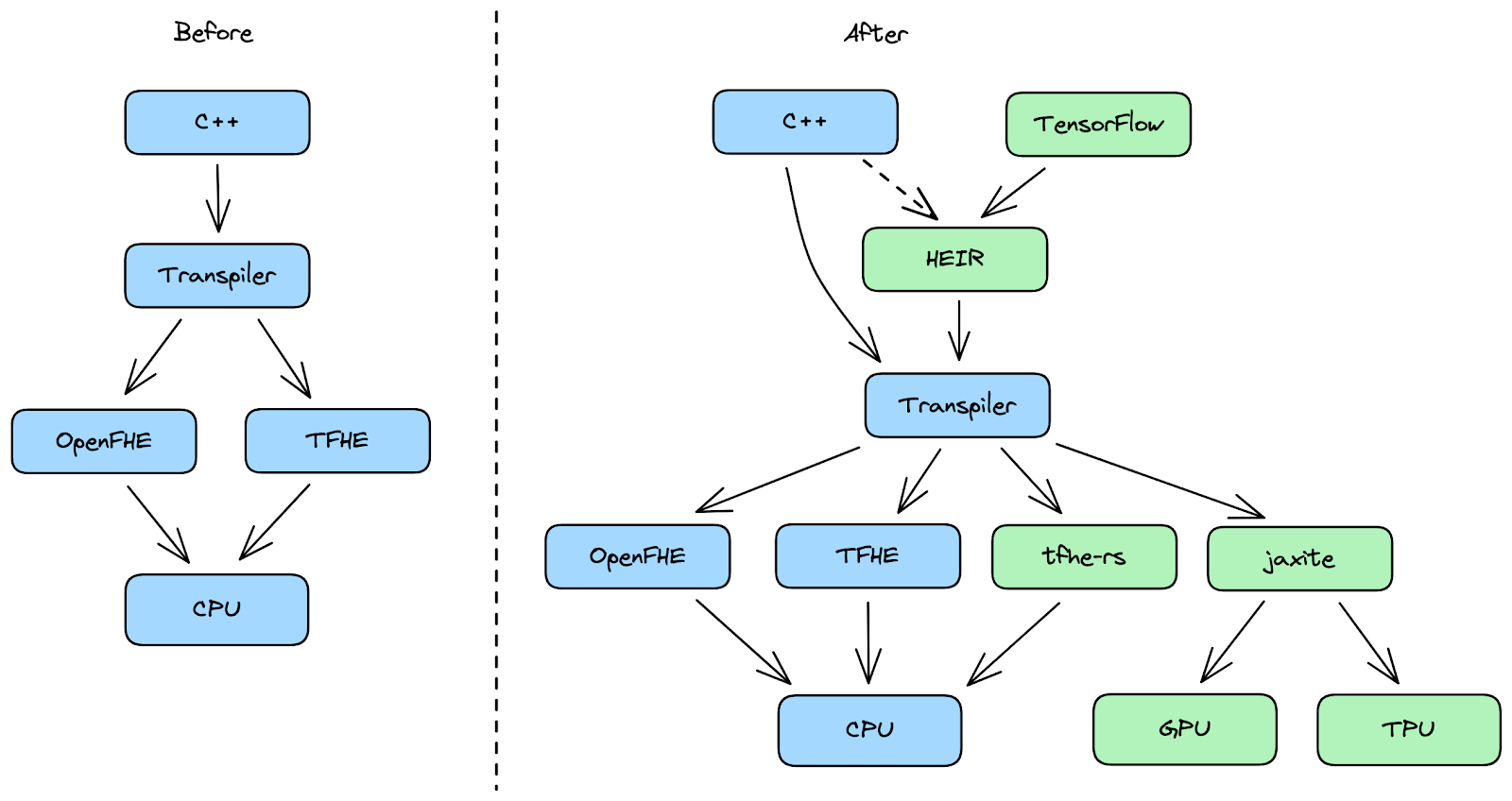

Finally, we’re announcing Homomorphic Encryption Intermediate Representation (HEIR), an open-source compiler toolchain for homomorphic encryption. HEIR is designed to enable interoperability of FHE programs across FHE schemes, compilers, and hardware accelerators. Built on top of MLIR, HEIR aims to lower the barriers to privacy engineering and research. We will be working on HEIR with a variety of industry and academic partners, and we hope it will become a hub for researchers and engineers to try new optimizations, compare benchmarks, and avoid rebuilding boilerplate. We encourage anyone interested in FHE compiler development to join our regular meetings, details of which can be found on the HEIR website.


Building advanced privacy technologies and sharing them with others

Organizations and governments around the world continue to explore how to use PETs to tackle societal challenges and to help developers and researchers securely process and protect user data and privacy. At Google, we’re continuing to improve and apply these novel techniques across many of our products through Protected Computing, a growing toolkit of technologies that transforms how, when and where data is processed to technically ensure its privacy and safety. We’ll also continue to democratize access to the PETs we’ve developed, because we believe that every internet user deserves world-class privacy.
