[ad_1]

Right this moment, we’re blissful to announce the provision of Apache Spark™ 3.5 on Databricks as a part of Databricks Runtime 14.0. We prolong our honest appreciation to the Apache Spark neighborhood for his or her invaluable contributions to the Spark 3.5 launch.

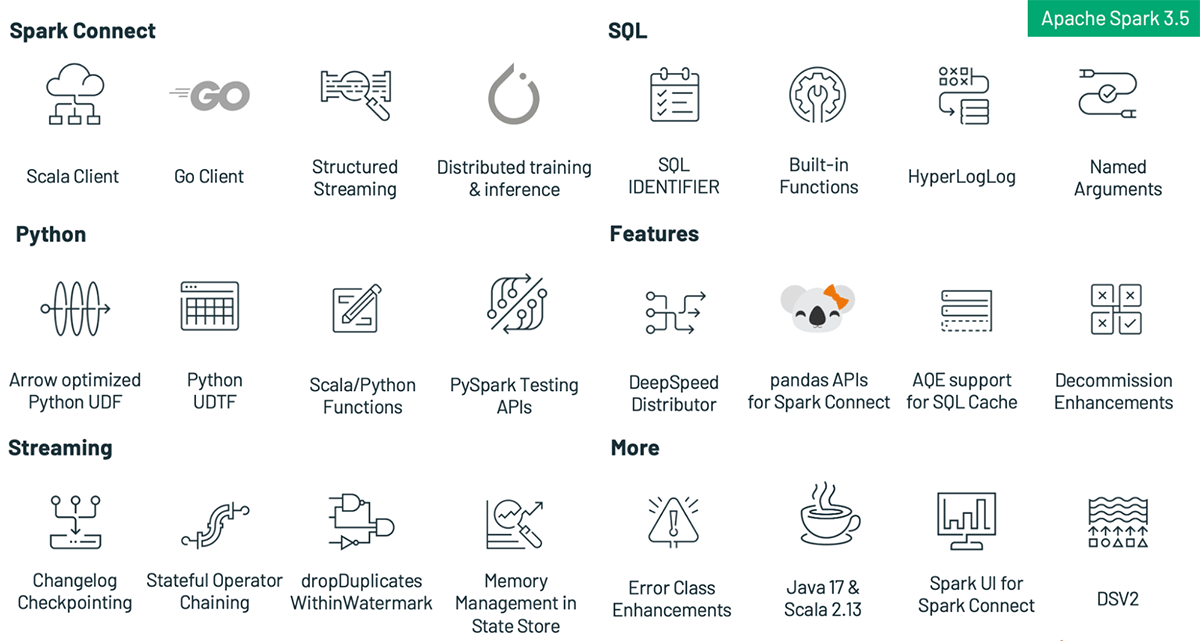

Aligned with our mission to make Spark extra accessible, versatile, and environment friendly than ever earlier than, this replace is full of new options and enhancements, together with:

- Spark Join helps extra situations with basic availability of the Scala shopper, assist for distributed coaching and inference, parity of Pandas API on SPARK, and improved compatibility for structured streaming

- Enhance developer productiveness with new PySpark and SQL performance like built-in SQL features for manipulating arrays, SQL IDENTIFIER clause, expanded SQL perform assist for the Scala, Python and R APIs, named argument assist for SQL perform calls, SQL perform assist for HyperLogLog approximate aggregations, in addition to Arrow-optimized Python UDFs, Python user-defined desk features, PySpark testing API, and Enhanced error lessons in PySpark

- Simplify distributed coaching with DeepSpeed on Spark clusters.

- Efficiency and stability enhancements within the RocksDB state retailer supplier, which cut back trade-offs when in comparison with in-memory state retailer suppliers.

- The English SDK for Apache Spark permits customers to make the most of plain English as their programming language, making information transformations extra accessible and user-friendly.

This weblog submit will stroll you thru the highlights of Apache Spark 3.5, supplying you with a snapshot of its game-changing options and enhancements. For extra details about these thrilling updates, hold a watch out for our upcoming weblog posts. To study concerning the nitty-gritty particulars, we advocate going by way of the excellent Apache Spark 3.5 launch notes, which embrace a full record of main options and resolved JIRA tickets throughout all Spark parts.

Spark Join

For the reason that launch of Spark 3.4.0, there have been roughly 680 commits related to the Spark Join implementation. Be happy to browse the modifications right here.

The important thing deliverable for Spark 3.5 and the Spark Join element is the final availability of the Scala shopper for Spark Join (SPARK-42554). A part of this work was a significant refactoring of the sql submodule to separate it into shopper (sql-api) and server-compatible (sql) modules to cut back the set of dependencies wanted on the shopper for classpath isolation (SPARK-44273).

Till the discharge of Spark 3.5, it was not potential to make use of Apache Spark’s MLlib straight with Spark Join because it depends on the Py4J gateway requiring a co-located shopper utility. In Spark 3.5 we introduce the power to do distributed coaching and inference utilizing Spark Join utilizing a brand new distributed execution framework primarily based on PyTorch (SPARK-42471). At the moment, this module helps logistic regression classifiers, fundamental function transformers, fundamental mannequin evaluators, ML pipelines and, cross validation. This framework seamlessly integrates with the vectorized Python UDF framework in Spark extending it with the aptitude of executing UDFs utilizing barrier execution mode.

Over the course of the final launch, we have now labored on offering parity of the Pandas API on Spark utilizing Spark Join (SPARK-42497), and continued to enhance the compatibility of the Spark Join shopper for structured streaming workloads each in Python and Scala (SPARK-49238).

Lastly, the neighborhood began engaged on a shopper for Spark Join in Golang (SPARK-43351) that’s developed in a separate repository right here: https://github.com/apache/spark-connect-go

PySpark Options

This launch introduces important enhancements to PySpark together with Arrow-optimized Python Consumer Outlined Features (UDFs), Python Consumer Outlined Desk Features (UDTFs), improved error messages, and a brand new testing API that significantly improves usability, efficiency, and testability in PySpark.

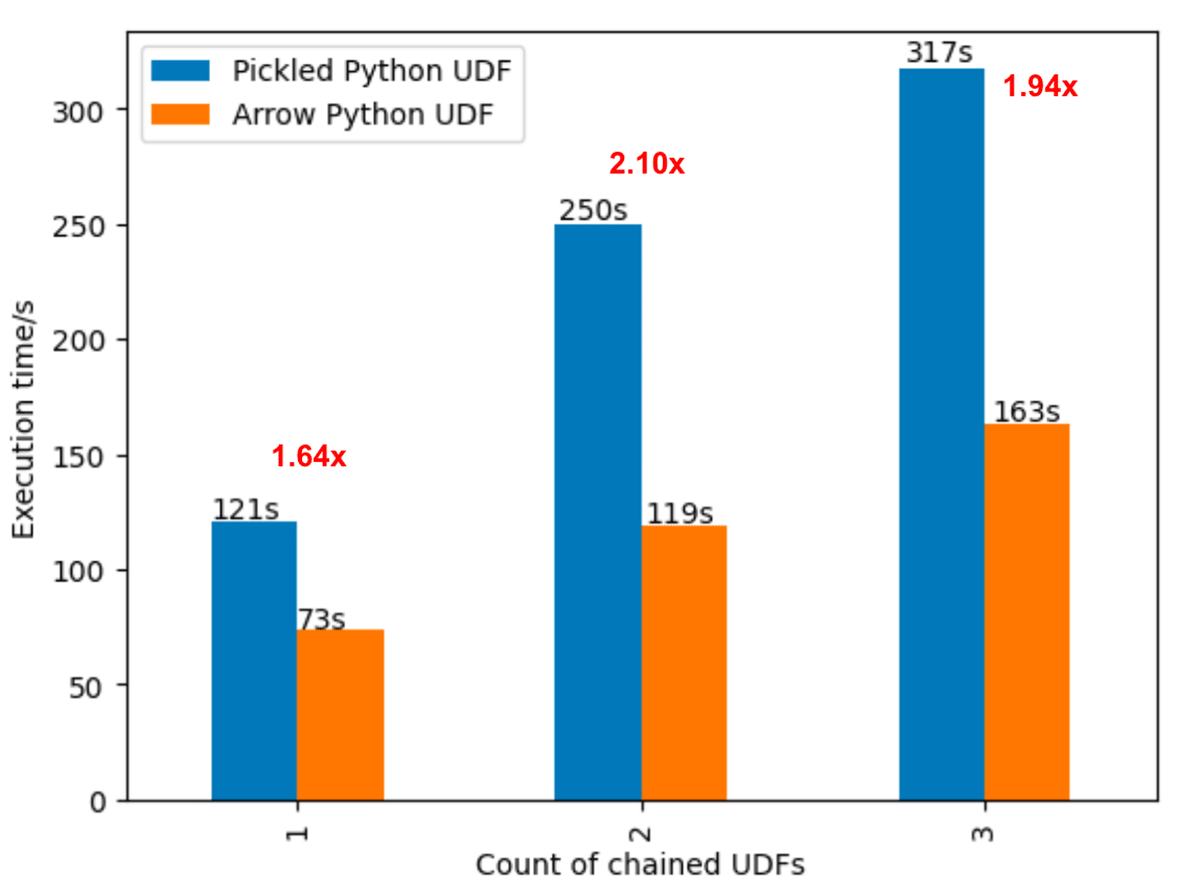

Arrow-optimized Python UDFs (SPARK-40307): Python UDFs will leverage the Arrow columnar format to enhance efficiency when both the spark.sql.execution.pythonUDF.arrow.enabled configuration is about to True, or when useArrow is about to True utilizing the UDF decorator, as proven within the following instance. With this optimization, Python UDFs can carry out as much as 2 occasions sooner than pickled Python UDFs on trendy CPU architectures, due to vectorized I/O.

spark.conf.set("spark.sql.execution.pythonUDF.arrow.enabled", True)

@udf("integer", useArrow=True)

def my_len_udf(s: str) -> int:

return len(s)

Python user-defined desk features (SPARK-43798): A user-defined desk perform (UDTF) is a sort of user-defined perform that returns a complete output desk as a substitute of a single scalar consequence worth. PySpark customers can now write their very own UDTFs integrating their Python logic and use them in PySpark and SQL.

from pyspark.sql.features import udtf

class MyHelloUDTF:

def eval(self, *args):

yield "hi there", "world"

# in PySpark

test_udtf = udtf(TestUDTF, returnType="c1: string, c2: string")

test_udtf().present()

+-----+-----+

| c1| c2|

+-----+-----+

|hi there|world|

+-----+-----+

# in SQL

spark.udtf.register(title="test_udtf", f=test_udtf)

spark.sql("SELECT * FROM test_udtf()").present()

+-----+-----+

| c1| c2|

+-----+-----+

|hi there|world|

+-----+-----+

Testing API (SPARK-44042): Apache Spark™ 3.5 introduces new DataFrame equality check utility features together with detailed, color-coded check error messages, which clearly point out variations between DataFrame schemas and information inside DataFrames. It permits builders to simply add equality exams that produce actionable outcomes for his or her purposes to boost productiveness. The brand new APIs are as follows:

pyspark.testing.assertDataFrameEqualpyspark.testing.assertPandasOnSparkEqualpyspark.testing.assertSchemaEqual

pyspark.errors.exceptions.base.PySparkAssertError: [DIFFERENT_ROWS] Outcomes do not match: ( 33.33333 % )

*** precise ***

Row(title='Amy', languages=['C++', 'Rust'])

! Row(title='Jane', languages=['Scala', 'SQL', 'Java'])

Row(title='John', languages=['Python', 'Java'])

*** anticipated ***

Row(title='Amy', languages=['C++', 'Rust'])

! Row(title='Jane', languages=['Scala', 'Java'])

Row(title='John', languages=['Python', 'Java'])

Enhanced error messages in PySpark (SPARK-42986): Beforehand, the set of exceptions thrown from the Python Spark driver didn’t leverage the error lessons launched in Apache Spark™ 3.3. The entire errors from DataFrame and SQL have been migrated, and comprise the suitable error lessons and codes.

SQL Options

Apache Spark™ 3.5 provides plenty of new SQL options and enhancements, making it simpler for folks to construct queries with SQL/DataFrame APIs in Spark, and for folks emigrate from different well-liked databases to Spark.

New built-in SQL features for manipulating arrays (SPARK-41231): Apache Spark™ 3.5 consists of many new built-in SQL features to assist customers simply manipulate array values. Utilizing built-in features for that is simpler and infrequently extra environment friendly than establishing user-defined features for a similar function.

IDENTIFIER clause (SPARK-41231): The brand new IDENTIFIER clause supplies flexibility for constructing new SQL question templates safely, with out the danger of SQL injection assaults. For instance, utilizing the IDENTIFIER clause with string literals to specify desk/column/perform names could be very highly effective when paired with the question parameter function added within the earlier Spark launch.

spark.sql(

"CREATE TABLE IDENTIFIER(:tbl)(col INT) USING json",

args = {

"tbl": "my_schema.my_tbl"

}

)

spark.sql(

"SELECT IDENTIFIER(:col) FROM IDENTIFIER(:tbl)",

args = {

"col": "col",

"tbl": "my_schema.my_tbl"

}

).present()

Expanded SQL perform assist for the Scala, Python, and R APIs (SPARK-43907): Earlier than Spark 3.5, there have been many SQL features that weren’t accessible within the Scala, Python, or R DataFrame APIs. This introduced difficulties invoking the features inside DataFrames as customers discovered it essential to sort the perform title in string literals with none assist from auto-completion. Spark 3.5 removes this downside by making 150+ SQL features accessible within the DataFrame APIs.

Named argument assist for SQL perform calls (SPARK-44059): Just like Python, Spark’s SQL language now permits customers to invoke features with parameter names previous their values. This matches the specification from the SQL commonplace and leads to clearer and extra strong question language when the perform has many parameters and/or some parameters have default values.

SELECT masks(

'AbCD123-@$#',

lowerChar => 'q',

upperChar => 'Q',

digitChar => 'd')

New SQL perform assist for HyperLogLog approximate aggregations primarily based on Apache Datasketches (SPARK-16484): Apache Spark™ 3.5 consists of new SQL features for counting distinctive values inside teams with precision and effectivity, together with storing the results of intermediate computations to sketch buffers which may be persistent into storage and loaded again later. These implementations use the Apache Datasketches library for consistency with the open-source neighborhood and straightforward integration with different instruments. For instance:

> SELECT hll_sketch_estimate(

hll_sketch_agg(col, 12))

FROM VALUES (50), (60), (60), (60), (75), (100) tab(col);

4

> SELECT hll_sketch_estimate(

hll_sketch_agg(col))

FROM VALUES ('abc'), ('def'), ('abc'), ('ghi'), ('abc') tab(col);

3

DeepSpeed Distributor

On this launch, the DeepspeedTorchDistributor module is added to PySpark to assist customers simplify distributed coaching with DeepSpeed on Spark clusters (SPARK-44264). It’s an extension of the TorchDistributor module that was launched in Apache Spark 3.4™. Beneath the hood, the DeepspeedTorchDistributor initializes the setting and the communication channels required for DeepSpeed. The module helps distributing coaching jobs on each single-node multi-GPU and multi-node GPU clusters. Right here is an instance code snippet of how one can use it:

from pyspark.ml.deepspeed.deepspeed_distributor import DeepspeedTorchDistributor

def practice():

# required boilerplate code

import deepspeed

parser = argparse.ArgumentParser(description="DeepSpeed Coaching")

parser.add_argument('--deepspeed',

'--ds',

motion='store_true',

assist='Allow DeepSpeed')

parser.add_argument('--deepspeed_config',

'--ds_config',

sort=str,

assist='DeepSpeed config file')

args = parser.parse_args()

system = int(os.environ["LOCAL_RANK"])

# outline the mannequin

mannequin = build_model().to(system)

mannequin, *_ = deepspeed.initialize(args=args, mannequin=mannequin,

model_parameters=mannequin.parameters())

dataset = make_dataset()

loader = DataLoader(dataset)

# run coaching

output = run_training(mannequin, loader, learning_rate=1e-3)

return output

deepspeed_distributor = DeepspeedTorchDistributor(numGpus=2, nnodes=2, use_gpu=True, localMode=False, deepspeedConfig={...})

deepspeed_distributor.run(practice)

For extra particulars and instance notebooks, see https://docs.databricks.com/en/machine-learning/train-model/distributed-training/deepspeed.html

Streaming

Apache Spark™ 3.5 introduces quite a lot of enhancements to streaming, together with the completion of assist for a number of stateful operators, and enhancements to the RocksDB state retailer supplier.

Completion of assist for a number of stateful operators (SPARK-42376): In Apache Spark™ 3.4, Spark permits customers to carry out stateful operations (aggregation, deduplication, stream-stream joins, and so forth) a number of occasions in the identical question, together with chained time window aggregations. Stream-stream time interval be a part of adopted by one other stateful operator wasn’t supported in Apache Spark™ 3.4, and Apache Spark™ 3.5 lastly helps this to allow extra complicated workloads e.g. becoming a member of streams of adverts and clicks, and aggregating over time window.

Changelog checkpointing for RocksDB state retailer supplier (SPARK-43421): Apache Spark™ 3.5 introduces a brand new checkpoint mechanism for the RocksDB state retailer supplier named “Changelog Checkpointing”, which persists the changelog (updates) of the state. This reduces the commit latency considerably which additionally reduces finish to finish latency considerably. You possibly can set the config spark.sql.streaming.stateStore.rocksdb.changelogCheckpointing.enabled property to true to allow this function. Observe which you can additionally allow this function with current checkpoints as effectively.

RocksDB state retailer supplier reminiscence administration enhancements (SPARK-43311): Though the RocksDB state retailer supplier is well-known to be helpful to handle reminiscence points on the state, there was no fine-grained reminiscence administration and there have nonetheless been some occurrences of reminiscence points with RocksDB. Apache Spark™ 3.5 introduces extra fine-grained reminiscence administration which permits customers to cap the overall reminiscence utilization throughout RocksDB situations in the identical executor course of, enabling customers to purpose about and configure the reminiscence utilization per executor course of.

Introduces dropDuplicatesWithinWatermark (SPARK-42931): In response to accrued expertise from utilizing dropDuplicates() with streaming queries, Apache Spark™ 3.5 introduces a brand new API dropDuplicatesWithinWatermark() which deduplicates occasions with out requiring the timestamp for occasion time to be the identical, so long as the timestamp for these occasions are shut sufficient to suit inside the watermark delay. With this new function, customers can deal with the case like “Timestamp for occasion time might differ even for occasions to be thought of as duplicates.” For instance, one sensible case is when the consumer ingests to Kafka with out an idempotent producer, and makes use of the automated timestamp within the report because the occasion time.

English SDK

The English SDK for Apache Spark is a groundbreaking device that revolutionizes your information engineering and analytics workflow through the use of English as your programming language. Designed to streamline complicated operations, this SDK minimizes code complexity, enabling you to focus on extracting priceless insights out of your information.

Rework DataFrames with Plain English

The `df.ai.remodel()` technique means that you can manipulate DataFrames utilizing easy English phrases. For instance:

transformed_df = revenue_df.ai.remodel('What are the best-selling and the second best-selling merchandise in each class?')Internally, this command is translated to the next SQL question, which is then executed and the result’s saved in a brand new DataFrame:

WITH ranked_products AS (

SELECT

product,

class,

income,

ROW_NUMBER() OVER (PARTITION BY class ORDER BY income DESC) as rank

FROM spark_ai_temp_view_d566e4

)

SELECT product, class, income

FROM ranked_products

WHERE rank IN (1, 2)

Visualize Information with Plain English

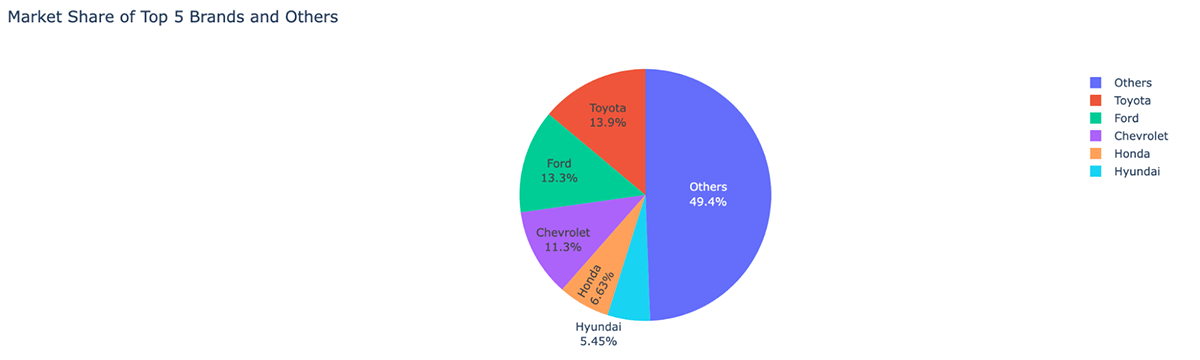

The `df.ai.plot()` technique gives a easy option to visualize your information. You possibly can specify the kind of plot and the information to incorporate, all in plain English. For instance:

auto_df.ai.plot('pie chart for US gross sales market shares, present the highest 5 manufacturers and the sum of others')

Extra Sources

For extra in-depth info and examples, go to our GitHub repository and weblog submit.

Past the Headlines: Extra in Apache Spark™ 3.5

Whereas the highlight typically falls on groundbreaking options, the true hallmark of a permanent platform is its deal with usability, stability, and incremental enchancment. To that finish, Apache Spark 3.5 has tackled and resolved an astonishing 1324 points, due to the collaborative efforts of over 198 contributors. These aren’t simply people, however groups from influential corporations like Databricks, Apple, Nvidia, Linkedin, UBS, Baidu, and lots of extra. Though this weblog submit has honed in on the headline-grabbing developments in SQL, Python, and streaming, Spark 3.5 gives a plethora of different enhancements not mentioned right here. These embrace adaptive question execution for SQL cache, decommission enhancements and new DSV2 extensions — to call only a few. Dive into the launch notes for a full account of those further capabilities.

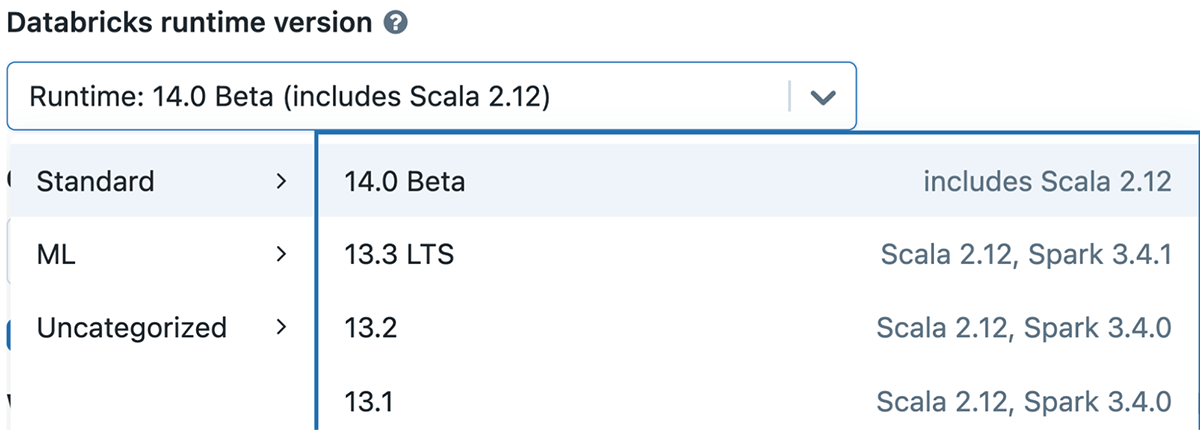

Get Began with Spark 3.5 Right this moment

If you wish to experiment with Apache Spark 3.5 on Databricks Runtime 14.0, you may simply achieve this by signing up for both the free Databricks Group Version or the Databricks Trial. When you’re in, firing up a cluster with Spark 3.5 is as straightforward as choosing model “14.0” You may be up and operating, exploring all that Spark 3.5 has to supply, in only a few minutes.

[ad_2]