
As the lakehouse becomes increasingly mission-critical to data-forward organizations, so too grows the risk that unexpected events, outages, and security incidents could derail their operations in new and unforeseen ways. Databricks offers several key observability features to help customers get ahead of this new set of threats and give them visibility into their lakehouse like never before.

From a security standpoint, one of the ways in which organizations have adapted to the modern world is to rely on the principle of “Never Trust, Always Verify” by following a Zero Trust Architecture (ZTA) model. In this blog, we’ll show you how to get started with a ZTA on your Databricks Lakehouse Platform, and share a Databricks Notebook that will automatically generate a series of SQL queries and alerts for you. If you normally use Terraform for this kind of thing we've got you covered too, simply check out the code here.

What are System Tables?

System Tables serve as a centralized operational data store, backed by Delta Lake and governed by Unity Catalog. System Tables can be queried in any language, allowing them to be used as the basis for a wide range of different use cases, from BI to AI and even Generative AI. Some of the most common use cases we've started to see customers implement on top of System Tables are:

- Usage analytics

- Consumption/cost forecasting

- Efficiency analysis

- Security & compliance audits

- SLO (Service Level Objective) analytics and reporting

- Actionable DataOps

- Data quality monitoring and reporting

Although a number of different schemas are available, in this blog we’re mostly going to focus on the system.access.audit table, and more specifically how it can be used to augment a Zero Trust Architecture on Databricks.

Audit Logs

The system.access.audit table serves as your system of record for all of the material events happening on your Databricks Lakehouse Platform. Some of the use cases that might be powered by this table are:

- Security and legal compliance

- Audit analytics

- Acceptable Use Policy (AUP) monitoring and investigations

- Security and Incident Response Team (SIRT) investigations

- Forensic analysis

- Outage investigations and postmortems

- Indicators of Compromise (IoC) detection

- Indicators of Attack (IoA) detection

- Threat modeling

- Threat hunting

To analyze these kinds of logs in the past, customers needed to set up cloud storage, configure cloud principals and policies, and then build and schedule an ETL pipeline to process and prepare the data. Now, with the announcement of System Tables, all you need to do is opt in and all of the data you need will automatically be made available to you. Best of all, it works exactly the same across all supported clouds.

“Never Trust, Always Verify” on your lakehouse with System Tables

Some of the key concepts of a Zero Trust Architecture (ZTA) are:

- Continuous verification of users and access

- Identify your most privileged users and service accounts

- Map your data flows

- Assign access rights based on the principle of least privilege

- Monitoring is crucial

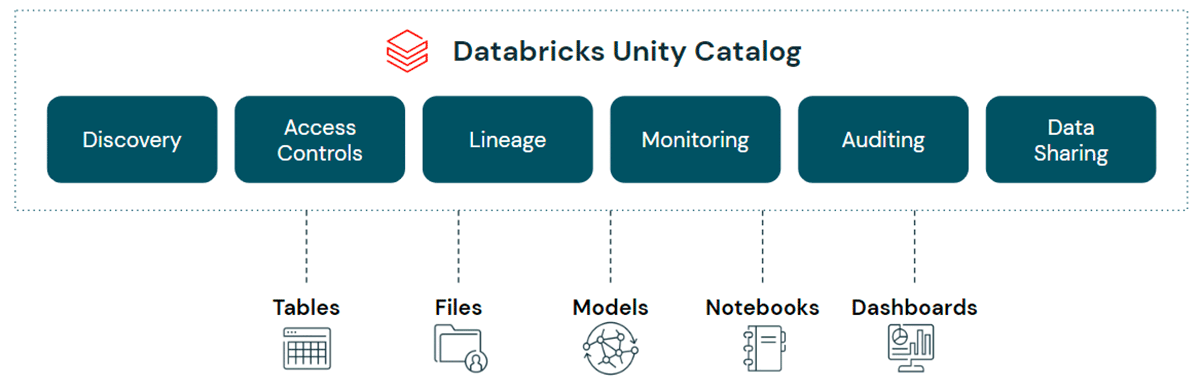

In this blog we’ll primarily focus on Monitoring is crucial, but it’s worth briefly mentioning how Unity Catalog helps data-forward organizations implement a Zero Trust Architecture across its wider feature set too:

- Continuous verification of users and access:
  - Unity Catalog validates permissions against every request, granting short-lived, down-scoped tokens to authorized users.
  - Whilst identity access management in UC offers the first proactive line of defense, in order to “Never Trust, Always Verify” we’ll need to couple that with retrospective monitoring. Access management on its own won't help us detect and resolve misconfigured privileges or policies, or permissions drift when someone leaves or changes roles within an organization.

- Identify your most privileged users and service accounts:
  - Unity Catalog’s built-in system.information_schema gives a centralized view of who has access to which securables, allowing administrators to easily identify their most privileged users.
  - Whilst the information_schema gives a current view, this can be combined with system.access.audit logs to monitor grants/revocations/privileges over time.

- Map your data flows:
  - Unity Catalog provides automated data lineage tracking in real time, down to the column level.
  - Whilst lineage can be explored via the UI (see the docs for AWS and Azure), thanks to System Tables it can also be queried programmatically. Check out the docs on AWS and Azure and look out for more blogs on this topic soon!

- Assign access rights based on the principle of least privilege:
  - Unity Catalog’s unified interface greatly simplifies the management of access policies to data and AI assets, as well as consistently applying those policies on any cloud or data platform.
  - What’s more, System Tables follow the principle of least privilege out of the box too!
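The idea of pairing a current view of privileges with an audit trail of grants and revocations can be sketched in a few lines of Python. This is only an illustration: the event shape (`event_time`, `action`, `principal`, `privilege`, `securable`) is a hypothetical, pre-extracted form of permission-change events, not the actual layout of system.access.audit or system.information_schema.

```python
from datetime import datetime


def privileges_at(events, as_of):
    """Replay grant/revoke events in time order and return the set of
    (principal, privilege, securable) tuples held at `as_of`."""
    held = set()
    for e in sorted(events, key=lambda e: e["event_time"]):
        if e["event_time"] > as_of:
            break  # everything after this point is in the future
        key = (e["principal"], e["privilege"], e["securable"])
        if e["action"] == "grant":
            held.add(key)
        elif e["action"] == "revoke":
            held.discard(key)
    return held


# Hypothetical permission-change events (assumed already extracted from audit logs).
permission_events = [
    {"event_time": datetime(2024, 1, 1), "action": "grant",
     "principal": "alice", "privilege": "SELECT", "securable": "main.sales.orders"},
    {"event_time": datetime(2024, 1, 5), "action": "grant",
     "principal": "alice", "privilege": "MODIFY", "securable": "main.sales.orders"},
    {"event_time": datetime(2024, 1, 9), "action": "revoke",
     "principal": "alice", "privilege": "MODIFY", "securable": "main.sales.orders"},
]

before = privileges_at(permission_events, datetime(2024, 1, 6))
after = privileges_at(permission_events, datetime(2024, 1, 10))

# Privileges that were held on 6 January but were gone by 10 January.
print(sorted(before - after))
```

Comparing two points in time like this is one way to surface permissions drift; in practice the same replay could be expressed as a SQL query over the audit table.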

Monitoring is crucial

Effective monitoring is one of the key foundations of an effective Zero Trust Architecture. All too often, people can be lured into the trap of thinking that for effective monitoring it's sufficient to capture the logs that we might need and only query them in the event of an investigation or incident. But in order to align to the “Never Trust, Always Verify” principle, we’ll have to be more proactive than that. Luckily, with Databricks SQL it's easy to write SQL queries against the system.access.audit table, and then schedule them to run automatically, instantly notifying you of potentially suspicious events.

Quickstart notebook

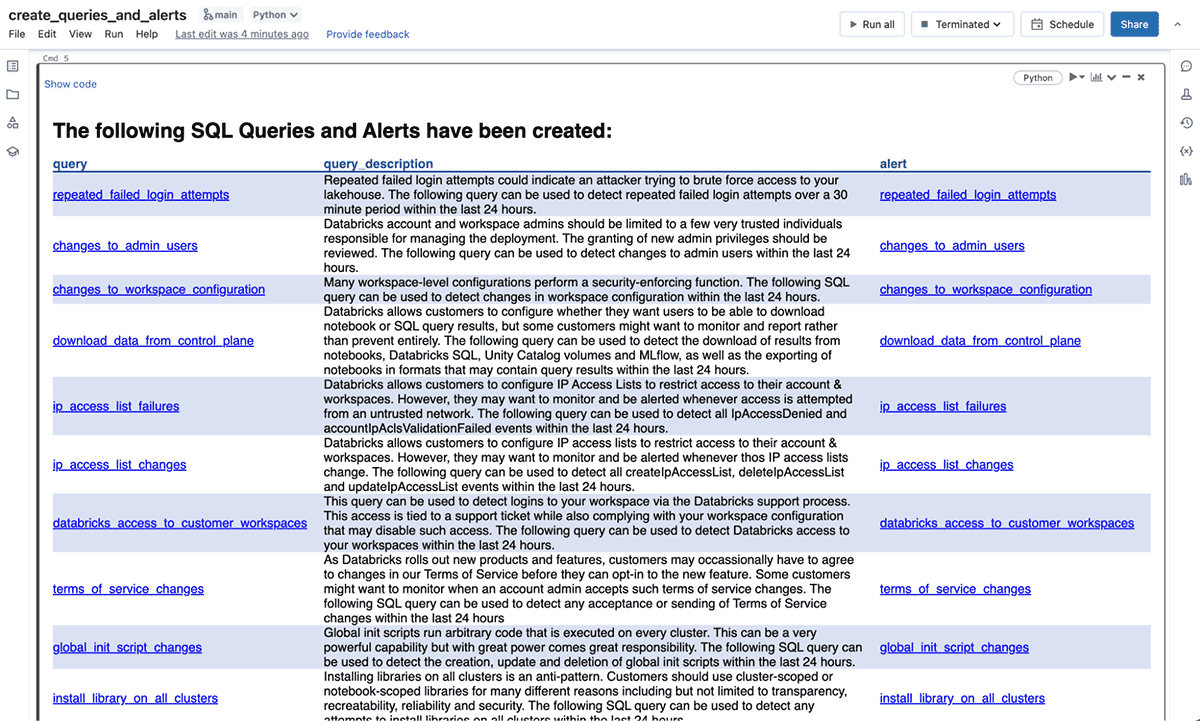

Clone the repo into your Databricks workspace (see the docs for AWS and Azure) and run the create_queries_and_alerts notebook. Some examples of the 40+ queries and alerts that will be automatically generated for you are:

| Query / Alert Name | Query / Alert Description |
|---|---|
| Repeated Failed Login Attempts | Repeated failed login attempts over a 60-minute period within the last 24 hours. |
| Data Downloads from the Control Plane | High numbers of downloads of results from notebooks, Databricks SQL, Unity Catalog volumes and MLflow, as well as the exporting of notebooks in formats that may contain query results, within the last 24 hours. |
| IP Access List Failures | All attempts to access your account or workspace(s) from untrusted IP addresses within the last 24 hours. |
| Databricks Access to Customer Workspaces | All logins to your workspace(s) via the Databricks support process within the last 24 hours. This access is tied to a support ticket while also complying with your workspace configuration that may disable such access. |
| Destructive Activities | High number of delete events over a 60-minute period within the last 24 hours. |
| Potential Privilege Escalation | High number of permissions changes over a 60-minute period within the last 24 hours. |
| Repeated Access to Secrets | Repeated attempts to access secrets over a 60-minute period within the last 24 hours. This could be used to detect attempts to steal credentials. |
| Repeated Unauthorized Data Access Requests | Repeated unauthorized attempts to access Unity Catalog securables over a 60-minute period within the last 24 hours. Repeated failed requests could indicate privilege escalation, data exfiltration attempts or an attacker trying to brute force access to your data. |
| Antivirus Scan Infected Files Detected | For customers using our Enhanced Security and Compliance Add-On, detect any infected files found on the hosts within the last 24 hours. |
| Suspicious Host Activity | For customers using our Enhanced Security and Compliance Add-On, detect suspicious events flagged by the behavior-based security monitoring agent within the last 24 hours. |
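To make the windowing logic behind an alert like Repeated Failed Login Attempts concrete, here is a minimal Python sketch that runs over in-memory records rather than the real table. The field names (`event_time`, `user`, `action_name`, `status_code`), the treatment of any non-200 status as a failure, and the threshold of 5 are illustrative assumptions, not the schema or logic used by the quickstart notebook.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def repeated_failed_logins(events, now, threshold=5,
                           window=timedelta(minutes=60),
                           lookback=timedelta(hours=24)):
    """Return users with at least `threshold` failed logins inside any
    `window`-long span during the `lookback` period ending at `now`."""
    failures = defaultdict(list)
    for e in events:
        is_recent = now - lookback <= e["event_time"] <= now
        # Assumption: a login with a non-200 status code counts as failed.
        is_failed_login = e["action_name"] == "login" and e["status_code"] != 200
        if is_recent and is_failed_login:
            failures[e["user"]].append(e["event_time"])

    flagged = set()
    for user, times in failures.items():
        times.sort()
        start = 0
        # Slide a window over the sorted timestamps of this user's failures.
        for end in range(len(times)):
            while times[end] - times[start] > window:
                start += 1
            if end - start + 1 >= threshold:
                flagged.add(user)
                break
    return sorted(flagged)


now = datetime(2024, 1, 2, 12, 0)
sample_events = (
    # Six failures within ten minutes: expected to be flagged.
    [{"event_time": now - timedelta(minutes=m), "user": "mallory@example.com",
      "action_name": "login", "status_code": 401} for m in range(0, 12, 2)]
    # Six failures spread three hours apart: not expected to be flagged.
    + [{"event_time": now - timedelta(hours=h), "user": "alice@example.com",
        "action_name": "login", "status_code": 401} for h in range(0, 18, 3)]
    # A successful login is ignored.
    + [{"event_time": now, "user": "bob@example.com",
        "action_name": "login", "status_code": 200}]
)

print(repeated_failed_logins(sample_events, now))
```

The two time parameters mirror the wording used in the table: `window` is the 60-minute period and `lookback` is the last 24 hours. The threshold is the knob you would most likely tune to balance noise against sensitivity.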

Once you have run the notebook, if you scroll to the bottom you'll see an HTML table with links to each of the SQL queries and alerts:

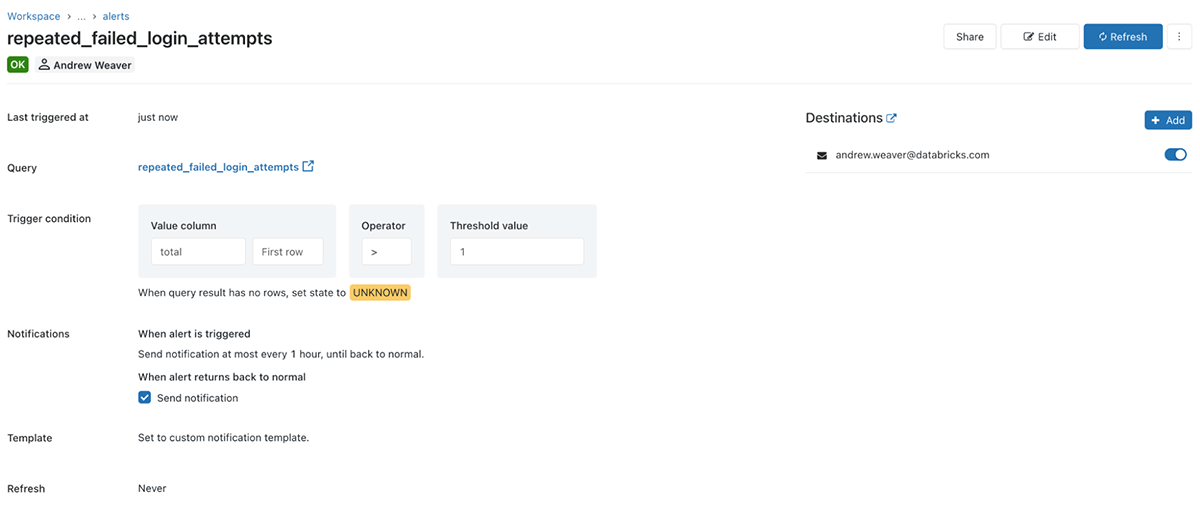

To test the alert, simply follow the link and select refresh. If it hasn’t been triggered you'll see a green OK in the top left:

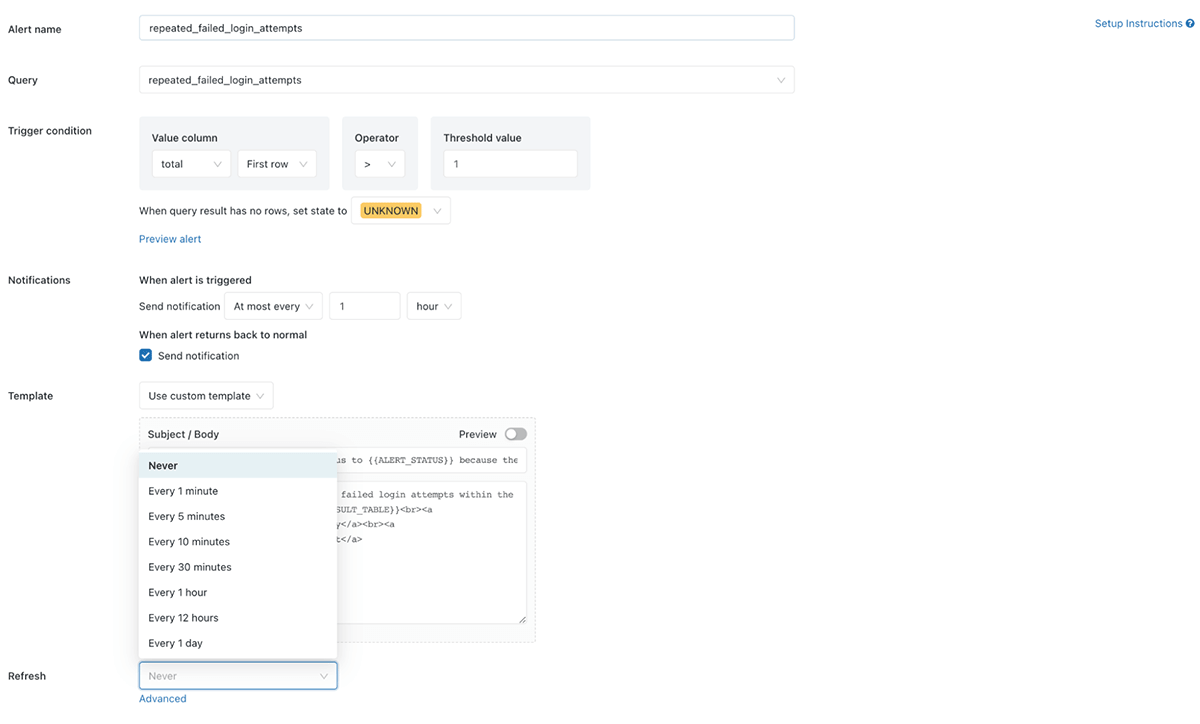

If it has been triggered you'll receive an email with a table including all of the events that have triggered the alert. To set the alert to run on a schedule, just select edit and then Refresh and choose how often you want the alert to run.

You can customize the alert further as needed, including by adding additional notification destinations such as email addresses, Slack channels or MS Teams (among others!). Check out our documentation for AWS and Azure for full details on all of the options.

Advanced Use Cases

The monitoring and detection use cases we've looked at so far are relatively simple, but because System Tables can be queried in any language, the options are essentially limitless! Consider the following ideas:

- We might carry out static evaluation of pocket book instructions for detecting suspicious habits or unhealthy practices comparable to hard-coded secrets and techniques, credential leaks, and different examples, as described in our earlier weblog

- We might mix the information from System Tables with extra information sources like HR methods – for instance to routinely flag when individuals are on trip, sabbatical, and even have been marked as having left the corporate however we see sudden actions

- We might mix the information from System Tables with information sources like

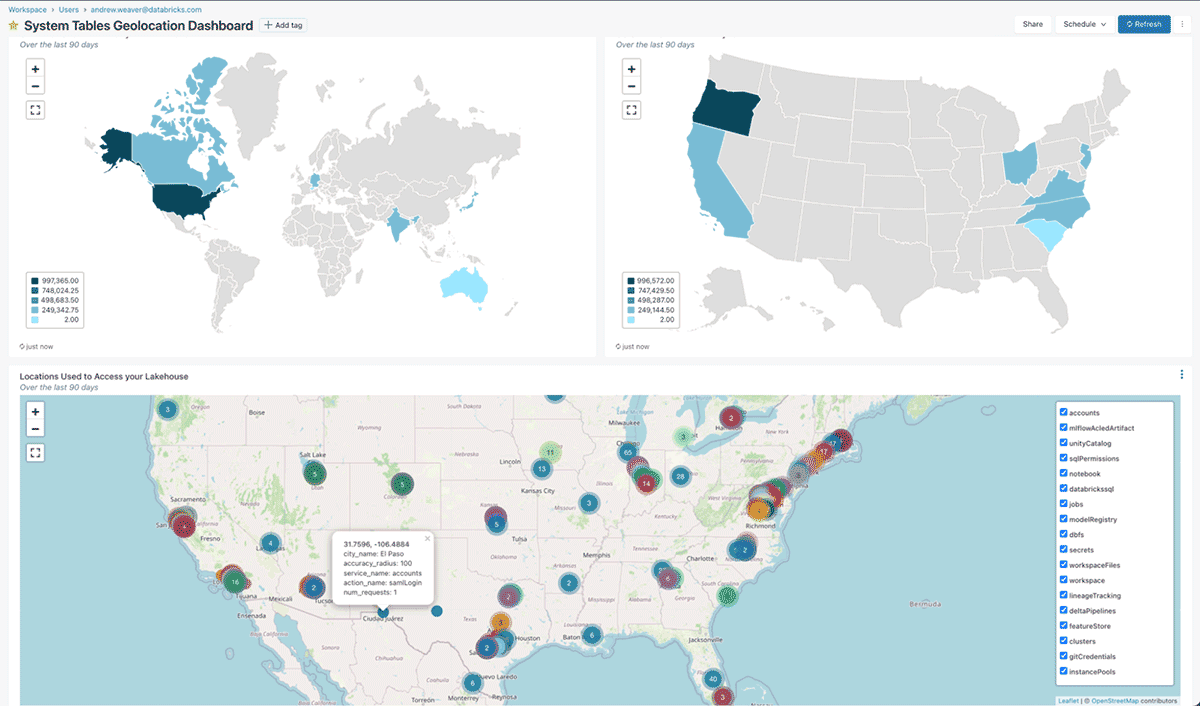

system.information_schemato tailor our monitoring to concentrate on our most privileged customers - We might mix the information from System Tables with Geolocation datasets to observe compliance towards our entry and information residency necessities. Check out the

geolocation_function_and_queries.sqlpocket book for an instance of what this would possibly appear like:

- We might fine-tune an LLM mannequin on verbose audit logs to supply a coding-assistant that’s tailor-made to our group’s coding practices

- We might fine-tune an LLM mannequin on a mix of System Tables and information sources like

system.information_schemato supply solutions to questions on our information in pure language, to each information groups and enterprise customers alike - We might prepare Unsupervised Studying fashions to detect anomalous occasions
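As one illustration of the ideas above, the following Python sketch joins audit events with an HR extract to flag activity from people who are not expected to be active. Everything here is hypothetical: the record shapes, the status labels and the action names are assumptions for the sake of the example, and a real implementation would more likely be a join in SQL or a DataFrame API.

```python
from datetime import date


def flag_unexpected_activity(audit_events, hr_status):
    """Return audit events whose user was marked as away or departed
    on the day the event happened."""
    flagged = []
    for e in audit_events:
        for s in hr_status.get(e["user"], []):
            if s["start"] <= e["event_date"] <= s["end"]:
                # Attach the HR status that makes this event unexpected.
                flagged.append({**e, "hr_status": s["status"]})
                break
    return flagged


# Hypothetical HR extract: periods during which no activity is expected.
hr_status = {
    "alice@example.com": [
        {"status": "vacation", "start": date(2024, 3, 1), "end": date(2024, 3, 14)}],
    "carol@example.com": [
        {"status": "left_company", "start": date(2024, 2, 1), "end": date.max}],
}

# Hypothetical, simplified audit events.
audit_events = [
    {"user": "alice@example.com", "event_date": date(2024, 3, 5), "action_name": "getTable"},
    {"user": "alice@example.com", "event_date": date(2024, 3, 20), "action_name": "getTable"},
    {"user": "bob@example.com", "event_date": date(2024, 3, 5), "action_name": "login"},
    {"user": "carol@example.com", "event_date": date(2024, 3, 6), "action_name": "login"},
]

hits = flag_unexpected_activity(audit_events, hr_status)
for hit in hits:
    print(hit["user"], hit["event_date"], hit["hr_status"])
```

Modelling "left the company" as an open-ended period (ending at `date.max`) keeps the check uniform: every HR status is just a date range during which activity should raise a flag.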

Conclusion

In the year since our last blog about audit logging, both the Databricks Lakehouse Platform and the world have changed significantly. Back then, just getting access to the data you needed required a number of steps before you could even think about how to generate actionable insights. Now, thanks to System Tables, all of the data that you need is just a button click away. A Zero Trust Architecture (or Never Trust, Always Verify as it has come to be known) is just one of many use cases that can be powered by System Tables. Check out the docs for AWS and Azure to enable System Tables on your Databricks account today!
