My summer internship on the PySpark team was a whirlwind of exciting events. The PySpark team develops the Python APIs of the open source Apache Spark library and Databricks Runtime. Over the course of the 12 weeks, I drove a project to implement a new built-in PySpark test framework. I also contributed to an open source Databricks Labs project called English SDK for Apache Spark, which I presented in person at the 2023 Data + AI Summit (DAIS).

From improving the PySpark testing experience to lowering the barrier of entry to Spark with English SDK, my summer was all about making Spark more accessible.

PySpark Test Framework

My primary internship project focused on starting a built-in PySpark test framework (SPARK-44042), which is included in the upcoming Apache Spark release. The code is also available in the open source Spark repo.

Why a built-in test framework?

Prior to our built-in PySpark test framework, there was a lack of Spark-provided tools to help developers write their own tests. Developers could turn to blog posts and online forums, but it was difficult to piece together so many disparate resources. This project aimed to consolidate testing resources under the official Spark repository to simplify the PySpark developer experience.

The beauty of open source is that this is just the beginning! We're excited for the open source community to contribute to the test framework, improving the existing features and also adding new ones. Please check out the PySpark Test Framework SPIP and the Spark JIRA board!

Key Features

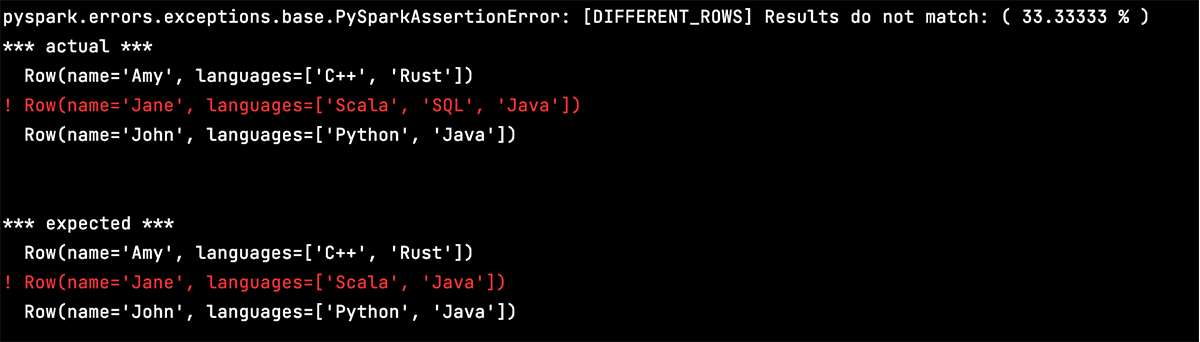

DataFrame Equality Test Util (SPARK-44061): The assertDataFrameEqual util function allows for equality comparison between two DataFrames, or between lists of rows. Configurable features include the ability to customize the approximate precision level for float values, and the ability to choose whether to take row order into account. This util function can be useful for testing DataFrame transformation functions.

Schema Equality Test Util (SPARK-44216): The assertSchemaEqual function allows for comparison between two DataFrame schemas. The util supports nested schema types. By default, it ignores the "nullable" flag in complex types (StructType, MapType, ArrayType) when asserting equality.

Improved Error Messages (SPARK-44363): One of the most common pain points among Spark developers is debugging confusing error messages.

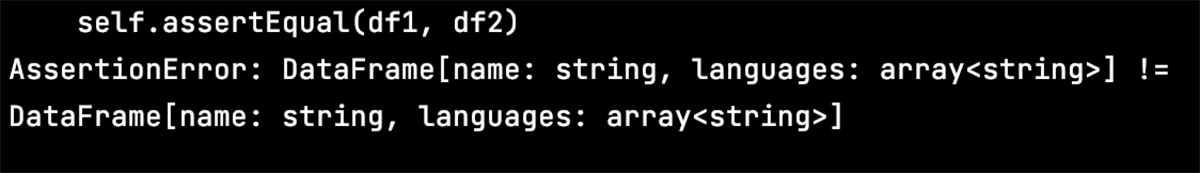

Take the following error message, for example, where I try asserting equality between unequal schemas using the built-in unittest assertEqual method. From the error message, we can tell that the schemas are unequal, but it's hard to see exactly where they differ and how to correct them.

The new test util functions include detailed, color-coded test error messages, which clearly indicate differences between unequal DataFrame schemas and the data in DataFrames.

English SDK for Apache Spark

Overview of English SDK

Something I learned this summer is that things move very quickly at Databricks. Sure enough, I spent much of the summer on a new project that was actually only started during the internship: the English SDK for Apache Spark!

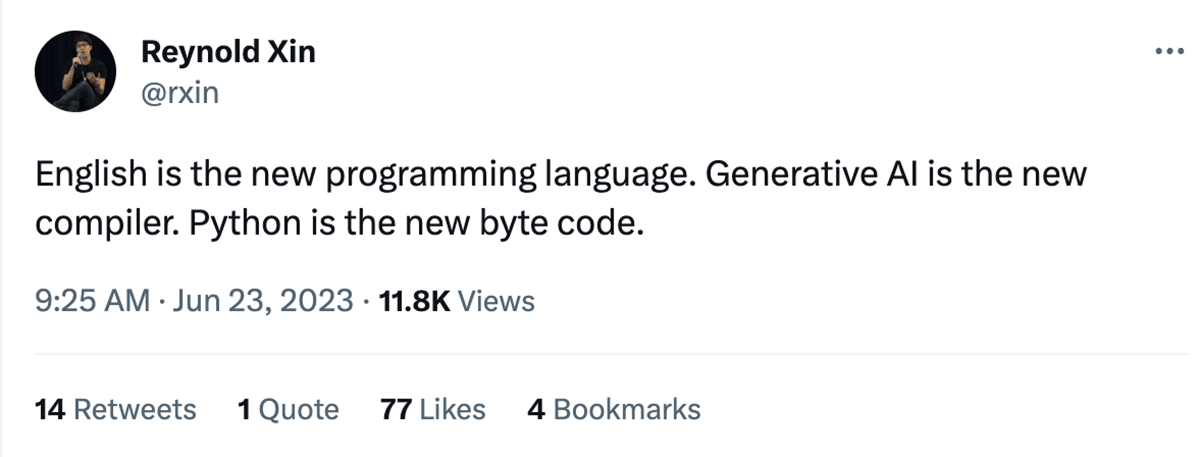

The idea behind the English SDK is summed up by the tweet above from Reynold Xin, Chief Architect at Databricks and a co-founder of Spark. With recent advances in generative AI, what if we could use English as a programming language and generative AI as the compiler to get PySpark and SQL code? By doing this, we lower the barrier of entry to Spark development, democratizing access to powerful data analytics tools. English SDK simplifies complex coding tasks, allowing data analysts to focus more on deriving data insights.

English SDK also has many powerful functionalities, such as generating plots, searching the web to ingest data into a DataFrame, and describing DataFrames in plain English.

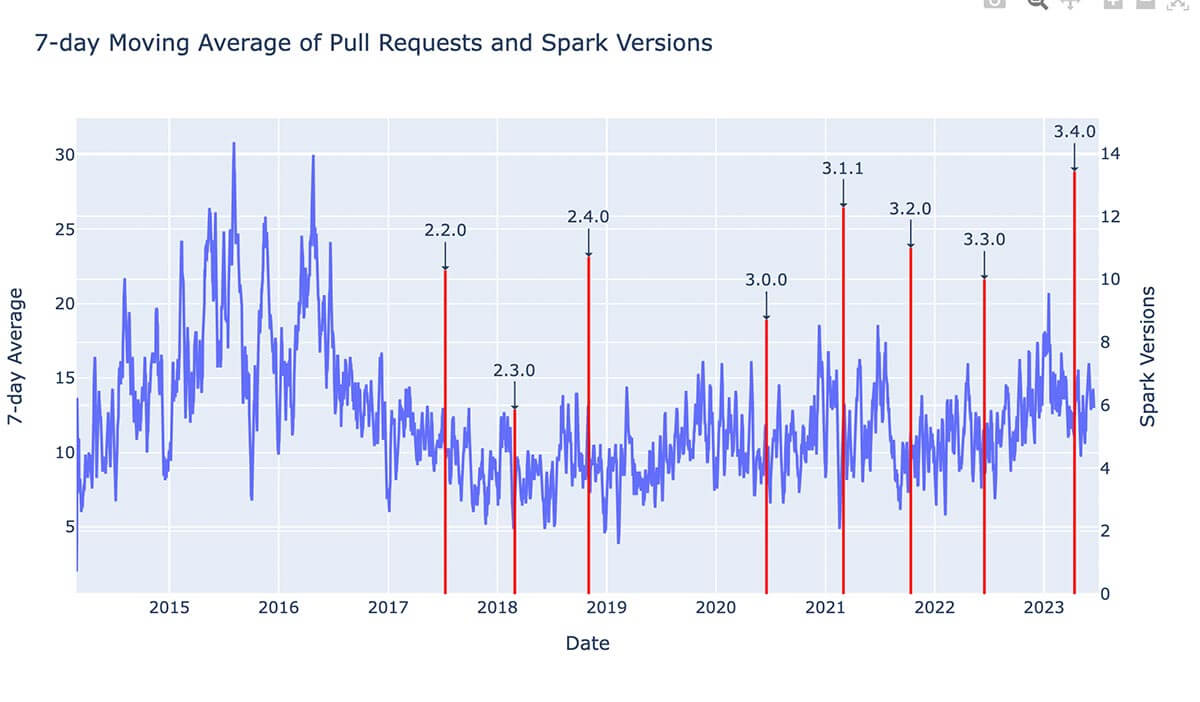

For example, say I have a DataFrame called github_df with data about PRs in the OSS Spark repo. Say I want to see the average number of PRs over time, and how they relate to Spark release dates. All I have to do is ask English SDK:

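```python
# assumes the English SDK (pyspark-ai) has already been activated, e.g. via SparkAI().activate()
github_df.ai.plot("show the 7 day moving average and annotate the spark version with a red line")
```

And it returns the plot for me.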

The English SDK project was unveiled at the 2023 Data + AI Summit. If you're interested in learning more about it, please check out the full blog post. This is also an ongoing open source project, and we welcome all contributions and feedback; just open an issue on the GitHub repo!

Internship Experience

DAIS Presentation

One of the coolest parts of my summer was presenting a demo of the English SDK at the annual Databricks Data + AI Summit (DAIS). This year's event was held in San Francisco from June 26-29, and there were over 30,000 virtual and in-person attendees!

The conference was a multi-day event, and I also attended many of the other summit sessions. I went to keynote sessions (and was a bit starstruck seeing some of the speakers!), collected lots of Dolly-themed swag, and learned from world-class experts at breakout sessions. The energy at the event was infectious, and I'm so grateful I had this experience.

Open Source Design Process

Since the PySpark Test Framework was a new initiative, I saw the ins and outs of the software design process, from writing a design doc to attending customer meetings. I also got to experience unique aspects of the open source Apache Spark design process, including writing a Spark Project Improvement Proposal (SPIP) document and hosting online discussions about the initiative. I received lots of great feedback from the open source community, which helped me iterate on and improve the initial design. Fortunately, when the voting period came around, the initiative passed with ten +1s!

This internship project was a unique look into the full-time OSS developer experience, as it strengthened not only my technical coding skills but also my soft skills, such as communication, writing, and teamwork.

Team Bonding Events

The Spark OSS team is very international, with team members all around the world. Our team stand-up meetings were always very lively, and I loved getting to know everyone throughout the summer. Fortunately, I also got to meet many of my international team members in person during the week of DAIS!

My intern cohort also happened to become some of my favorite people ever. We all got really close over the summer, from eating lunch together at the office to exploring San Francisco on the weekends. Some of my favorite memories from the summer include hiking the Lands End Trail, getting delicious dim sum in Chinatown, and taking a weekend trip to Yosemite.

Conclusion

My 12-week internship at Databricks was an amazing experience. I was surrounded by exceptionally smart yet humble team members, and I'll always remember the many lessons they shared with me.

Special thanks to my mentor Allison Wang, my manager Xiao Li, and the entire Spark team for their invaluable mentorship and guidance. Thanks also to Hyukjin Kwon, Gengliang Wang, Matthew Powers, and Allan Folting, whom I worked closely with on the English SDK for Apache Spark and PySpark Test Framework projects.

Most of my work from the summer is open source, so you can check it out if you're interested! (See: Spark, English SDK)

If you want to work on cutting-edge projects alongside industry leaders, I highly encourage you to apply to work at Databricks! Visit the Databricks Careers page to learn more about job openings across the company.