
Real-time AI is the future, and AI models have demonstrated incredible potential for predicting and generating media in various business domains. For the best results, these models must be informed by relevant data. AI-powered applications almost always need access to real-time data to deliver accurate results in the responsive user experience that the market has come to expect. Stale and siloed data can limit the potential value of AI to your customers and your business.

Confluent and Rockset power a critical architecture pattern for real-time AI. In this post, we’ll discuss why Confluent Cloud’s data streaming platform and Rockset’s vector search capabilities work so well to enable real-time AI app development and explore how an e-commerce innovator is using this pattern.

Understanding real-time AI application design

AI application designers follow one of two patterns when they need to contextualize models:

- Extending models with real-time data: Many AI models, like the deep learners that power Generative AI applications like ChatGPT, are expensive to train with the current state of the art. Often, domain-specific applications work well enough when the models are only periodically retrained. More generally applicable models, such as the Large Language Models (LLMs) powering ChatGPT-like applications, can work better with appropriate new information that was unavailable when the model was trained. As smart as ChatGPT appears to be, it can’t summarize current events accurately if it was last trained a year ago and not told what’s happening now. Application developers can’t expect to retrain models every time new information is generated. Rather, they enrich inputs with a finite context window of the most relevant information at query time.

- Feeding models with real-time data: Other models, however, can be dynamically retrained as new information is introduced. Real-time information can enhance the query’s specificity or the model’s configuration. Regardless of the algorithm, one’s favorite music streaming service can only give the best recommendations if it knows all of your recent listening history and what everyone else has played when it generalizes categories of consumption patterns.
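
The first pattern, enriching a model's input with the most relevant information at query time, can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not any vendor's implementation: `Doc`, `build_prompt`, and the character-based `max_context_chars` budget are hypothetical names, and the two-dimensional embeddings stand in for vectors that a real embedding model would produce.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    text: str
    embedding: list[float]  # assumed to come from some embedding model


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_prompt(question: str, query_embedding: list[float],
                 docs: list[Doc], max_context_chars: int = 200) -> str:
    """Rank docs by similarity to the query, then pack the best ones into a
    fixed-size context budget placed ahead of the question."""
    ranked = sorted(docs, key=lambda d: cosine(query_embedding, d.embedding),
                    reverse=True)
    context, used = [], 0
    for doc in ranked:
        if used + len(doc.text) > max_context_chars:
            continue  # skip anything that would overflow the budget
        context.append(doc.text)
        used += len(doc.text)
    return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"


# Hypothetical fresh facts; in practice these would arrive from a stream.
fresh_docs = [
    Doc("Auction A just started.", [1.0, 0.0]),
    Doc("Weather is mild.", [0.0, 1.0]),
    Doc("Auction A has 40 viewers.", [0.9, 0.1]),
]
print(build_prompt("What is happening in Auction A?", [1.0, 0.0],
                   fresh_docs, max_context_chars=50))
```

The model itself is never retrained here; only the prompt changes as newer documents become available, which is why the freshness of the retrieved data matters so much.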

The challenge is that no matter what type of AI model you are working with, the model can only produce valuable output relevant to this moment in time if it knows about the relevant state of the world at this moment in time. Models may need to know about events, computed metrics, and embeddings based on locality. We aim to coherently feed these diverse inputs into a model with low latency and without a complex architecture. Traditional approaches rely on cascading batch-oriented data pipelines, meaning data takes hours or even days to flow through the enterprise. As a result, the data made available is stale and of low fidelity.

Whatnot is an organization that faced this challenge. Whatnot is a social marketplace that connects sellers with buyers via live auctions. At the heart of their product lies their home feed, where users see recommendations for livestreams. As Whatnot states, “What makes our discovery problem unique is that livestreams are ephemeral content. We can’t recommend yesterday’s livestreams to today’s users and we need fresh signals.”

Ensuring that recommendations are based on real-time livestream data is critical for this product. The recommendation engine needs user, seller, livestream, computed metrics, and embeddings as a diverse set of real-time inputs.

“First and foremost, we need to know what is happening in the livestreams: livestream status changed, new auctions started, engaged chats and giveaways in the show, etc. Those things are happening fast and at a massive scale.”

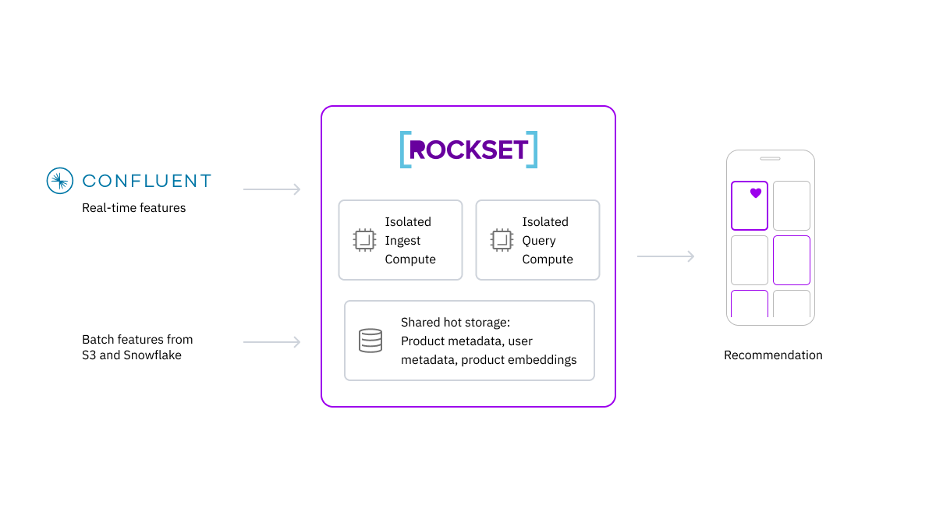

Whatnot chose a real-time stack based on Confluent and Rockset to handle this challenge. Using Confluent and Rockset together provides reliable infrastructure that delivers low data latency, assuring that data generated anywhere in the enterprise can be rapidly made available to contextualize machine learning applications.

Confluent is a data streaming platform enabling real-time data movement across the enterprise at any arbitrary scale, forming a central nervous system of data to fuel AI applications. Rockset is a search and analytics database capable of low-latency, high-concurrency queries on heterogeneous data supplied by Confluent to inform AI algorithms.

High-value, trusted AI applications require real-time data from Confluent Cloud

With Confluent, businesses can break down data silos, promote data reusability, improve engineering agility, and foster greater trust in data. Altogether, this allows more teams to securely and confidently unlock the full potential of all their data to power AI applications. Confluent enables organizations to make real-time contextual inferences on an astonishing amount of data by bringing well-curated, trustworthy streaming data to Rockset, the search and analytics database built for the cloud.

With easy access to data streams through Rockset’s integration with Confluent Cloud, businesses can:

- Create a real-time knowledge base for AI applications: Build a shared source of real-time truth for all your operational and analytical data, no matter where it lives, for sophisticated model building and fine-tuning.

- Bring real-time context at query time: Convert raw data into meaningful chunks with real-time enrichment and continually update your vector embeddings for GenAI use cases.

- Build governed, secured, and trusted AI: Establish data lineage, quality and traceability, providing all your teams with a clear understanding of data origin, movement, transformations and usage.

- Experiment, scale and innovate faster: Reduce innovation friction as new AI apps and models become available. Decouple data from your data science tools and production AI apps to test and build faster.

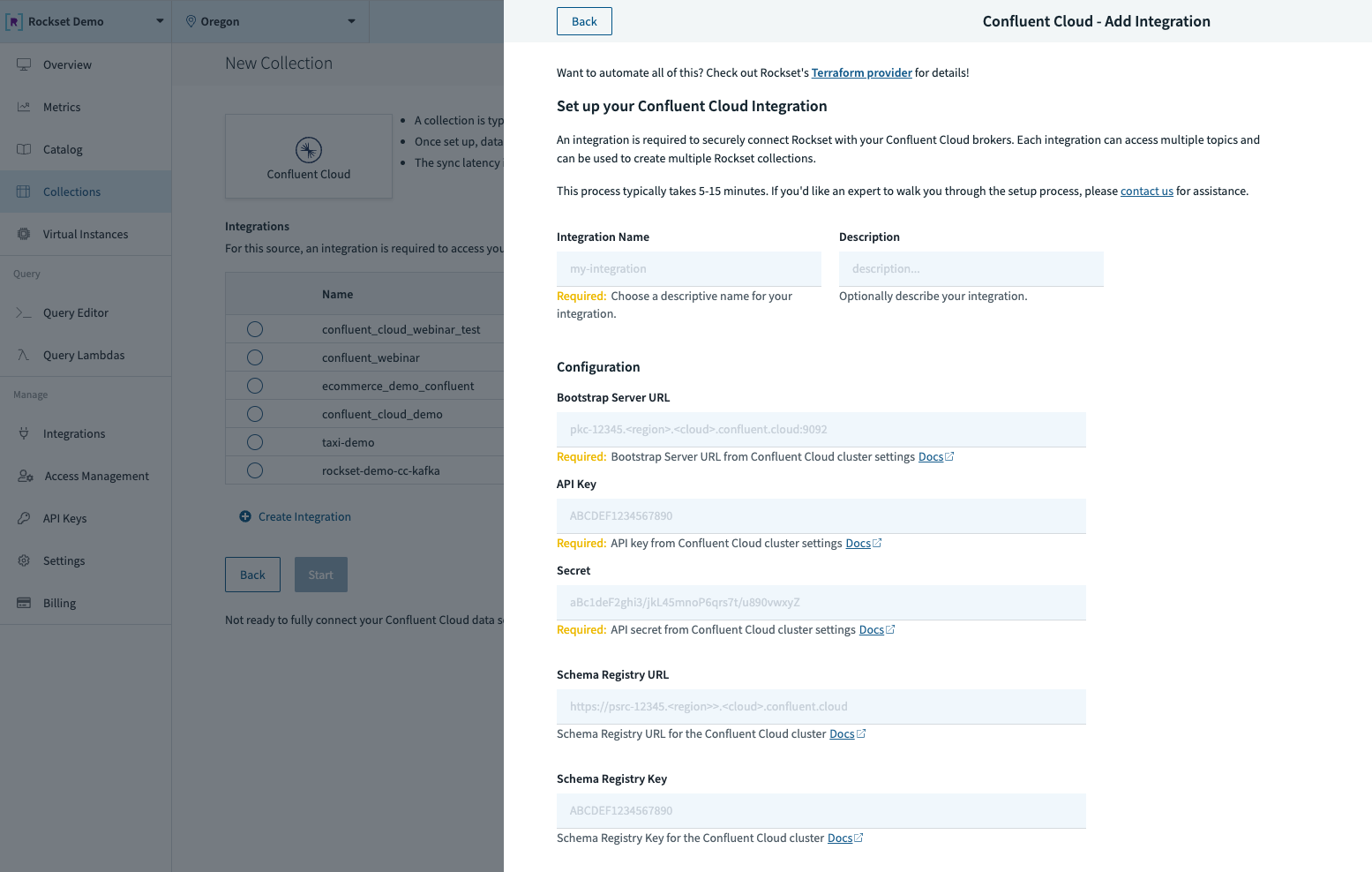

Rockset has built an integration that offers native support for Confluent Cloud and Apache Kafka®, making it simple and fast to ingest real-time streaming data for AI applications. The integration frees users from having to build, deploy or operate any infrastructure component on the Kafka side. The integration is continuous, so any new data in the Kafka topic is indexed in Rockset almost immediately, and pull-based, ensuring that data can be reliably ingested even in the face of bursty writes.
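
To see why a pull-based design copes with bursty writes, consider the toy model below. It is only an illustration of the general idea and says nothing about how Rockset's integration is actually built: `TopicLog` and `PullIngester` are hypothetical classes, and an in-memory list stands in for a Kafka topic partition.

```python
class TopicLog:
    """Toy stand-in for one Kafka topic partition: an append-only list."""

    def __init__(self):
        self.records = []

    def append(self, record):
        self.records.append(record)

    def read(self, offset, max_records):
        """Return up to max_records records starting at offset."""
        return self.records[offset:offset + max_records]


class PullIngester:
    """Pulls at most batch_size records per poll and tracks its own offset,
    so the consumer, not the producer, sets the ingest pace."""

    def __init__(self, log, batch_size):
        self.log = log
        self.batch_size = batch_size
        self.offset = 0
        self.index = {}  # toy "index": record id -> latest record

    def poll(self):
        batch = self.log.read(self.offset, self.batch_size)
        for record in batch:
            self.index[record["id"]] = record
        self.offset += len(batch)
        return len(batch)

    def lag(self):
        """Number of records written but not yet ingested."""
        return len(self.log.records) - self.offset


# A burst of writes lands all at once; the ingester drains it in bounded batches.
log = TopicLog()
for i in range(10):
    log.append({"id": i, "status": "live"})

ingester = PullIngester(log, batch_size=4)
while ingester.lag() > 0:
    ingester.poll()
print(len(ingester.index), "records indexed")
```

Because the log retains the records and the ingester remembers its offset, a burst shows up as temporary lag rather than as dropped writes.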

Real-time updates and metadata filtering in Rockset

While Confluent delivers the real-time data for AI applications, the other half of the AI equation is a serving layer capable of handling stringent latency and scale requirements. In applications powered by real-time AI, two performance metrics are top of mind:

- Data latency measures the time from when data is generated to when it is queryable. In other words, how fresh is the data on which the model is operating? For a recommendations example, this could manifest in how quickly vector embeddings for newly added content can be added to the index or whether the most recent user activity can be incorporated into recommendations.

- Query latency is the time taken to execute a query. In the recommendations example, we are running an ML model to generate user recommendations, so the ability to return results in milliseconds under heavy load is essential to a positive user experience.
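
Both metrics are simple to compute once the right timestamps are captured. The sketch below is one possible way to do it; the function names are hypothetical, and reporting query latency as a tail percentile (such as p95) is a common convention assumed here, not something prescribed by either product.

```python
import math


def data_latency_ms(generated_at_ms: float, queryable_at_ms: float) -> float:
    """Time between an event being produced and being visible to queries."""
    return queryable_at_ms - generated_at_ms


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical measurements, in milliseconds.
print(data_latency_ms(generated_at_ms=1_000, queryable_at_ms=1_250))
query_latencies = [12, 15, 11, 14, 13, 48, 12, 16, 13, 14]
print(percentile(query_latencies, 50), percentile(query_latencies, 95))
```

Tracking a tail percentile rather than the mean keeps occasional slow queries under heavy load from being averaged away.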

With these considerations in mind, what makes Rockset an ideal complement to Confluent Cloud for real-time AI? Rockset offers vector search capabilities that open up possibilities for using streaming data as input to semantic search and generative AI. Rockset customers implement ML applications such as real-time personalization and chatbots today, and while vector search is a necessary component, it is by no means sufficient.

Beyond support for vectors, Rockset retains the core performance characteristics of a search and analytics database, providing a solution to some of the hardest challenges of running real-time AI at scale:

- Real-time updates are what enable low data latency, so that ML models can use the most up-to-date embeddings and metadata. Data freshness is often an issue because most analytical databases do not handle incremental updates efficiently, often requiring batching of writes or occasional reindexing. Rockset supports efficient upserts because it is mutable at the field level, making it well-suited to ingesting streaming data, CDC from operational databases, and other constantly changing data.

- Metadata filtering is a useful, perhaps even essential, companion to vector search that restricts nearest-neighbor matches based on specific criteria. Commonly used strategies, such as pre-filtering and post-filtering, have their respective drawbacks. In contrast, Rockset’s Converged Index accelerates many types of queries, regardless of the query pattern or shape of the data, so vector search and filtering can run efficiently in combination on Rockset.
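
The difference between the two filtering strategies is easiest to see on a tiny data set. The sketch below uses brute-force cosine similarity over hypothetical livestream records; it is not how Rockset's Converged Index works, and the function names and the `live` field are assumptions made for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def post_filter_search(query, items, predicate, k):
    """Take the k nearest neighbors first, then drop non-matching ones."""
    nearest = sorted(items, key=lambda it: cosine(query, it["embedding"]),
                     reverse=True)[:k]
    return [it["id"] for it in nearest if predicate(it)]


def pre_filter_search(query, items, predicate, k):
    """Filter on metadata first, then rank only the survivors."""
    candidates = [it for it in items if predicate(it)]
    nearest = sorted(candidates, key=lambda it: cosine(query, it["embedding"]),
                     reverse=True)[:k]
    return [it["id"] for it in nearest]


# Hypothetical livestreams: the two closest matches have already ended.
streams = [
    {"id": "a", "embedding": [1.0, 0.0], "live": False},
    {"id": "b", "embedding": [0.9, 0.1], "live": False},
    {"id": "c", "embedding": [0.8, 0.2], "live": True},
    {"id": "d", "embedding": [0.1, 0.9], "live": True},
]


def is_live(item):
    return item["live"]


print(post_filter_search([1.0, 0.0], streams, is_live, k=2))  # fewer than k results
print(pre_filter_search([1.0, 0.0], streams, is_live, k=2))
```

In this example post-filtering returns nothing, because both of the top-2 neighbors fail the filter. Pre-filtering returns a full result set, but in this brute-force form it has to score every surviving candidate, which is the kind of cost that typically grows with the size of the filtered set.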

Rockset’s cloud architecture, with compute-compute separation, also enables streaming ingest to be isolated from queries along with seamless concurrency scaling, without replicating or moving data.

How Whatnot is innovating in e-commerce using Confluent Cloud with Rockset

Let’s dig deeper into Whatnot’s story featuring both products.

Whatnot is a fast-growing e-commerce startup innovating in the livestream shopping market, which is estimated to reach $32B in the US in 2023 and double over the next 3 years. They’ve built a live-video marketplace for collectors, fashion enthusiasts, and superfans that allows sellers to go live and sell products directly to buyers through their video auction platform.

Whatnot’s success depends on effectively connecting buyers and sellers through their auction platform for a positive experience. It gathers intent signals in real time from its audience: the videos they watch, the comments and social interactions they leave, and the products they buy. Whatnot uses this data in their ML models to rank the most popular and relevant videos, which they then present to users in the Whatnot product home feed.

To further drive growth, they needed to personalize their suggestions in real time to ensure users see interesting and relevant content. This evolution of their personalization engine required significant use of streaming data and buyer and seller embeddings, as well as the ability to deliver sub-second analytical queries across sources. With plans to grow usage 4x in a year, Whatnot required a real-time architecture that could scale efficiently with their business.

Whatnot uses Confluent as the backbone of their real-time stack, where streaming data from multiple backend services is centralized and processed before being consumed by downstream analytical and ML applications. After evaluating various Kafka solutions, Whatnot chose Confluent Cloud for its low management overhead, ability to use Terraform to manage its infrastructure, ease of integration with other systems, and robust support.

Whatnot selected Rockset for its serving infrastructure because of the need for high performance, efficiency, and developer productivity. Whatnot’s previous data stack, including AWS-hosted Elasticsearch for retrieval and ranking of features, required time-consuming index updates and builds to handle constant upserts to existing tables and the introduction of new signals. In the current real-time stack, Rockset indexes all ingested data without manual intervention and stores and serves events, features, and embeddings used by Whatnot’s recommendation service, which runs vector search queries with metadata filtering on Rockset. That frees up developer time and ensures users have an engaging experience, whether buying or selling.

With Rockset’s real-time update and indexing capabilities, Whatnot achieved the data and query latency needed to power real-time home feed recommendations.

“Rockset delivered true real-time ingestion and queries, with sub-50 millisecond end-to-end latency…at much lower operational effort and cost,” said Emmanuel Fuentes, head of machine learning and data platforms at Whatnot.

Confluent Cloud and Rockset enable simple, efficient development of real-time AI applications

Confluent and Rockset are helping more and more customers deliver on the potential of real-time AI on streaming data with a joint solution that’s easy to use yet performs well at scale. You can learn more about vector search on real-time data streaming in the webinar and live demo Deliver Better Product Recommendations with Real-Time AI and Vector Search.

If you’re looking for the most efficient end-to-end solution for real-time AI and analytics without any compromises on performance or usability, we hope you’ll start free trials of both Confluent Cloud and Rockset.

About the Authors

Andrew Sellers leads Confluent’s Technology Strategy Group, which supports strategy development, competitive analysis, and thought leadership.

Kevin Leong is Sr. Director of Product Marketing at Rockset, where he works closely with Rockset’s product team and partners to help users realize the value of real-time analytics. He has been around data and analytics for the last decade, holding product management and marketing roles at SAP, VMware, and MarkLogic.
