
This blog is authored by Ben Dias, Director of Data Science and Analytics, and Ioannis Mesionis, Lead Data Scientist at easyJet.

Introduction to easyJet

easyJet flies on more of Europe’s most popular routes than any other airline and carried more than 69 million passengers in 2022, with 9.5 million traveling for business. The airline has over 300 aircraft on nearly 1000 routes to more than 150 airports across 36 countries. Over 300 million Europeans live within one hour’s drive of an easyJet airport.

Like many companies in the airline industry, easyJet currently faces challenges around customer experience and digitalization. In today’s competitive landscape, customers are changing their preferences quickly, and their expectations around customer service have never been higher. Having a proper data and AI strategy can unlock many business opportunities related to digital customer service, personalization and operational process optimization.

Challenges Faced by easyJet

When starting this project, easyJet had already been a Databricks customer for almost a year. At that point, we were fully leveraging Databricks for data engineering and warehousing, had just migrated all of our data science workloads, and had started to migrate our analytics workloads onto Databricks. We are also actively decommissioning our old technology stack as we migrate our workloads over to our new Databricks Lakehouse Platform.

By migrating data engineering workloads to a Lakehouse Architecture on Databricks, we were able to reap benefits in terms of platform rationalization, lower cost, less complexity, and the ability to implement real-time data use cases. However, the fact that a significant part of the estate was still running on our old data hub meant that ideating and productionizing new data science and AI use cases remained complex and time-consuming.

A data lake-based architecture has benefits in terms of the volumes of data that customers are able to ingest, process and store. However, the lack of governance and collaboration capabilities impacts the ability of companies to run data science and AI experiments and iterate quickly.

We have also seen the rise of generative AI applications, which presents a challenge in terms of implementation and deployment when we are talking about data lake estates. Here, experimenting and ideating requires constantly copying and moving data across different silos, without proper governance and lineage. At the deployment stage, customers with a data lake architecture usually find themselves having to either keep adding multiple cloud vendor platforms or develop MLOps, deployment and serving solutions on their own, which is known as the DIY approach.

Both scenarios present different challenges. By adding multiple products from cloud vendors into a company’s architecture, customers more often than not incur high costs, high overheads and an increased need for specialized personnel, resulting in high OPEX costs. When it comes to DIY, there are significant costs from both a CAPEX and an OPEX perspective. You need to first build your own MLOps and Serving capabilities, which can already be quite daunting, and once you have built them, you need to keep these platforms running, not only from a platform evolution perspective but also from operational, infrastructure and security standpoints.

When we bring these challenges to the realm of generative AI and Large Language Models (LLMs), their impact becomes even more pronounced given the hardware requirements. Graphics Processing Units (GPUs) have significantly higher costs compared to commoditized CPU hardware. It is thus paramount to think of an optimized way to include these resources in your data architecture. Failing to do so represents a huge cost risk to companies wanting to reap all the benefits of generative AI and LLMs; having Serverless capabilities greatly mitigates these risks, while also reducing the operational overhead associated with maintaining such specialized infrastructure.

Why Lakehouse AI?

We chose Databricks primarily because the Lakehouse Platform allowed us to separate compute from storage. Databricks’ unified platform also enabled cross-functional easyJet teams to seamlessly collaborate on a single platform, leading to an increase in productivity.

Through our partnership with Databricks, we also have access to the most recent AI innovations (Lakehouse AI) and are able to quickly prototype and experiment with our ideas together with their team of experts. “When it came to our LLM journey, working with Databricks felt like we were one big team and didn’t feel like they were just a vendor and we were a customer,” says Ben Dias, Director of Data Science and Analytics at easyJet.

Deep Dive into the Solution

The aim of this project was to provide a tool for our non-technical users to ask their questions in natural language and get insights from our rich datasets. This insight would be highly valuable in the decision-making process.

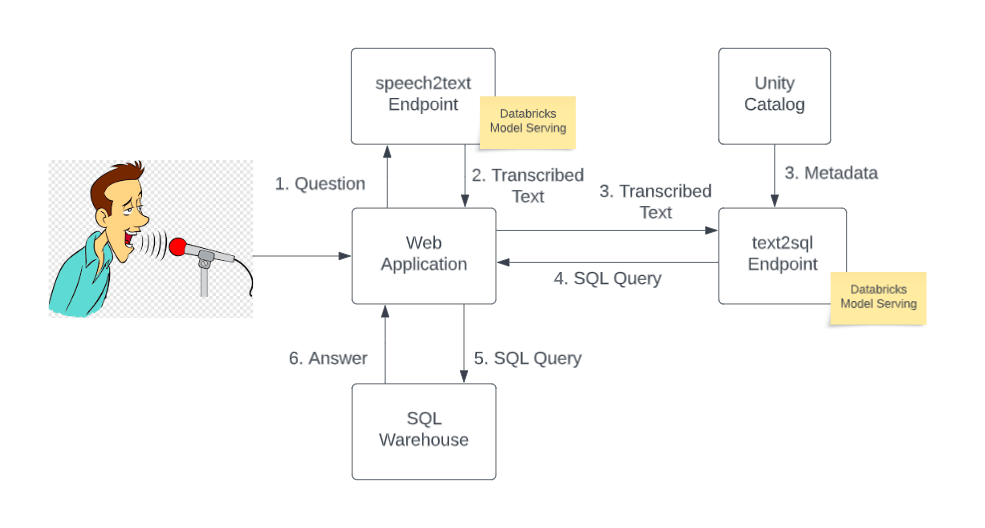

The entry point to the application is a web UI. The web UI allows users to ask questions in natural language using a microphone (e.g., their laptop’s built-in microphone). The speech is then sent to an open source model (Whisper) for transcription. Once transcribed, the question and the metadata of relevant tables in the Unity Catalog are put together to craft a prompt, which is then submitted to an open source LLM for text-to-SQL conversion. The text2sql model returns a syntactically correct SQL query, which is then sent to a SQL warehouse, and the answer is returned and displayed on the web UI.
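The prompt-crafting step described above can be illustrated with a small sketch. This is not easyJet’s actual implementation: the `Column` and `Table` types, the prompt wording, and the function names are all assumptions made for illustration, and in a real setup the table metadata would be read from Unity Catalog rather than constructed by hand.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Column:
    # Hypothetical container for one column's metadata.
    name: str
    dtype: str
    comment: str = ""


@dataclass(frozen=True)
class Table:
    # Hypothetical container for one table's metadata.
    full_name: str  # e.g. catalog.schema.table
    columns: Tuple[Column, ...]


def describe_table(table: Table) -> str:
    """Render one table's metadata as a compact, schema-like summary."""
    lines = []
    for col in table.columns:
        suffix = f"  -- {col.comment}" if col.comment else ""
        lines.append(f"  {col.name} {col.dtype}{suffix}")
    return f"TABLE {table.full_name} (\n" + "\n".join(lines) + "\n)"


def build_prompt(question: str, tables: List[Table]) -> str:
    """Combine the transcribed question with table metadata into one prompt."""
    schema = "\n\n".join(describe_table(t) for t in tables)
    return (
        "Translate the question into a single SQL query.\n"
        "Use only the tables and columns listed below.\n\n"
        f"{schema}\n\n"
        f"Question: {question.strip()}\n"
        "SQL:"
    )
```

Restricting the prompt to the metadata of relevant tables keeps it short and gives the model only the column names it is allowed to use, which is one plausible way to encourage queries that run against the warehouse.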

To solve the text2sql task, we experimented with multiple open source LLMs. Thanks to the LLMOps tools available on Databricks, especially their integration with Hugging Face and the different LLM flavors in MLflow, we found a low entry barrier to start working with LLMs. We could seamlessly swap the underlying models for this task as better open source models were released.
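One way to keep the underlying model swappable is to hide it behind a single callable interface, so that the rest of the application never depends on a specific model. The sketch below is an assumption about how such a seam could look, not a description of easyJet’s code; the registry, the function names, and the clean-up rule are hypothetical. In practice each registered backend might wrap a model loaded through MLflow or a call to a serving endpoint.

```python
from typing import Callable, Dict

# A text2sql backend is any callable that maps a prompt to SQL text.
Text2Sql = Callable[[str], str]

# Hypothetical in-process registry of available backends, keyed by model name.
_BACKENDS: Dict[str, Text2Sql] = {}


def register(name: str, backend: Text2Sql) -> None:
    """Make a backend available under the given model name."""
    _BACKENDS[name] = backend


def generate_sql(prompt: str, model: str) -> str:
    """Run the prompt through the chosen backend and tidy the returned SQL."""
    try:
        backend = _BACKENDS[model]
    except KeyError:
        known = sorted(_BACKENDS)
        raise ValueError(f"unknown model {model!r}; known: {known}") from None
    # Strip surrounding whitespace and a trailing semicolon, if any.
    return backend(prompt).strip().rstrip(";")
```

With this shape, moving to a newer model means registering one more backend and changing the `model` argument, while the web UI and the SQL warehouse call stay untouched.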

Both the transcription and text2sql models are served at a REST API endpoint using Databricks Model Serving with support for Nvidia’s A10G GPU. As one of the first Databricks customers to leverage GPU serving, we were able to serve our models on GPU with a few clicks, going from development to production in a few minutes. Being serverless, Model Serving eliminated the need to manage complicated infrastructure, let our team focus on the business problem, and massively reduced the time to market.

“With Lakehouse AI, we could host open source generative AI models in our own environment, with full control. Additionally, Databricks Model Serving automated deployment and inferencing of these LLMs, removing any need to deal with complicated infrastructure. Our teams could just focus on building the solution; in fact, it took us only a couple of weeks to get to an MVP,” says Ioannis Mesionis, Lead Data Scientist at easyJet.
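Because the models sit behind a REST endpoint, a client only needs to send an authenticated HTTP POST. The sketch below builds such a request with the Python standard library, without sending it. The workspace URL, endpoint name, and the `prompt` field are placeholders, and the payload shape (`dataframe_records`) is an assumption: the exact input format depends on the signature of the deployed model.

```python
import json
import urllib.request


def build_invocation_request(
    workspace_url: str,
    endpoint_name: str,
    token: str,
    prompt: str,
) -> urllib.request.Request:
    """Build (but do not send) a POST request to a model serving endpoint."""
    base = workspace_url.rstrip("/")
    url = f"{base}/serving-endpoints/{endpoint_name}/invocations"
    # Assumed payload: one record with a single hypothetical "prompt" field.
    body = json.dumps({"dataframe_records": [{"prompt": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would perform the call; keeping construction separate from sending makes the request easy to inspect and test without network access.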

Business Outcomes Achieved as a Result of Choosing Databricks

This project is one of the first steps in our GenAI roadmap, and with Databricks we were able to get to an MVP within a couple of weeks. We were able to take an idea and transform it into something tangible that our internal customers can interact with. This application paves the way for easyJet to be a truly data-driven business. Our business users now have easier access to our data. They can interact with data using natural language and can base their decisions on the insight provided by LLMs.

What’s Next for easyJet?

This initiative allowed easyJet to easily experiment with and quantify the benefit of a cutting-edge generative AI use case. The solution was showcased to more than 300 people from easyJet’s IT, Data & Change department, and the excitement helped spark new ideas around innovative Gen AI use cases, such as personal assistants for travel recommendations, chatbots for operational processes and compliance, and resource optimization.

Once presented with the solution, easyJet’s board of executives quickly agreed that there is significant potential in including generative AI in their roadmap. As a result, there is now a specific part of the budget dedicated to exploring and bringing these use cases to life in order to augment the capabilities of both easyJet’s employees and customers, while providing them with a better, more data-driven user experience.
