
There have been quite a few attempts over the last year to use AI to create 3D printable models from a simple text input, with varying results. One of the better-known examples is from LumaAI, and you may recall this project from OpenAI earlier this year.

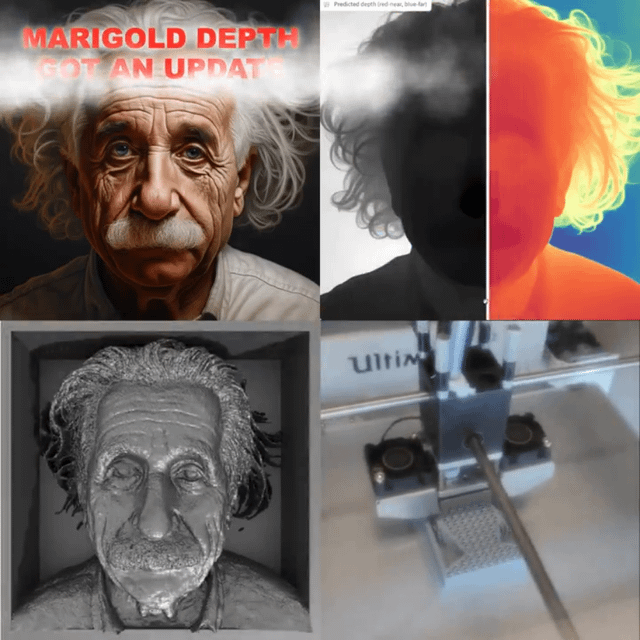

In this article we'll take a look at another method of AI-powered text-to-3D, this time using a program called "Marigold", which, according to its website, "repurposes diffusion-based image generators for monocular depth estimation". Generating AI art is one thing, but giving it actual depth, which is required for 3D printing, is a completely different game altogether. The creators of Marigold seem to have done just that.

What’s Marigold?

Marigold was designed by researchers at ETH Zürich, and its function is to generate depth information from a 2D image. Derived from the Stable Diffusion framework, Marigold is fine-tuned on synthetic data, which still lets it transfer its knowledge effectively to unseen data. This approach has led Marigold to surpass the previously leading method, LeReS, in this field.

The model's functionality hinges on fine-tuning the U-Net component of the Stable Diffusion setup. The process involves encoding both the image and the depth map into a latent space using the original Stable Diffusion VAE, followed by optimizing the diffusion objective with respect to the depth latent code. A notable aspect is the modification of the U-Net's first layer to accept the concatenated latent codes, which is what enables the depth estimation capability.
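As a rough illustration of the first-layer change, the sketch below widens a layer's input so that it accepts an image latent and a depth latent side by side. It treats the layer as a plain weight matrix rather than a real convolution, and the initialization it uses (copying the pretrained weights for the new channels and halving both copies) is an assumption made here for illustration, not something described above. The names `apply_layer` and `widen_input_layer` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]  # weights[out_channel][in_channel]


def apply_layer(weights: Matrix, x: List[float]) -> List[float]:
    """Apply a toy linear layer (a stand-in for a 1x1 convolution)."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def widen_input_layer(weights: Matrix) -> Matrix:
    """Double the input width so the layer accepts [image_latent, depth_latent].

    Assumption: each row is duplicated and halved, so the widened layer gives
    the same output as the original when both halves carry the same latent.
    """
    return [[w / 2 for w in row] + [w / 2 for w in row] for row in weights]


# Toy "pretrained" layer: 2 output channels, 4 latent channels in.
pretrained = [
    [1.0, -2.0, 0.5, 4.0],
    [0.0, 3.0, -1.0, 2.0],
]
widened = widen_input_layer(pretrained)

latent = [0.2, -0.4, 1.0, 0.5]
original_out = apply_layer(pretrained, latent)
widened_out = apply_layer(widened, latent + latent)  # concatenated input
```

Under this assumed initialization, the widened layer starts out behaving like the pretrained one, and fine-tuning is then free to make the two halves of the input diverge.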

During inference, Marigold encodes an input image into a latent code, which is then concatenated with a depth latent. This combination is processed by the modified, fine-tuned U-Net. After several denoising iterations, the depth latent is decoded into an image, and its channels are averaged to derive the final depth estimate.
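The inference steps above can be sketched as a short loop. This is only an outline under stated assumptions: `encode`, `denoise_step`, and `decode` are hypothetical callables standing in for the VAE encoder, the fine-tuned U-Net with its scheduler, and the VAE decoder, and starting the depth latent from Gaussian noise is assumed here as the usual diffusion convention. Latents are flat lists of floats to keep the example free of dependencies.

```python
import random
from typing import Callable, List, Tuple

Latent = List[float]
Pixel = Tuple[float, float, float]


def average_channels(decoded: List[Pixel]) -> List[float]:
    """Collapse a 3-channel decoded image to one depth value per pixel."""
    return [sum(px) / len(px) for px in decoded]


def infer_depth(
    image,
    encode: Callable[[object], Latent],
    denoise_step: Callable[[Latent, int], Latent],
    decode: Callable[[Latent], List[Pixel]],
    num_steps: int = 10,
    seed: int = 0,
) -> List[float]:
    """Run the encode / concatenate / denoise / decode / average sequence."""
    rng = random.Random(seed)
    image_latent = encode(image)
    # Assumption: the depth latent starts as Gaussian noise.
    depth_latent = [rng.gauss(0.0, 1.0) for _ in image_latent]
    for step in reversed(range(num_steps)):
        unet_input = image_latent + depth_latent  # concatenate the two latents
        depth_latent = denoise_step(unet_input, step)
    return average_channels(decode(depth_latent))


# Toy stand-ins so the sketch runs end to end (not the real networks).
def toy_encode(image) -> Latent:
    return list(image)


def toy_denoise_step(unet_input: Latent, step: int) -> Latent:
    half = len(unet_input) // 2
    image_part, depth_part = unet_input[:half], unet_input[half:]
    # Pull the depth latent halfway toward the image latent on each step.
    return [d + 0.5 * (i - d) for i, d in zip(image_part, depth_part)]


def toy_decode(latent: Latent) -> List[Pixel]:
    return [(v, v, v) for v in latent]


depth = infer_depth([0.1, 0.9, 0.5], toy_encode, toy_denoise_step, toy_decode)
```

The toy functions only exist to show the data flow; in the real model each of them is a neural network operating on image-shaped tensors.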

Comparative studies have shown Marigold's superior performance in both indoor and outdoor settings against other state-of-the-art affine-invariant depth estimators. This is particularly notable because Marigold achieves these results without ever having seen real depth samples. For a comprehensive understanding of Marigold's methods and benchmarks, the detailed paper provides in-depth (no pun intended) information.
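"Affine-invariant" means the predicted depth is only defined up to an unknown scale and shift, so it has to be aligned to a reference before the two can be compared. A common way to do this is a least-squares fit of scale and shift followed by an error metric. The sketch below shows that idea on toy numbers. It is not taken from the Marigold paper, and the function names are hypothetical.

```python
from typing import List, Tuple


def fit_scale_shift(pred: List[float], target: List[float]) -> Tuple[float, float]:
    """Least-squares scale and shift so that scale * pred + shift ~ target."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    var = sum((p - mean_p) ** 2 for p in pred)
    if var == 0:
        raise ValueError("prediction is constant; scale is undefined")
    scale = cov / var
    shift = mean_t - scale * mean_p
    return scale, shift


def abs_rel_error(pred: List[float], target: List[float]) -> float:
    """Mean absolute relative error between aligned prediction and target."""
    return sum(abs(p - t) / t for p, t in zip(pred, target)) / len(pred)


# Toy data: relative depth in [0, 1] versus reference depth in metres.
relative = [0.0, 0.5, 1.0]
reference = [2.0, 3.0, 4.0]

scale, shift = fit_scale_shift(relative, reference)
aligned = [scale * p + shift for p in relative]
error = abs_rel_error(aligned, reference)
```

For 3D printing the absolute scale matters less than for benchmarking, since the relief height is chosen by the user anyway.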

Because Marigold works from a single image, you can use AI-generated images as the source. And that's exactly what one Redditor has done, as you can see below.

DallE-to-3D

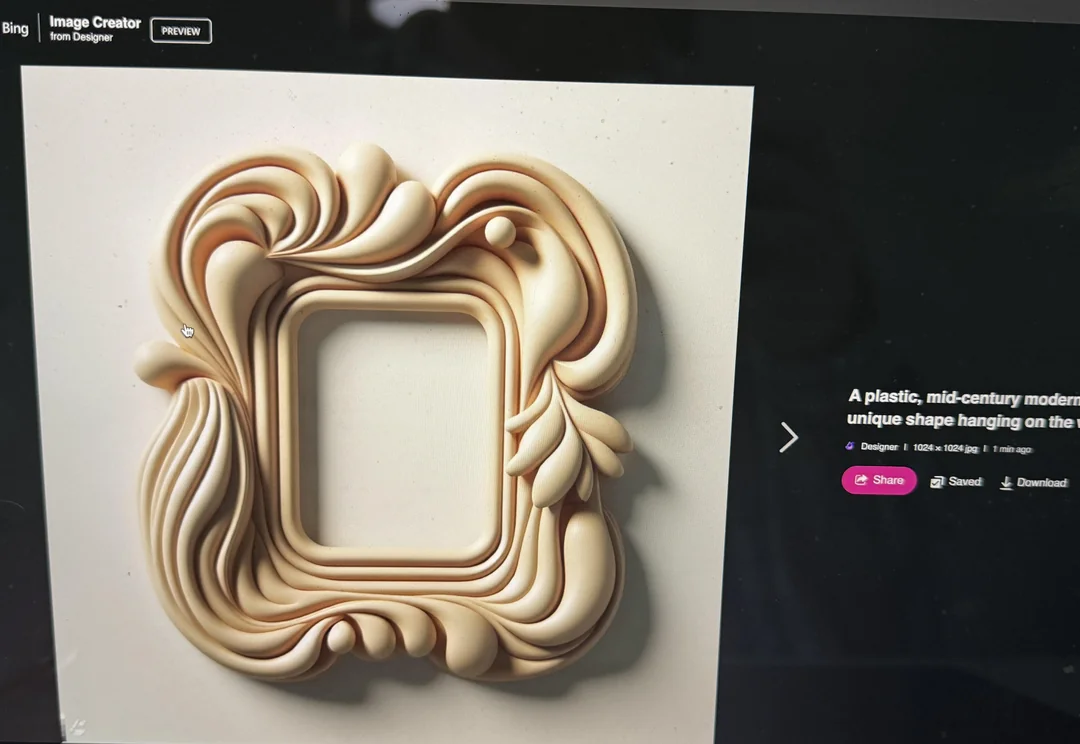

Using the DallE text-to-image AI found on Bing, Redditor "fredandlunchbox" entered a prompt to generate an image of a plastic picture frame with modern features, hanging on a wall.

DallE output the image that you can see below.

The Redditor used Marigold to create a depth map, had the depth map rendered in Cinema4D, and then did some additional manual tidying up in Blender and Fusion360. When the model was complete, he exported it as an STL and printed it. The whole process from DallE text prompt to final printed object took under 24 hours, and you can see the final printed part in the image below.

The Redditor notes that the process isn't automated, but the creators of Marigold have recently announced an update that lets the user produce a watertight STL ready for printing.

How watertight that is exactly remains to be seen, but it sounds like something fun to experiment with over the holiday season.

If you would like to experiment with the depth estimation and 3D printing features of Marigold, you can head over to the web-based GUI on the program's Hugging Face Space at this link.

