[ad_1]

Courtesy: Rahul Unnikrishnan Nair | Intel

The panorama of AI and pure language processing has dramatically shifted with the appearance of Massive Language Fashions (LLMs). This shift is characterised by developments like Low-Rank Adaptation (LoRA) and its extra superior iteration, Quantized LoRA (QLoRA), which have reworked the fine-tuning course of from a compute-intensive process into an environment friendly, scalable process.

Generated with Steady Diffusion XL utilizing the immediate: “A cute laughing llama with large eyelashes, sitting on a seashore with sun shades studying in gibili type”

The Creation of LoRA: A Paradigm Shift in LLM Advantageous-Tuning

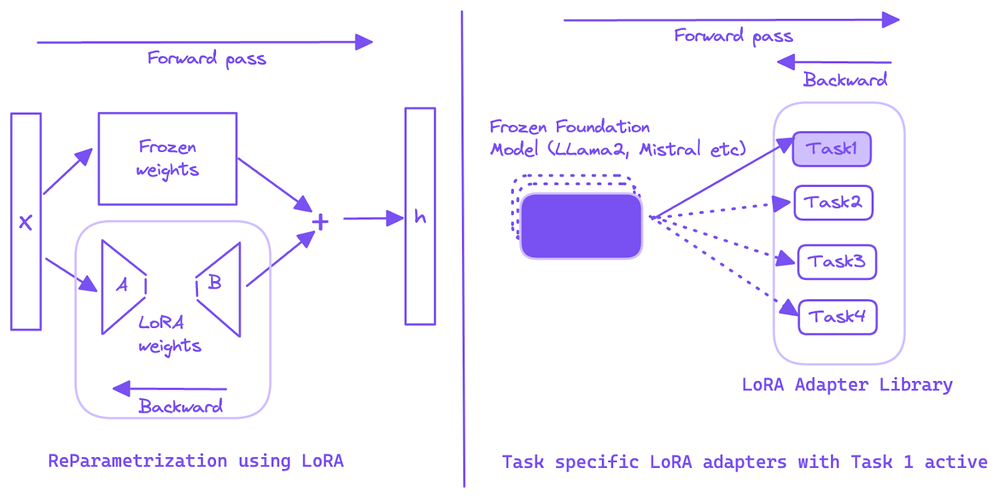

LoRA represents a major development within the fine-tuning of LLMs. By introducing trainable adapter modules between the layers of a giant pre-trained mannequin, LoRA focuses on refining a smaller subset of mannequin parameters. These adapters are low-rank matrices, considerably decreasing the computational burden and preserving the dear pre-trained data embedded inside LLMs. The important thing features of LoRA embrace:

- Low-Rank Matrix Construction: Formed as (r x d), the place ‘r’ is a small rank hyperparameter and ‘d’ is the hidden dimension measurement. This construction ensures fewer trainable parameters.

- Factorization: The adapter matrix is factorized into two smaller matrices, enhancing the mannequin’s perform adaptability with fewer parameters.

- Scalability and Adaptability: LoRA balances the mannequin’s studying capability and generalizability by scaling adapters with a parameter α and incorporating dropout for regularization.

Quantized LoRA (QLoRA): Environment friendly Finetuning on Intel {Hardware}

QLoRA advances LoRA by introducing weight quantization, additional decreasing reminiscence utilization. This strategy allows the fine-tuning of enormous fashions, such because the 70B LLama2, on {hardware} like Intel’s Information Heart GPU Max Collection 1100 with 48 GB VRAM. QLoRA’s primary options embrace:

- Reminiscence Effectivity: By way of weight quantization, QLoRA considerably reduces the mannequin’s reminiscence footprint, essential for dealing with giant LLMs.

- Precision in Coaching: QLoRA maintains excessive accuracy, essential for the effectiveness of fine-tuned fashions.

- On-the-Fly Dequantization: It includes short-term dequantization of quantized weights for computations, focusing solely on adapter gradients throughout coaching.

Advantageous-Tuning Course of with QLoRA on Intel {Hardware}

The fine-tuning course of begins with establishing the setting and putting in obligatory packages, together with bigdl-llm for mannequin loading, peft for LoRA adapters, Intel Extension for PyTorch for coaching utilizing Intel dGPUs, transformers for finetuning and datasets for loading the dataset. We’ll stroll via the high-level technique of fine-tuning a big language mannequin (LLM) to enhance its capabilities. For instance, I’m taking producing SQL queries from pure language enter, however the focus is on common QLoRA finetuning right here. For detailed explanations you possibly can take a look at the total pocket book that takes you from establishing the required python packages, loading the mannequin, finetuning and inferencing the finetuned LLM to generate SQL from textual content on Intel Developer Cloud and likewise right here.

Mannequin Loading and Configuration for Advantageous-Tuning

The foundational mannequin is loaded in a 4-bit format utilizing bigdl-llm, considerably decreasing reminiscence utilization. This step is essential for fine-tuning giant fashions just like the 70B LLama2 on Intel {hardware}.

from bigdl.llm.transformers import AutoModelForCausalLM

# Loading the mannequin in a 4-bit format for environment friendly reminiscence utilization

mannequin = AutoModelForCausalLM.from_pretrained(

“model_id”, # Change along with your mannequin ID

load_in_low_bit=”nf4″,

optimize_model=False,

torch_dtype=torch.float16,

modules_to_not_convert=[“lm_head”],

)

Studying Charge and Stability in Coaching

Choosing an optimum studying charge is essential in QLoRA fine-tuning to stability coaching stability and convergence velocity. This determination is significant for efficient fine-tuning outcomes as the next studying charge can result in instabilities and the coaching loss to abnormally drop to zero after a couple of steps.

from transformers import TrainingArguments

# Configuration for coaching

training_args = TrainingArguments(

learning_rate=2e-5, # Optimum start line; modify as wanted

per_device_train_batch_size=4,

max_steps=200,

# Extra parameters…

)

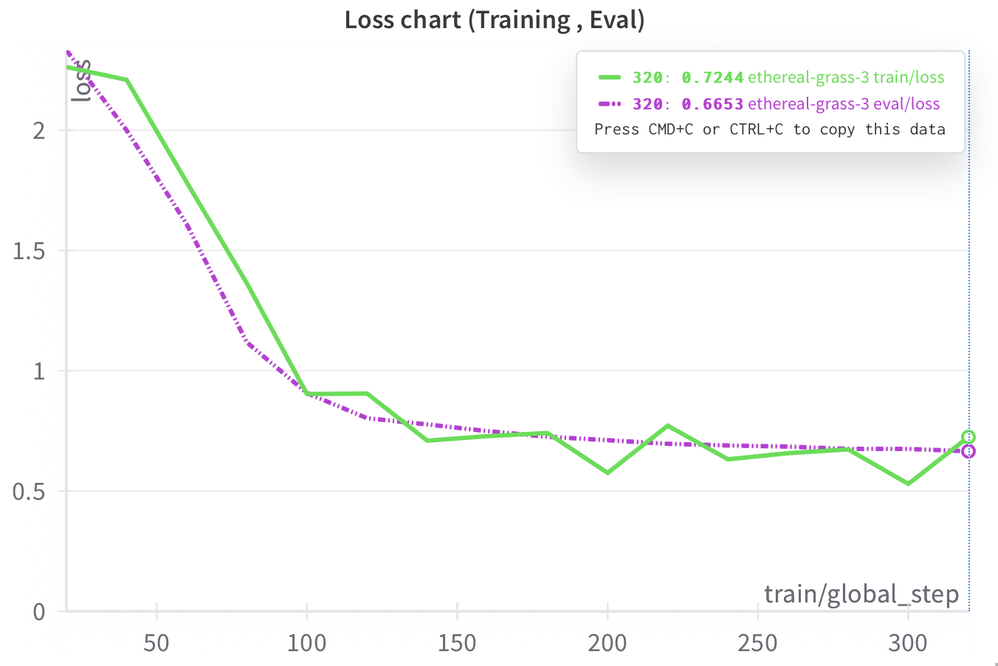

Through the fine-tuning course of, there’s a notable speedy lower within the loss after only a few steps, which then steadily ranges off, reaching a price close to 0.6 at roughly 300 steps as seen within the graph beneath:

Textual content-to-SQL Conversion: Immediate Engineering

With the fine-tuned mannequin, we are able to convert pure language queries into SQL instructions, a significant functionality in knowledge analytics and enterprise intelligence. To finetune the mannequin, we should rigorously convert the information into structured immediate like beneath as an instruction dataset with Enter, Context and Response:

# Perform to generate structured prompts for Textual content-to-SQL duties

def generate_prompt_sql(input_question, context, output=””):

return f”””You’re a highly effective text-to-SQL mannequin. Your job is to reply questions on a database. You might be given a query and context concerning a number of tables.

It’s essential to output the SQL question that solutions the query.

### Enter:

{input_question}

### Context:

{context}

### Response:

{output}”””

Numerous Mannequin Choices

The pocket book helps an array of fashions, every providing distinctive capabilities for various fine-tuning targets:

NousResearch/Nous-Hermes-Llama-2-7b

NousResearch/Llama-2-7b-chat-hf

NousResearch/Llama-2-13b-hf

NousResearch/CodeLlama-7b-hf

Phind/Phind-CodeLlama-34B-v2

openlm-research/open_llama_3b_v2

openlm-research/open_llama_13b

HuggingFaceH4/zephyr-7b-beta

Enhanced Inference with QLoRA: A Comparative Method

The true check of any fine-tuning course of lies in its inference capabilities. Within the case of the implementation, the inference stage not solely demonstrates the mannequin’s proficiency in task-specific purposes but additionally permits for a comparative evaluation between the bottom and the fine-tuned fashions. This comparability sheds gentle on the effectiveness of the LoRA adapters in enhancing the mannequin’s efficiency for particular duties.

Mannequin Loading for Inference:

For inference, the mannequin is loaded in a low-bit format, usually 4-bit, utilizing bigdl-llm library. This strategy drastically reduces the reminiscence footprint, making it appropriate to run a number of LLMs with excessive parameter rely on a single resource-optimized {hardware} like Intel’s Information Heart GPUs 1100. The next code snippet illustrates the mannequin loading course of for inference:

from bigdl.llm.transformers import AutoModelForCausalLM

# Loading the mannequin for inference

model_for_inference = AutoModelForCausalLM.from_pretrained(

“finetuned_model_path”, # Path to the fine-tuned mannequin

load_in_4bit=True, # 4 bit loading

optimize_model=True,

use_cache=True,

torch_dtype=torch.float16,

modules_to_not_convert=[“lm_head”],

)

Working Inference: Evaluating Base vs Advantageous-Tuned Mannequin

As soon as the mannequin is loaded, we are able to carry out inference to generate SQL queries from pure language inputs. This course of will be performed on each the bottom mannequin and the fine-tuned mannequin, permitting customers to straight evaluate the outcomes and assess the enhancements caused by fine-tuning with QLoRA:

# Producing a SQL question from a textual content immediate

text_prompt = generate_sql_prompt(…)

# Base Mannequin Inference

base_model_sql = base_model.generate(text_prompt)

print(“Base Mannequin SQL:”, base_model_sql)

# Advantageous-Tuned Mannequin Inference

finetuned_model_sql = finetuned_model.generate(text_prompt)

print(“Advantageous-Tuned Mannequin SQL:”, finetuned_model_sql)

Following a 15-minute coaching session itself, the finetuned mannequin demonstrates enhanced proficiency in producing SQL queries that extra precisely mirror the given questions, in comparison with the bottom mannequin. With further coaching steps, we are able to anticipate additional enhancements within the mannequin’s response accuracy:

Finetuned mannequin SQL technology for a given query and context:

Base mannequin SQL technology for a given query and context:

LoRA Adapters: A Library of Process-Particular Enhancements

One of the compelling features of LoRA is its means to behave as a library of task-specific enhancements. These adapters will be fine-tuned for distinct duties after which saved. Relying on the requirement, a particular adapter will be loaded and used with the bottom mannequin, successfully switching the mannequin’s capabilities to go well with totally different duties. This adaptability makes LoRA a extremely versatile device within the realm of LLM fine-tuning.

Checkout the pocket book on Intel Developer Cloud

We invite AI practitioners and builders to discover the total pocket book on the Intel Developer Cloud (IDC). IDC is the right setting to experiment with and discover the capabilities of fine-tuning LLMs utilizing QLoRA on Intel {hardware}. When you login to Intel Developer Cloud, go to the “Coaching Catalog” and beneath “Gen AI Necessities” within the catalog, yow will discover the LLM finetuning pocket book.

Conclusion: QLoRA’s Affect and Future Prospects

QLoRA, particularly when applied on Intel’s superior {hardware}, represents a major leap in LLM fine-tuning. It opens up new avenues for leveraging large fashions in varied purposes, making fine-tuning extra accessible and environment friendly.

[ad_2]