While autonomous driving has long relied on machine learning to plan routes and detect objects, some companies and researchers are now betting that generative AI (models that take in data about their surroundings and generate predictions) will help bring autonomy to the next level. Wayve, a Waabi competitor, released a comparable model last year that is trained on the video its vehicles collect.

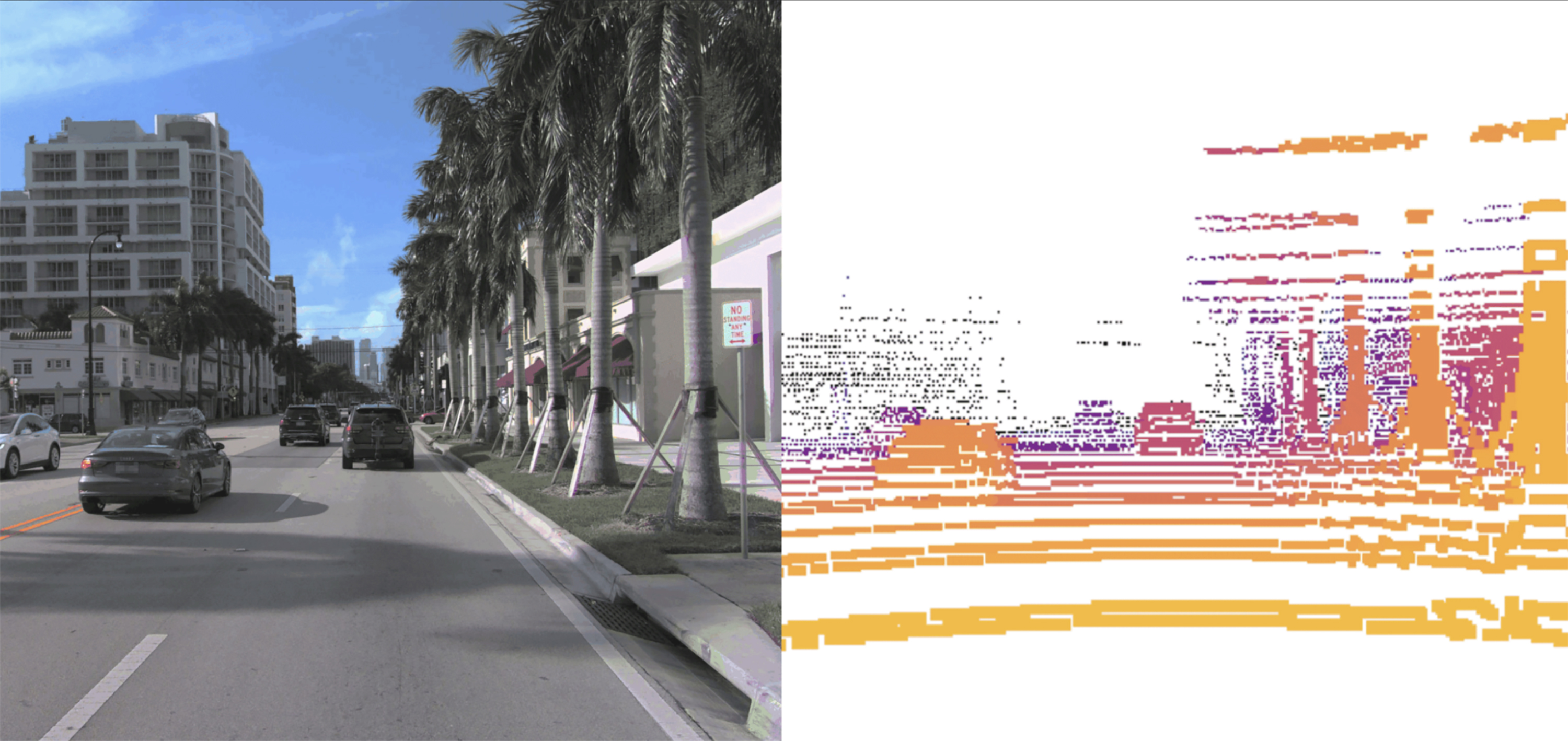

Waabi’s model works in a similar way to image or video generators like OpenAI’s DALL-E and Sora. It takes point clouds of lidar data, which form a 3D map of the car’s surroundings, and breaks them into chunks, much as image generators break pictures into pixels. Based on its training data, Copilot4D then predicts how every point in the lidar data will move. Doing this repeatedly allows it to generate predictions 5-10 seconds into the future.
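The two steps described above (chunking a point cloud, then repeatedly predicting where the points go) can be illustrated with a minimal Python sketch. This is not Waabi’s implementation. The chunking is shown as plain voxelization into a 3D grid, which is an assumption, since the article does not say how Copilot4D divides lidar data. `predict_next` is a hypothetical constant-velocity placeholder standing in for the learned generative model. What the sketch does show is the feedback loop: each predicted frame becomes the input for the next step, so a 0.5-second step repeated ten times reaches a 5-second horizon.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres
Voxel = Tuple[int, int, int]        # integer grid cell index


def voxelize(points: List[Point], voxel_size: float) -> Dict[Voxel, List[Point]]:
    """Group lidar points into cubic chunks (voxels) with side length voxel_size.

    Assumed chunking scheme for illustration only; floor division keeps
    negative coordinates in the correct cell.
    """
    grid: Dict[Voxel, List[Point]] = defaultdict(list)
    for x, y, z in points:
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        grid[key].append((x, y, z))
    return dict(grid)


def predict_next(points: List[Point], velocity: Point, dt: float) -> List[Point]:
    """Hypothetical stand-in for a learned model: shift every point by velocity * dt."""
    vx, vy, vz = velocity
    return [(x + vx * dt, y + vy * dt, z + vz * dt) for x, y, z in points]


def rollout(points: List[Point], velocity: Point, dt: float, horizon_s: float) -> List[List[Point]]:
    """Feed each predicted frame back in as the next input until horizon_s is reached."""
    frames = [points]
    steps = round(horizon_s / dt)
    for _ in range(steps):
        frames.append(predict_next(frames[-1], velocity, dt))
    return frames


if __name__ == "__main__":
    scan = [(1.0, 2.0, 0.5), (1.2, 2.1, 0.4), (10.0, -3.0, 1.0)]
    frames = rollout(scan, velocity=(2.0, 0.0, 0.0), dt=0.5, horizon_s=5.0)
    print(len(frames))  # 11: the input scan plus 10 predicted frames
    print(len(voxelize(frames[-1], voxel_size=1.0)))  # 2: the first two points share a cell
```

A real generative model would replace the fixed velocity with a learned distribution over how each chunk changes, but the autoregressive structure of the rollout stays the same.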

Waabi is one of a handful of autonomous driving companies, including competitors Wayve and Ghost, that describe their approach as “AI-first.” To Urtasun, that means designing a system that learns from data, rather than one that must be taught reactions to specific situations. The cohort is betting their methods might require fewer hours of road-testing self-driving cars, a charged topic following an October 2023 accident in which a Cruise robotaxi dragged a pedestrian in San Francisco.

Waabi differs from its competitors in building a generative model for lidar rather than cameras.

“If you want to be a Level 4 player, lidar is a must,” says Urtasun, referring to the automation level at which the car does not require a human’s attention to drive safely. Cameras do a good job of showing what the car is seeing, but they’re not as adept at measuring distances or understanding the geometry of the car’s surroundings, she says.

Although Waabi’s model can generate videos showing what a car will see through its lidar sensors, those videos will not be used for training in the driving simulator the company uses to build and test its driving model. That’s to ensure any hallucinations arising from Copilot4D don’t get taught in the simulator.

The underlying technology is not new, says Bernard Adam Lange, a PhD student at Stanford who has built and researched similar models, but it’s the first time he’s seen a generative lidar model leave the confines of a research lab and be scaled up for commercial use. A model like this would generally help make the “brain” of any autonomous vehicle able to reason more quickly and accurately, he says.

“It’s the scale that’s transformative,” he says. “The hope is that these models can be utilized in downstream tasks” like detecting objects and predicting where people or things might move next.