
Organizations across industries are leveraging Microsoft Azure OpenAI Service and Copilot services and capabilities to drive growth, increase productivity, and create value-added experiences. From advancing medical breakthroughs to streamlining manufacturing operations, our customers trust that their data is protected by robust privacy protections and data governance practices. As our customers continue to expand their use of our AI solutions, they can be confident that their valuable data is safeguarded by industry-leading data governance and privacy practices in the most trusted cloud on the market today.

At Microsoft, we have a long-standing practice of protecting our customers' information. Our approach to Responsible AI is built on a foundation of privacy, and we remain dedicated to upholding core values of privacy, security, and safety in all our generative AI products and solutions.

Microsoft's existing privacy commitments extend to our AI commercial products


Commercial and public sector customers can rest assured that the privacy commitments they have long relied on for our enterprise cloud products also apply to our enterprise generative AI solutions, including Azure OpenAI Service and our Copilots.

- You are in control of your organization's data. Your data is not used in undisclosed ways or without your permission. You may choose to customize your use of Azure OpenAI Service by opting to use your data to fine-tune models for your organization's own use. If you do use your organization's data to fine-tune, any fine-tuned AI solutions created with your data will be available only to you.

- Your access control and enterprise policies are maintained. To protect privacy within your organization when using enterprise products with generative AI capabilities, your existing permissions and access controls will continue to apply, ensuring that your organization's data is displayed only to those users to whom you have given appropriate permissions.

- Your organization's data is not shared. Microsoft does not share your data with third parties without your permission. Your data, including the data generated through your organization's use of Azure OpenAI Service or Copilots (such as prompts and responses), is kept private and is not disclosed to third parties.

- Your organization's data privacy and security are protected by design. Security and privacy are incorporated through all phases of the design and implementation of Azure OpenAI Service and Copilots. As with all our products, we provide a strong privacy and security baseline and make available additional protections that you can choose to enable. As external threats evolve, we will continue to advance our solutions and offerings to ensure world-class privacy and security in Azure OpenAI Service and Copilots, and we will continue to be transparent about our approach.

- Your organization's data is not used to train foundation models. Microsoft's generative AI solutions, including Azure OpenAI Service and Copilot services and capabilities, do not use your organization's data to train foundation models without your permission. Your data is not available to OpenAI or used to train OpenAI models.

- Our products and solutions continue to comply with global data protection regulations. The Microsoft AI products and solutions you deploy continue to be compliant with today's global data protection and privacy regulations. As we continue to navigate the future of AI together, including the implementation of the EU AI Act and other laws globally, organizations can be certain that Microsoft will be transparent about our privacy, safety, and security practices. We will comply with laws globally that govern AI, and back up our promises with clear contractual commitments.
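To make the access-control commitment concrete, here is a minimal sketch of permission-trimmed retrieval, assuming a simplified model in which each document carries the set of users allowed to read it and content is surfaced to a user only if that user already has access. The `Document` type, its `allowed_users` field, and `retrieve_for_user` are hypothetical illustrations; they are not Microsoft APIs and do not describe how Copilot or Azure OpenAI Service implement the check internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_users: frozenset[str]  # hypothetical: users granted read access


def retrieve_for_user(user: str, candidates: list[Document]) -> list[Document]:
    """Keep only the documents this user is already permitted to read,
    so a generative feature never sees content outside existing permissions."""
    return [d for d in candidates if user in d.allowed_users]


docs = [
    Document("plan", "Q3 acquisition plan", frozenset({"alice"})),
    Document("memo", "All-hands memo", frozenset({"alice", "bob"})),
]
print([d.doc_id for d in retrieve_for_user("bob", docs)])  # ['memo']
```

The design choice being illustrated is that filtering happens before any content reaches the model, so existing permissions, rather than the model, decide what a given user can see.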

You can find more details about how Microsoft's privacy commitments apply to Azure OpenAI and Copilots in Microsoft's published documentation.

We provide programs, transparency documentation, and tools to support your AI deployment

To support our customers and empower their use of AI, Microsoft offers a range of solutions, tooling, and resources to assist in their AI deployment, from comprehensive transparency documentation to a suite of tools for data governance, risk, and compliance. Dedicated programs such as our industry-leading AI Assurance Program and Customer Copyright Commitment further broaden the support we offer commercial customers in addressing their needs.

Microsoft's AI Assurance Program helps customers ensure that the AI applications they deploy on our platforms meet the legal and regulatory requirements for responsible AI. The program includes support for regulatory engagement and advocacy, risk framework implementation, and the creation of a customer council.

For decades we have defended our customers against intellectual property claims relating to our products. Building on our previous AI customer commitments, Microsoft announced our Customer Copyright Commitment, which extends our intellectual property indemnity support to both our commercial Copilot services and our Azure OpenAI Service. Now, if a third party sues a commercial customer for copyright infringement for using Microsoft's Copilots or Azure OpenAI Service, or for the output they generate, we will defend the customer and pay the amount of any adverse judgments or settlements that result from the lawsuit, as long as the customer has used the guardrails and content filters we have built into our products.

Our comprehensive transparency documentation about Azure OpenAI Service and Copilot, together with the customer tools we provide, helps organizations understand how our AI products work and offers choices our customers can use to influence system performance and behavior.

Azure's enterprise-grade protections provide a strong foundation upon which customers can build their data privacy, security, and compliance strategies to confidently scale AI while managing risk and ensuring compliance. With a range of solutions in the Microsoft Purview family of products, organizations can further discover, protect, and govern their data when using Copilot for Microsoft 365 within their organizations.

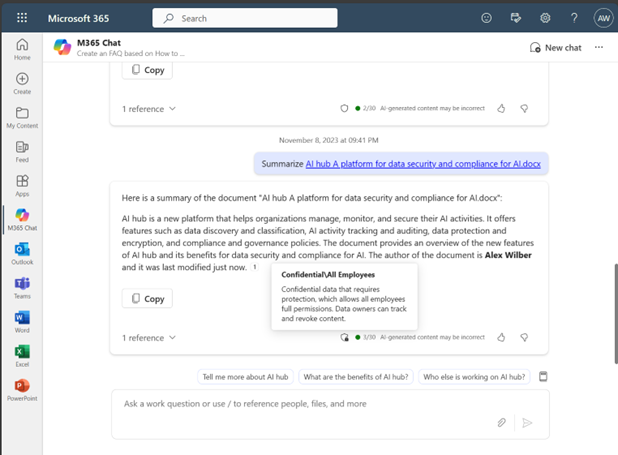

With Microsoft Purview, customers can discover risks associated with data and users, such as which prompts include sensitive data. They can protect that sensitive data with sensitivity labels and classifications, which means Copilot will only summarize content for users when they have the right permissions to that content. When sensitive data is included in a Copilot prompt, the Copilot-generated output automatically inherits the label from the referenced file. Similarly, if a user asks Copilot to create new content based on a labeled document, the Copilot-generated output automatically inherits the sensitivity label along with all its protections, such as data loss prevention policies.

Copilot dialog inherits sensitivity label
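The label-inheritance behavior described above can be sketched in a few lines, assuming each label has a numeric priority and that generated output takes the highest-priority label among the files it references, carrying that label's protections with it. The priority rule, the `SensitivityLabel` and `SourceFile` types, the `inherited_label` function, and the example protection string are hypothetical simplifications for illustration, not the Microsoft Purview API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SensitivityLabel:
    name: str
    priority: int  # hypothetical: a higher number means a more restrictive label
    protections: tuple[str, ...] = ()  # hypothetical: e.g. DLP rules that travel with the label


@dataclass(frozen=True)
class SourceFile:
    path: str
    label: Optional[SensitivityLabel]  # None means the file is unlabeled


def inherited_label(sources: list[SourceFile]) -> Optional[SensitivityLabel]:
    """Return the label generated output should carry: the highest-priority
    label among the referenced files, or None if none of them is labeled."""
    labels = [s.label for s in sources if s.label is not None]
    return max(labels, key=lambda lbl: lbl.priority, default=None)


general = SensitivityLabel("General", priority=0)
confidential = SensitivityLabel(
    "Confidential", priority=2, protections=("block external sharing",)
)
refs = [
    SourceFile("notes.docx", general),
    SourceFile("deal.xlsx", confidential),
    SourceFile("draft.txt", None),
]
label = inherited_label(refs)
print(label.name, label.protections)  # Confidential ('block external sharing',)
```

Because the whole label object is inherited, any protections attached to it follow the generated content automatically rather than having to be reapplied by hand.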

Finally, our customers can govern their Copilot usage to comply with regulatory and code of conduct policies through audit logging, eDiscovery, data lifecycle management, and machine-learning-based detection of policy violations.

As we continue to innovate and provide new kinds of AI solutions, Microsoft will continue to offer industry-leading tools, transparency resources, and support for our customers in their AI journey, and will remain steadfast in protecting our customers' data.
