
The Federal Bureau of Investigation (FBI) is warning of a rising trend of malicious actors creating deepfake content to carry out sextortion attacks.

Sextortion is a form of online blackmail in which malicious actors threaten to publicly leak explicit images and videos of their targets that they stole (through hacking) or acquired (through coercion), typically demanding money in exchange for withholding the material.

In many cases of sextortion, the compromising content is not real, with the threat actors only pretending to have access to it to scare victims into paying an extortion demand.

The FBI warns that sextortionists are now scraping publicly available images of their targets, such as innocuous pictures and videos posted on social media platforms. These images are then fed into deepfake content creation tools that turn them into AI-generated sexually explicit content.

Although the produced images or videos aren’t genuine, they look very real, so they can serve the threat actor’s blackmail purpose: sending that material to the target’s family, coworkers, and others could still cause victims great personal and reputational harm.

“As of April 2023, the FBI has observed an uptick in sextortion victims reporting the use of fake images or videos created from content posted on their social media sites or web postings, provided to the malicious actor upon request, or captured during video chats,” reads the alert published on the FBI’s IC3 portal.

“Based on recent victim reporting, the malicious actors typically demanded: 1. Payment (e.g., money, gift cards) with threats to share the images or videos with family members or social media friends if funds were not received; or 2. The victim sends real sexually-themed images or videos.”

The FBI says that creators of explicit content sometimes skip the extortion step and post the created videos straight to pornographic websites, exposing the victims to a large audience without their knowledge or consent.

In some cases, sextortionists use these now-public uploads to increase the pressure on the victim, demanding payment to remove the posted images or videos from the sites.

The FBI reports that this media manipulation activity has, unfortunately, impacted minors too.

How to protect yourself

The rate at which capable AI-enabled content creation tools are becoming available to the broader public creates a hostile environment for all internet users, particularly those in sensitive categories.

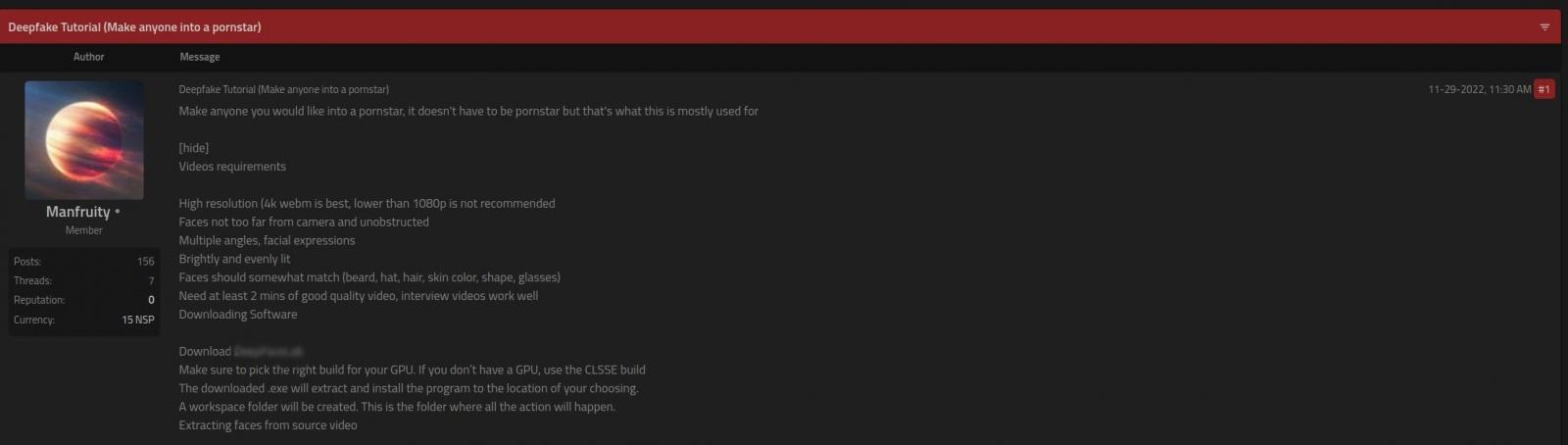

Several content creation tool projects are available for free on GitHub that can create realistic videos from just a single image of the target’s face, requiring no additional training or dataset.

Many of these tools feature built-in protections to prevent misuse, but those sold on underground forums and dark web markets do not.

Source: Kaspersky

The FBI recommends that parents monitor their children’s online activity and talk to them about the risks associated with sharing personal media online.

Furthermore, parents are advised to conduct online searches to determine how much exposure their children have online and to take action as needed to have content taken down.

Adults posting images or videos online should restrict viewing access to a small private circle of friends to reduce exposure. At the same time, children’s faces should always be blurred or masked.

Finally, if you discover deepfake content depicting you on pornographic sites, report it to the authorities and contact the hosting platform to request the removal of the offending media.

The UK has recently introduced a law, in the form of an amendment to the Online Safety Bill, that classifies the non-consensual sharing of deepfakes as a crime.
