
AWS yesterday unveiled new EC2 instances geared toward tackling some of the fastest-growing workloads, including AI training and big data analytics. During his re:Invent keynote, CEO Adam Selipsky also welcomed Nvidia founder Jensen Huang onto the stage to discuss the latest in GPU computing, including the forthcoming 65 exaflop “ultra-cluster.”

Selipsky unveiled Graviton4, the fourth generation of the efficient 64-bit ARM processor that AWS first launched in 2018 for general-purpose workloads, such as database serving and running Java applications.

According to AWS, Graviton4 offers 2 MB of L2 cache per core, for a total of 192 MB, and 12 DDR5-5600 memory channels. All told, the new chip offers 50% more cores and 75% more memory bandwidth than Graviton3, driving 40% better price-performance for database workloads and a 45% improvement for Java, AWS says. You can read more about the Graviton4 chip on this AWS blog.
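The core and bandwidth gains can be sanity-checked from the quoted specs. The sketch below is a back-of-the-envelope calculation, not an AWS benchmark: the Graviton4 numbers come from the figures above, while the Graviton3 baseline (64 cores, eight DDR5-4800 channels) and the 8-byte channel width are assumptions that do not appear in this article.

```python
# Figures quoted in the article for Graviton4.
G4_L2_PER_CORE_MB = 2
G4_L2_TOTAL_MB = 192
G4_CHANNELS = 12
G4_MT_PER_S = 5600  # DDR5-5600

# ASSUMPTIONS (not stated in the article): Graviton3 baseline.
G3_CORES = 64
G3_CHANNELS = 8
G3_MT_PER_S = 4800  # DDR5-4800

# ASSUMPTION: each memory channel moves 8 bytes (64 bits) per transfer.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gb_s(channels: int, mt_per_s: int) -> float:
    """Theoretical peak memory bandwidth in GB/s."""
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000


# Core count implied by total L2 divided by L2 per core.
g4_cores = G4_L2_TOTAL_MB // G4_L2_PER_CORE_MB
core_gain = g4_cores / G3_CORES - 1

g4_bw = peak_bandwidth_gb_s(G4_CHANNELS, G4_MT_PER_S)
g3_bw = peak_bandwidth_gb_s(G3_CHANNELS, G3_MT_PER_S)
bw_gain = g4_bw / g3_bw - 1

print(f"Graviton4 cores implied by L2 figures: {g4_cores}")
print(f"Core gain over assumed Graviton3: {core_gain:.0%}")
print(f"Peak bandwidth: {g4_bw:.1f} GB/s vs {g3_bw:.1f} GB/s ({bw_gain:.0%} more)")
```

Under those assumptions, the L2 figures imply 96 cores, and the derived gains line up with the 50% and 75% that AWS cites.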

“We were the first to develop and offer our own server processors,” Selipsky said. “We’re now on our fourth generation in just five years. Other cloud providers haven’t even delivered on their first server processors.”

AWS also launched R8g, the first EC2 (Elastic Compute Cloud) instances based on Graviton4, adding to the 150-plus Graviton-based instances already in the barn for the cloud giant.

“R8g are part of our memory-optimized instance family, designed to deliver fast performance for workloads that process large datasets in memory, like database or real-time big data analytics,” Selipsky said. “R8g instances provide the best price-performance and energy efficiency for memory-intensive workloads, and there are many, many more Graviton instances coming.”

The launch of ChatGPT 364 days ago kicked off a gold rush mentality to train and deploy large language models (LLMs) in support of generative AI applications. That’s pure gold for cloud providers like AWS, which are more than happy to supply the massive amounts of compute and storage required.

AWS also has a chip for that, dubbed Trainium. And yesterday at re:Invent, AWS unveiled the second generation of its Trainium offering. When the Trainium2-based EC2 instances come online in 2024, they will deliver more bang for GenAI developer bucks.

“Trainium2 is designed to deliver four times faster performance compared to first generation chips, and makes it ideal for training foundation models with hundreds of billions or even trillions of parameters,” Selipsky said. “Trainium2 is going to power the next generation of the EC2 ultra-cluster that will deliver up to 65 exaflops of aggregate compute.”

Speaking of ultra-clusters, AWS continues to work with Nvidia to bring its latest GPUs into the AWS cloud. During Selipsky’s conversation on stage with Nvidia CEO Huang, re:Invent attendees got a teaser about the ultra-cluster coming down the pike.

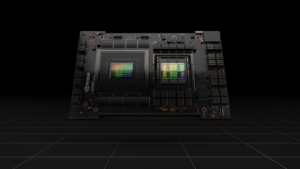

All the attention was on the Grace Hopper superchip, or the GH200, which pairs a Grace CPU with a Hopper GPU over the NVLink chip-to-chip interconnect. Nvidia is also working on an NVLink switch that allows up to 32 Grace Hopper superchips to be connected together. When paired with AWS Nitro and Elastic Fabric Adapter (EFA) networking technology, it enables the aforementioned ultra-cluster.

“With AWS Nitro, that becomes basically one giant virtual GPU instance,” Huang said. “You’ve got to imagine, you’ve got 32 H200s, incredible horsepower, in one virtual instance because of AWS Nitro. Then we connect with AWS EFA, your incredibly fast networking. All of these units now can lead into an ultra-cluster, an AWS ultra-cluster. I can’t wait until all this comes together.”

“How customers are going to use this stuff, I can only imagine,” Selipsky responded. “I know the GH200s are really going to supercharge what customers are doing. It’s going to be available, of course. EC2 instances are coming soon.”

The coming H200 supercluster will sport 16,000 GPUs and offer 65 exaflops of computing power, or “one giant AI supercomputer,” Huang said.

“This is utterly incredible. We’re going to be able to reduce the training time of the largest language models, the next generation MoE, these extremely large mixture of experts models,” he continued. “I can’t wait for us to stand this up. Our AI researchers are champing at the bit.”
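For a sense of scale, the supercluster figures Huang quoted can be turned into a rough per-GPU number. This is only a sketch using the article's round figures (65 exaflops, 16,000 GPUs, 32 superchips per NVLink group). The article does not state the numeric precision behind the exaflop figure, and the sketch assumes every GPU sits in a full 32-chip group, which the article does not confirm.

```python
# Round figures quoted in the article.
TOTAL_EXAFLOPS = 65
GPU_COUNT = 16_000
GPUS_PER_NVLINK_GROUP = 32


def per_gpu_petaflops(total_exaflops: float, gpu_count: int) -> float:
    """Average peak compute per GPU, in petaflops (1 exaflop = 1,000 petaflops)."""
    return total_exaflops * 1000 / gpu_count


per_gpu = per_gpu_petaflops(TOTAL_EXAFLOPS, GPU_COUNT)

# ASSUMPTION: all GPUs are arranged in complete 32-chip NVLink groups.
nvlink_groups = GPU_COUNT // GPUS_PER_NVLINK_GROUP

print(f"About {per_gpu:.2f} petaflops per GPU")
print(f"{nvlink_groups} NVLink groups of {GPUS_PER_NVLINK_GROUP} GPUs")
```

A figure of roughly four petaflops per GPU suggests the 65 exaflop total refers to low-precision AI arithmetic, not double-precision compute, though the article does not say so.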

