
Introduction

Before the era of large language models, extracting data from invoices was a tedious process. One had to collect data, build a document search machine learning model, fine-tune the model, and so on. The arrival of Generative AI took us all by storm, and many tasks have been simplified by LLMs. Large language models have removed much of the model-building work of machine learning: in most scenarios, you just need to be good at prompt engineering and the job is done. In this article, we build an invoice extraction bot with the help of a large language model and LangChain. A detailed treatment of LangChain and LLMs is out of scope, but below is a short description of LangChain and its components.

Learning Objectives

- Learn how to extract information from a document

- Learn how to structure your backend code using LangChain and an LLM

- Learn how to give the right prompts and instructions to the LLM

- Gain a working knowledge of the Streamlit framework for front-end work

This article was published as a part of the Data Science Blogathon.

What is a Large Language Model?

Large language models (LLMs) are a type of artificial intelligence (AI) algorithm that uses deep learning techniques to process and understand natural language. LLMs are trained on enormous volumes of text to learn linguistic patterns and relationships between entities. As a result, they can recognize, translate, predict, or generate text and other content. LLMs can be trained on up to petabytes of data, and the models themselves can occupy terabytes of storage. For perspective, one gigabyte of plain text holds roughly 178 million words.

For businesses that want to offer customer support through a chatbot or virtual assistant, LLMs can be very useful. They can give individualized responses without a human present.

What is LangChain?

LangChain is an open-source framework for developing applications powered by large language models (LLMs). It provides a standard interface for chains, many integrations with other tools, and end-to-end chains for common applications. This lets you build interactive, data-aware apps that use the latest advances in natural language processing.

Core Components of LangChain

Many of LangChain's components can be "chained" together to build complex LLM-based applications. These components include:

- Prompt Templates

- LLMs

- Agents

- Memory

Building an Invoice Extraction Bot using LangChain and LLM

Before the era of Generative AI, extracting any data from a document was a time-consuming process. One had to build an ML model or use a cloud service API from Google, Microsoft, or AWS. LLMs make it much easier to extract information from a given document. With an LLM, it takes three simple steps:

- Call the LLM API

- Provide a proper prompt

- Extract the information from the document
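The three steps above can be sketched in plain Python before any real model is involved. In this sketch, `fake_llm`, `build_prompt`, and `extract_info` are hypothetical names used only for illustration. `fake_llm` stands in for a real LLM API call and returns a canned reply, so the flow runs without an API key.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM API call.

    It ignores the prompt and returns a canned reply, so the flow below
    runs without an API key or network access.
    """
    return "{'Invoice no.': '1001329', 'Total': '2200.00'}"


def build_prompt(document_text: str) -> str:
    # Give the model clear instructions, then the document text itself.
    return (
        "Extract the invoice number and total from this data "
        "and answer with a Python dict:\n" + document_text
    )


def extract_info(document_text: str, llm: Callable[[str], str]) -> str:
    # Call the model with the prompt and return its raw text answer,
    # which still has to be parsed into structured data.
    return llm(build_prompt(document_text))


reply = extract_info("INVOICE #1001329 ... TOTAL $2,200.00", fake_llm)
print(reply)
```

Because `llm` is passed in as a plain callable, you can swap the stub for a function that calls a real model. In the bot built below, LangChain's OpenAI wrapper plays that role.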

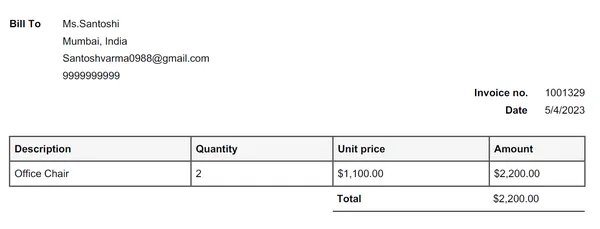

For this demo, we have taken three invoice PDF files. Below is a screenshot of one invoice file.

Step 1: Create an OpenAI API Key

First, you need to create an OpenAI API key (this requires a paid subscription). Instructions for creating one are easy to find online. Assuming the API key has been created, the next step is to install all the necessary packages, such as LangChain, OpenAI, pypdf, etc.

```shell
# installing packages
pip install langchain
pip install langchain-openai
pip install openai
pip install streamlit
pip install pypdf
pip install pandas
pip install python-dotenv
```

Step 2: Importing Libraries

Once all the packages are installed, it's time to import them. We will create two Python files. The first contains all the backend logic (named "utils.py"), and the second builds the front end with the help of the Streamlit package.

First, we'll start with "utils.py", where we'll create a few functions.

```python
# import libraries
import re

import pandas as pd
from pypdf import PdfReader
from langchain_openai import OpenAI
from langchain_core.prompts import PromptTemplate
```

Step 3: Extract Text from a PDF File

Let's create a function that extracts all the text from a PDF file. For this, we'll use the PdfReader class from pypdf:

```python
# Extract text from a PDF file
def get_pdf_text(pdf_doc):
    text = ""
    pdf_reader = PdfReader(pdf_doc)
    for page in pdf_reader.pages:
        # Scanned (image-only) pages yield little or no text
        text += page.extract_text()
    return text
```

Step 4: Extract the Required Data with the LLM

Then, we'll create a function to extract all the required information from an invoice PDF file. In this case, we are extracting Invoice No., Description, Quantity, Date, Unit Price, Amount, Total, Email, Phone Number, and Address by calling the OpenAI LLM API through LangChain.

```python
def extract_data(pages_data):
    # Doubled braces {{ }} are literal braces in the f-string-style template;
    # {pages} is the only placeholder.
    template = '''Extract all following values: invoice no., Description,
Quantity, date, Unit price, Amount, Total,
Email, phone number and Address from this data: {pages}

Expected output: remove any dollar symbols {{'Invoice no.':'1001329',
'Description':'Office Chair', 'Quantity':'2', 'Date':'05/01/2022',
'Unit price':'1100.00', 'Amount':'2200.00', 'Total':'2200.00',
'Email':'[email protected]', 'Phone number':'9999999999',
'Address':'Mumbai, India'}}
'''

    prompt_template = PromptTemplate(input_variables=['pages'], template=template)

    # The OpenAI wrapper reads the API key from the OPENAI_API_KEY variable
    llm = OpenAI(temperature=0.4)
    full_response = llm.invoke(prompt_template.format(pages=pages_data))

    return full_response
```
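The doubled braces in the template are deliberate. By default, LangChain's PromptTemplate uses Python's f-string-style formatting, so `{pages}` is a placeholder and `{{` / `}}` stand for literal braces. The sketch below uses plain `str.format`, which follows the same brace rules, to show what the model actually receives. The trimmed two-field template is an illustration, not the article's full prompt.

```python
# A cut-down version of the extract_data template, keeping two fields.
template = """Extract the invoice no. and total from this data: {pages}
Expected output: {{'Invoice no.':'1001329', 'Total':'2200.00'}}"""

# {pages} is a placeholder; each doubled brace becomes one literal brace.
prompt = template.format(pages="INVOICE 1001329 ... TOTAL 2200.00")
print(prompt)

# With single braces, the example dict is parsed as a placeholder instead,
# and formatting fails because no value named "'Total'" was supplied.
broken = "Expected output: {'Total':'2200.00'} from {pages}"
try:
    broken.format(pages="...")
except KeyError as err:
    print("KeyError for placeholder", err)
```

The same mistake in a LangChain template surfaces as a formatting or validation error rather than a silently wrong prompt, so doubling the braces is the fix to reach for.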

Step 5: Create a Function that Iterates through All the PDF Files

Let's write one last function for the utils.py file. This function iterates through all the PDF files, which means you can upload multiple invoice files in one go.

```python
import ast  # safe parsing of the dict-like reply returned by the LLM


# Iterate over the PDF files uploaded by the user, one by one
def create_docs(user_pdf_list):

    df = pd.DataFrame({'Invoice no.': pd.Series(dtype="str"),
                       'Description': pd.Series(dtype="str"),
                       'Quantity': pd.Series(dtype="str"),
                       'Date': pd.Series(dtype="str"),
                       'Unit price': pd.Series(dtype="str"),
                       'Amount': pd.Series(dtype="str"),
                       'Total': pd.Series(dtype="str"),
                       'Email': pd.Series(dtype="str"),
                       'Phone number': pd.Series(dtype="str"),
                       'Address': pd.Series(dtype="str")
                       })

    for filename in user_pdf_list:
        print(filename)
        raw_data = get_pdf_text(filename)
        llm_extracted_data = extract_data(raw_data)

        # Take everything between the first "{" and the last "}" of the reply
        pattern = r'\{(.+)\}'
        match = re.search(pattern, llm_extracted_data, re.DOTALL)

        if match:
            extracted_text = match.group(1)
            # Convert the extracted text to a dictionary; literal_eval only
            # accepts Python literals, unlike eval(), which runs arbitrary code
            data_dict = ast.literal_eval('{' + extracted_text + '}')
            print(data_dict)
            # DataFrame.append was removed in pandas 2.0; use pd.concat instead
            df = pd.concat([df, pd.DataFrame([data_dict])], ignore_index=True)
        else:
            print("No match found.")

    print("********************DONE***************")
    return df
```

With that, our utils.py file is complete. Now it's time to start on the app.py file, which contains the front-end code built with the Streamlit package.
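Before moving on to app.py, it is worth isolating the most fragile step in create_docs: turning the model's free-text reply into a dict. The sketch below is a hypothetical helper, `parse_llm_dict`, that mirrors the greedy `{...}` regex idea and uses `ast.literal_eval`. It returns `None` instead of raising when the reply is missing, malformed, or contains anything other than plain literals.

```python
import ast
import re
from typing import Optional


def parse_llm_dict(reply: str) -> Optional[dict]:
    """Pull the {...} span out of an LLM reply and parse it safely."""
    # Greedy match from the first "{" to the last "}", across newlines.
    match = re.search(r"\{.+\}", reply, re.DOTALL)
    if not match:
        return None
    try:
        # literal_eval accepts only Python literals (dicts, strings, numbers...),
        # so a reply containing a function call is rejected, not executed.
        value = ast.literal_eval(match.group(0))
    except (ValueError, SyntaxError, TypeError):
        return None
    return value if isinstance(value, dict) else None


reply = "Here is the data:\n{'Invoice no.': '1001329',\n 'Total': '2200.00'}"
print(parse_llm_dict(reply))
print(parse_llm_dict("{'Total': __import__('os').getcwd()}"))
```

The first call returns the parsed dict. The second returns `None` because a function call is not a literal. A helper like this also makes it easy to skip one bad reply without stopping a batch of invoices.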

Streamlit Framework

Streamlit is an open-source Python app framework that makes it easier to build web applications for data science and machine learning. Because it was created for machine learning engineers, you can build apps with it the same way you write Python code. Streamlit is compatible with major Python libraries, including scikit-learn, Keras, PyTorch, SymPy (LaTeX), NumPy, pandas, and Matplotlib. A single pip install gets you started with Streamlit in under a minute.

Install and Import All Packages

First, we'll import all the necessary packages:

```python
# importing packages
import streamlit as st
import os
from dotenv import load_dotenv
from utils import *
```

Create the Main Function

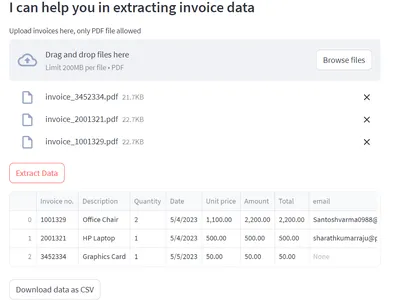

Then we'll create a main function in which we define all the titles, subheaders, and the front-end UI with the help of Streamlit. Believe me, with Streamlit it is very simple and easy.

```python
def main():
    # Load OPENAI_API_KEY (and any other settings) from a .env file
    load_dotenv()

    st.set_page_config(page_title="Invoice Extraction Bot")
    st.title("Invoice Extraction Bot...💁 ")
    st.subheader("I can help you in extracting invoice data")

    # Upload the invoices (PDF files)
    pdf = st.file_uploader("Upload invoices here, only PDF files allowed",
                           type=["pdf"], accept_multiple_files=True)

    submit = st.button("Extract Data")

    if submit:
        with st.spinner('Wait for it...'):
            df = create_docs(pdf)
            st.write(df.head())

            data_as_csv = df.to_csv(index=False).encode("utf-8")
            st.download_button(
                "Download data as CSV",
                data_as_csv,
                "benchmark-tools.csv",
                "text/csv",
                key="download-tools-csv",
            )
        st.success("Hope I was able to save your time❤️")


# Invoking main function
if __name__ == '__main__':
    main()
```

Run streamlit run app.py

Once that's done, save the files and run the "streamlit run app.py" command in the terminal. Remember that Streamlit uses port 8501 by default. You can also download the extracted information as a CSV file, which opens in Excel. The download option is provided in the UI.
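If the app starts but extraction fails with an authentication error, the usual cause is the API key. `main()` calls `load_dotenv()`, which loads variables from a `.env` file (a line such as `OPENAI_API_KEY=your-key`) into the environment. LangChain's OpenAI wrapper then reads the key from the `OPENAI_API_KEY` environment variable. The sketch below is a hypothetical, simplified stand-in for checking that setup. It is not python-dotenv's implementation, which supports more syntax than the plain `KEY=VALUE` lines, comments, and quotes handled here.

```python
import os
from pathlib import Path


def read_env_file(path: str) -> dict:
    """Simplified KEY=VALUE reader (hypothetical helper, not python-dotenv).

    Handles blank lines, '#' comments and optional surrounding quotes only.
    """
    values = {}
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def openai_key_available(env_path: str = ".env") -> bool:
    """True if OPENAI_API_KEY is set in the environment or in env_path."""
    if os.environ.get("OPENAI_API_KEY"):
        return True
    if Path(env_path).exists():
        return bool(read_env_file(env_path).get("OPENAI_API_KEY"))
    return False


if __name__ == "__main__":
    print("OpenAI key found:", openai_key_available())
```

Running this script from the project folder before `streamlit run app.py` gives a quick yes/no answer without spending any API credits.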

Conclusion

Congratulations! You have built an amazing, time-saving app using a large language model and Streamlit. In this article, we learned what a large language model is and how it is useful. In addition, we learned the basics of LangChain and its core components, as well as some features of the Streamlit framework. The most important part of this blog is the "extract_data" function (in the code section), which shows how to give proper prompts and instructions to the LLM.

You have also learned the following:

- How to extract information from an invoice PDF file

- How to use the Streamlit framework for the UI

- How to use the OpenAI LLM

This should give you some ideas for using an LLM with proper prompts and instructions to accomplish your task.

Frequently Asked Questions

A. Streamlit is a library that lets you build the front end (UI) for your data science and machine learning tasks by writing all the code in Python. Attractive UIs can easily be designed with the library's many components.

A. Flask is a lightweight micro-framework that is simple to learn and use. Streamlit is a newer framework made specifically for data-driven web applications.

A. No, it depends on the use case. In this example, we know what information needs to be extracted. If you want to extract more or less information, you need to give the LLM the right instructions and an example, and it will then extract all the information mentioned.
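One way to keep the instructions and the example in step when the set of fields changes is to generate both from a single list. The sketch below uses a hypothetical helper, `build_extraction_prompt`, with illustrative field names. It renders the example with `json.dumps` and refuses to build a prompt whose example lacks a requested field. Because it fills the document text in with an f-string rather than a LangChain template, the example's braces do not need doubling here.

```python
import json


def build_extraction_prompt(fields: list[str], example: dict, document_text: str) -> str:
    """Hypothetical helper: build instructions plus an example from a field list."""
    # Keep the example in sync with the requested fields, so the model sees
    # exactly the keys it should return.
    missing = [f for f in fields if f not in example]
    if missing:
        raise ValueError(f"example is missing fields: {missing}")
    example_json = json.dumps({f: example[f] for f in fields})
    return (
        f"Extract all following values: {', '.join(fields)} "
        f"from this data: {document_text}\n"
        f"Expected output (remove any dollar symbols): {example_json}"
    )


example = {"Invoice no.": "1001329", "Date": "05/01/2022", "Total": "2200.00"}
print(build_extraction_prompt(["Invoice no.", "Total"], example,
                              "INVOICE 1001329 TOTAL $2,200.00"))
```

Adding or dropping a field then means editing one list (and the example dict) instead of rewriting the prompt text by hand.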

A. Generative AI has the potential to profoundly impact how video games are created, developed, and played, and it can also automate many tasks that currently require human effort.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.
