Last April, a campaign ad appeared on the Republican National Committee’s YouTube channel. The ad showed a series of images: President Joe Biden celebrating his reelection, U.S. city streets with shuttered banks and riot police, and immigrants surging across the U.S.-Mexico border. The video’s caption read: “An AI-generated look into the country’s possible future if Joe Biden is re-elected in 2024.”

While that ad was up front about its use of AI, most faked photos and videos are not: That same month, a fake video clip circulated on social media that purported to show Hillary Clinton endorsing the Republican presidential candidate Ron DeSantis. The extraordinary rise of generative AI in the last few years means that the 2024 U.S. election campaign won’t just pit one candidate against another; it will also be a contest of truth versus lies. And the U.S. election is far from the only high-stakes electoral contest this year. According to the Integrity Institute, a nonprofit focused on improving social media, 78 countries are holding major elections in 2024.

Fortunately, many people have been preparing for this moment. One of them is Andrew Jenks, director of media provenance initiatives at Microsoft. Synthetic images and videos, also called deepfakes, are “going to have an impact” in the 2024 U.S. presidential election, he says. “Our goal is to mitigate that impact as much as possible.” Jenks is chair of the Coalition for Content Provenance and Authenticity (C2PA), an organization that’s developing technical methods to document the origin and history of digital-media files, both real and fake. In November, Microsoft also launched an initiative to help political campaigns use content credentials.

The C2PA group brings together the Adobe-led Content Authenticity Initiative and a media provenance effort called Project Origin; in 2021 it released its initial standards for attaching cryptographically secure metadata to image and video files. In its system, any alteration of the file is automatically reflected in the metadata, breaking the cryptographic seal and making evident any tampering. If the person altering the file uses a tool that supports content credentialing, information about the changes is added to the manifest that travels with the image.
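The tamper-evident idea can be illustrated with a minimal Python sketch. It is not the C2PA format: the function names, the manifest layout, and the demo key are all assumptions made for illustration, and a shared-secret HMAC stands in for the certificate-based signatures a real system would use. The point is only that the manifest binds a hash of the file to a signed claim, so changing either one is detectable.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. Real content credentials rely on certificate-based
# public-key signatures; a shared-secret HMAC stands in here for brevity.
SIGNING_KEY = b"demo-signing-key"


def _sign(claim: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 over a canonical JSON encoding of the claim."""
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_manifest(asset: bytes, assertions: list, key: bytes = SIGNING_KEY) -> dict:
    """Bundle a hash of the asset and its provenance assertions, then sign them."""
    claim = {
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
        "assertions": assertions,
    }
    return {"claim": claim, "signature": _sign(claim, key)}


def verify(asset: bytes, manifest: dict, key: bytes = SIGNING_KEY) -> bool:
    """Check that the claim is unmodified and still matches the asset bytes."""
    claim = manifest["claim"]
    signature_ok = hmac.compare_digest(manifest["signature"], _sign(claim, key))
    hash_ok = claim["asset_sha256"] == hashlib.sha256(asset).hexdigest()
    return signature_ok and hash_ok


photo = b"original pixel data"
manifest = make_manifest(photo, [{"action": "created", "tool": "example-camera"}])

print(verify(photo, manifest))         # True: file and manifest are untouched
print(verify(photo + b"!", manifest))  # False: the file no longer matches its hash
```

Editing the assertions without re-signing fails in the same way, because the signature covers the whole claim.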

Since releasing the standards, the group has been further developing the open-source specifications and implementing them with leading media companies: the BBC, the Canadian Broadcasting Corp. (CBC), and The New York Times are all C2PA members. For the media companies, content credentials are a way to build trust at a time when rampant misinformation makes it easy for people to cry “fake” on anything they disagree with (a phenomenon known as the liar’s dividend). “Having your content be a beacon shining through the murk is really important,” says Laura Ellis, the BBC’s head of technology forecasting.

This year, deployment of content credentials will begin in earnest, spurred by new AI regulations in the United States and elsewhere. “I think 2024 will be the first time my grandmother runs into content credentials,” says Jenks.

Why do we need content credentials?

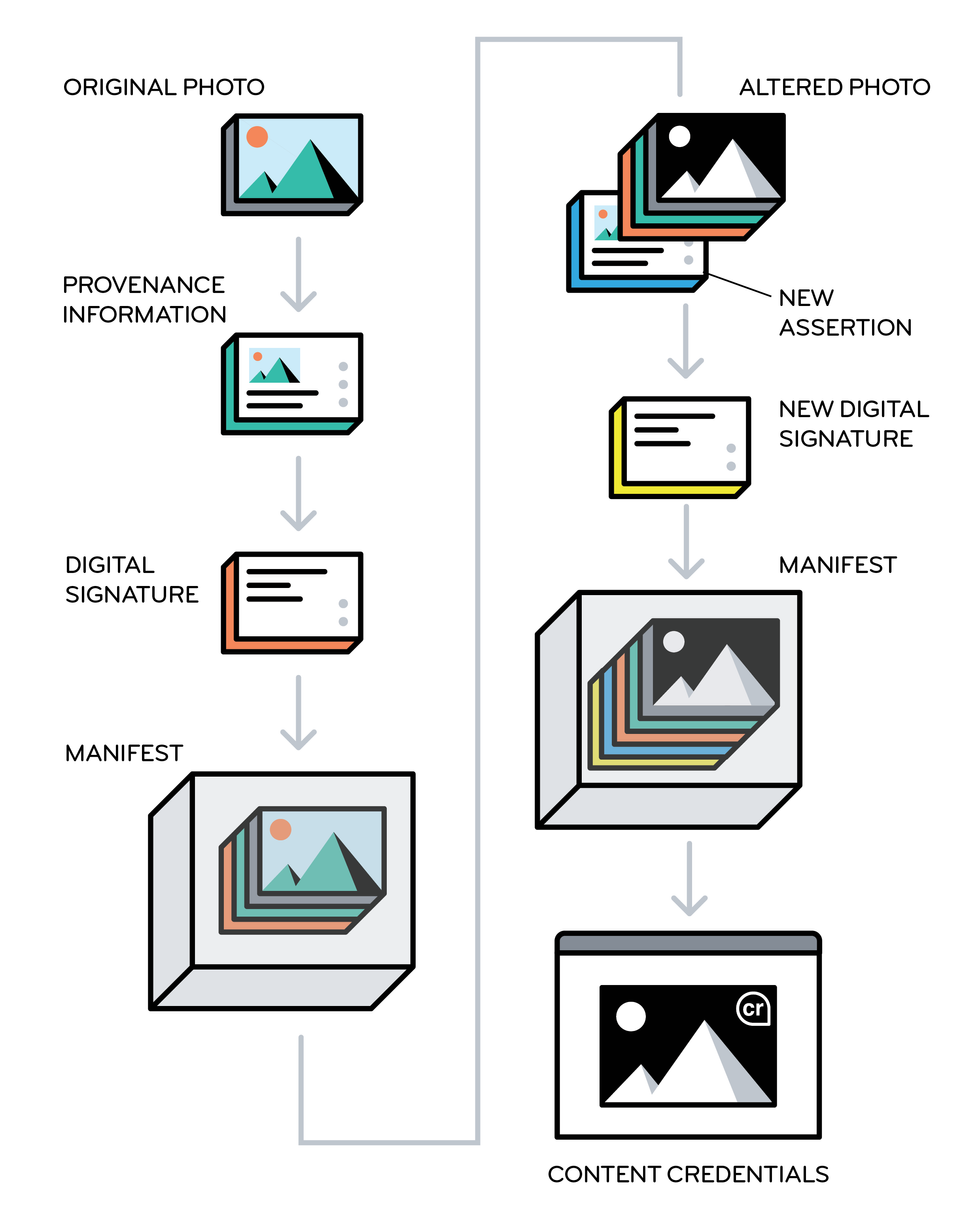

In the content-credentials system, an original photo is supplemented with provenance information and a digital signature that are bundled together in a tamper-evident manifest. If another user alters the photo using an approved tool, new assertions are added to the manifest. When the image shows up on a Web page, viewers can click the content-credentials logo for information about how the image was created and altered. C2PA

The crux of the problem is that image-generating tools like DALL-E 2 and Midjourney make it easy for anyone to create realistic-but-fake photos of events that never happened, and similar tools exist for video. While the major generative-AI platforms have protocols to prevent people from creating fake photos or videos of real people, such as politicians, plenty of hackers delight in “jailbreaking” these systems and finding ways around the safety checks. And less-reputable platforms have fewer safeguards.

Against this backdrop, a few big media organizations are making a push to use the C2PA’s content credentials system to allow Internet users to check the manifests that accompany validated images and videos. Images that have been authenticated by the C2PA system can include a little “cr” icon in the corner; users can click on it to see whatever information is available for that image: when and how the image was created, who first published it, what tools they used to alter it, how it was altered, and so on. However, viewers will see that information only if they’re using a social-media platform or application that can read and display content-credential data.

The same system can be used by AI companies that make image- and video-generating tools; in that case, the synthetic media that’s been created would be labeled as such. Some companies are already on board: Adobe, a cofounder of C2PA, generates the relevant metadata for every image that’s created with its image-generating tool, Firefly, and Microsoft does the same with its Bing Image Creator.

The move toward content credentials comes as enthusiasm fades for automated deepfake-detection systems. According to the BBC’s Ellis, “we decided that deepfake-detection was a war-game space,” meaning that the best current detector could be used to train an even better deepfake generator. The detectors also aren’t very good. In 2020, Meta’s Deepfake Detection Challenge awarded top prize to a system that had only 65 percent accuracy in distinguishing between real and fake.

While only a few companies are integrating content credentials so far, regulations are currently being crafted that will encourage the practice. The European Union’s AI Act, now being finalized, requires that synthetic content be labeled. And in the United States, the White House recently issued an executive order on AI that requires the Commerce Department to develop guidelines for both content authentication and labeling of synthetic content.

Bruce MacCormack, chair of Project Origin and a member of the C2PA steering committee, says the big AI companies started down the path toward content credentials in mid-2023, when they signed voluntary commitments with the White House that included a pledge to watermark synthetic content. “They all agreed to do something,” he notes. “They didn’t agree to do the same thing. The executive order is the driving function to force everybody into the same space.”

What will happen with content credentials in 2024

Some people liken content credentials to a nutrition label: Is this junk media or something made with real, wholesome ingredients? Tessa Sproule, the CBC’s director of metadata and information systems, says she thinks of it as a chain of custody that’s used to track evidence in legal cases: “It’s secure information that can grow through the content life cycle of a still image,” she says. “You stamp it at the input, and then as we manipulate the image through cropping in Photoshop, that information is also tracked.”
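The chain-of-custody analogy can be sketched in a few lines of Python. This is an illustration only, under stated assumptions: the function names and entry fields are hypothetical and are not drawn from the C2PA specification, and the entries are unsigned, whereas a real system would also sign each one. Every edit appends an entry that records a hash of the new image and a hash of the previous entry, so rewriting any earlier step breaks the links that follow it.

```python
import hashlib
import json


def _digest(entry: dict) -> str:
    """Hash an entry using a canonical JSON encoding."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


def stamp(asset: bytes, tool: str) -> list:
    """Start a history at the point of capture or ingest."""
    entry = {
        "action": "created",
        "tool": tool,
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
        "prev": None,
    }
    return [entry]


def record_edit(history: list, edited_asset: bytes, action: str, tool: str) -> list:
    """Append an entry for an edit, linked to the hash of the previous entry."""
    entry = {
        "action": action,
        "tool": tool,
        "asset_sha256": hashlib.sha256(edited_asset).hexdigest(),
        "prev": _digest(history[-1]),
    }
    return history + [entry]


def chain_is_intact(history: list) -> bool:
    """Check that every entry still points at the unmodified entry before it."""
    if not history or history[0]["prev"] is not None:
        return False
    return all(cur["prev"] == _digest(prev) for prev, cur in zip(history, history[1:]))


original = b"full-frame image bytes"
cropped = b"cropped image bytes"

history = stamp(original, tool="example-camera")
history = record_edit(history, cropped, action="cropped", tool="example-editor")

print([entry["action"] for entry in history])  # ['created', 'cropped']
print(chain_is_intact(history))                # True
```

Changing the first entry after the fact, for example swapping in a different tool name, makes `chain_is_intact` return `False`, because the second entry still holds the hash of the original first entry.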

Sproule says her team has been overhauling internal image-management systems and designing the user experience with layers of information that users can dig into, depending on their level of interest. She hopes to debut, by mid-2024, a content-credentialing system that will be visible to any outside viewer using a type of software that recognizes the metadata. Sproule says her team also wants to go back into their archives and add metadata to those files.

At the BBC, Ellis says they’ve already done trials of adding content-credential metadata to still images, but “where we need this to work is on the [social media] platforms.” After all, it’s less likely that viewers will doubt the authenticity of a photo on the BBC website than if they encounter the same image on Facebook. The BBC and its partners have also been running workshops with media organizations to talk about integrating content-credentialing systems. Recognizing that it may be hard for small publishers to adapt their workflows, Ellis’s group is also exploring the idea of “service centers” to which publishers could send their images for validation and certification; the images would be returned with cryptographically hashed metadata attesting to their authenticity.

MacCormack notes that the early adopters aren’t necessarily keen to begin advertising their content credentials, because they don’t want Internet users to doubt any image or video that doesn’t have the little “cr” icon in the corner. “There has to be a critical mass of information that has the metadata before you tell people to look for it,” he says.

Going beyond the media industry, Microsoft’s new initiative for political campaigns, called Content Credentials as a Service, is intended to help candidates control their own images and messages by enabling them to stamp authentic campaign material with secure metadata. A Microsoft blog post said that the service “will launch in the spring as a private preview” that’s available for free to political campaigns. A spokesperson said that Microsoft is exploring ideas for this service, which “could eventually become a paid offering” that’s more broadly available.

The big social-media platforms haven’t yet made public their plans for using and displaying content credentials, but Claire Leibowicz, head of AI and media integrity for the Partnership on AI, says they’ve been “very engaged” in discussions. Companies like Meta are now thinking about the user experience, she says, and are also pondering practicalities. She cites compute requirements as an example: “If you add a watermark to every piece of content on Facebook, will that make it have a lag that makes users sign off?” Leibowicz expects regulations to be the biggest catalyst for content-credential adoption, and she’s anticipating more information about how Biden’s executive order will be enacted.

Even before content credentials start showing up in users’ feeds, social-media platforms can use that metadata in their filtering and ranking algorithms to find trustworthy content to recommend. “The value happens well before it becomes a consumer-facing technology,” says Project Origin’s MacCormack. The systems that manage information flows from publishers to social-media platforms “will be up and running well before we start educating consumers,” he says.

If social-media platforms are the end of the image-distribution pipeline, the cameras that record images and videos are the beginning. In October, Leica unveiled the first camera with built-in content credentials; C2PA member companies Nikon and Canon have also made prototype cameras that incorporate credentialing. But hardware integration should be considered “a growth step,” says Microsoft’s Jenks. “In the best case, you start at the lens when you capture something, and you have this digital chain of trust that extends all the way to where something is consumed on a Web page,” he says. “But there’s still value in just doing that last mile.”

This article appears in the January 2024 print issue as “This Election Year, Look for Content Credentials.”
