Online dating has fundamentally changed the way people seek romantic partners. Fifty-three percent of American adults between the ages of 18 and 29 have used dating apps, according to a recent Pew Research study, and the industry is estimated to reach a market value of $8.18 billion by the end of 2023.

Despite the popularity of online dating, users are increasingly concerned about the stalking, online sexual abuse, and unwanted sharing of explicit images that occur on these platforms. The Pew study found that 46% of online dating users in the US have had negative experiences with apps, and 32% no longer think it’s a safe way to meet people. An Australian survey of online daters had similarly concerning results: One-third of respondents reported experiencing some form of in-person abuse from someone they met on an app, such as sexual abuse, coercion, verbal manipulation, or stalking behavior.

During my time as a creative director and UX consultant for companies like Hertz, Jaguar Land Rover, and the dating app Thursday, I’ve learned that user trust and safety significantly influence a product’s success. When it comes to dating apps, designers can protect user welfare by implementing ID verification systems and prioritizing abuse detection and reporting systems. Additionally, designers can introduce UX features that clarify consent and educate users about safe online dating practices.

During account creation, the onboarding process should prompt users to provide comprehensive profile information, including their full name, age, and location. From there, multifactor authentication methods such as checking email addresses, social media accounts, phone numbers, and government-issued IDs can verify that profile-makers are who they claim to be, thus building user trust.
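As a rough illustration of the onboarding logic described above, the following Python sketch tracks which verification factors a new profile has completed. The `Profile` class, its field names, and the list of required checks are all hypothetical; a real app would pick its own factors and delegate each check to a dedicated verification service.

```python
from dataclasses import dataclass, field

# Hypothetical factor names; a real app would define its own list.
REQUIRED_CHECKS = ("email", "phone", "government_id")


@dataclass
class Profile:
    full_name: str
    age: int
    location: str
    passed_checks: set[str] = field(default_factory=set)

    def record_check(self, check: str) -> None:
        """Mark one verification factor as passed."""
        if check not in REQUIRED_CHECKS:
            raise ValueError(f"unknown check: {check}")
        self.passed_checks.add(check)

    def missing_checks(self) -> list[str]:
        """Return the factors still outstanding, in display order."""
        return [c for c in REQUIRED_CHECKS if c not in self.passed_checks]

    @property
    def is_verified(self) -> bool:
        """A profile counts as verified only when every factor has passed."""
        return not self.missing_checks()
```

Keeping the outstanding checks as an ordered list lets the onboarding UI show users exactly which step remains, rather than a bare "not verified" state.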

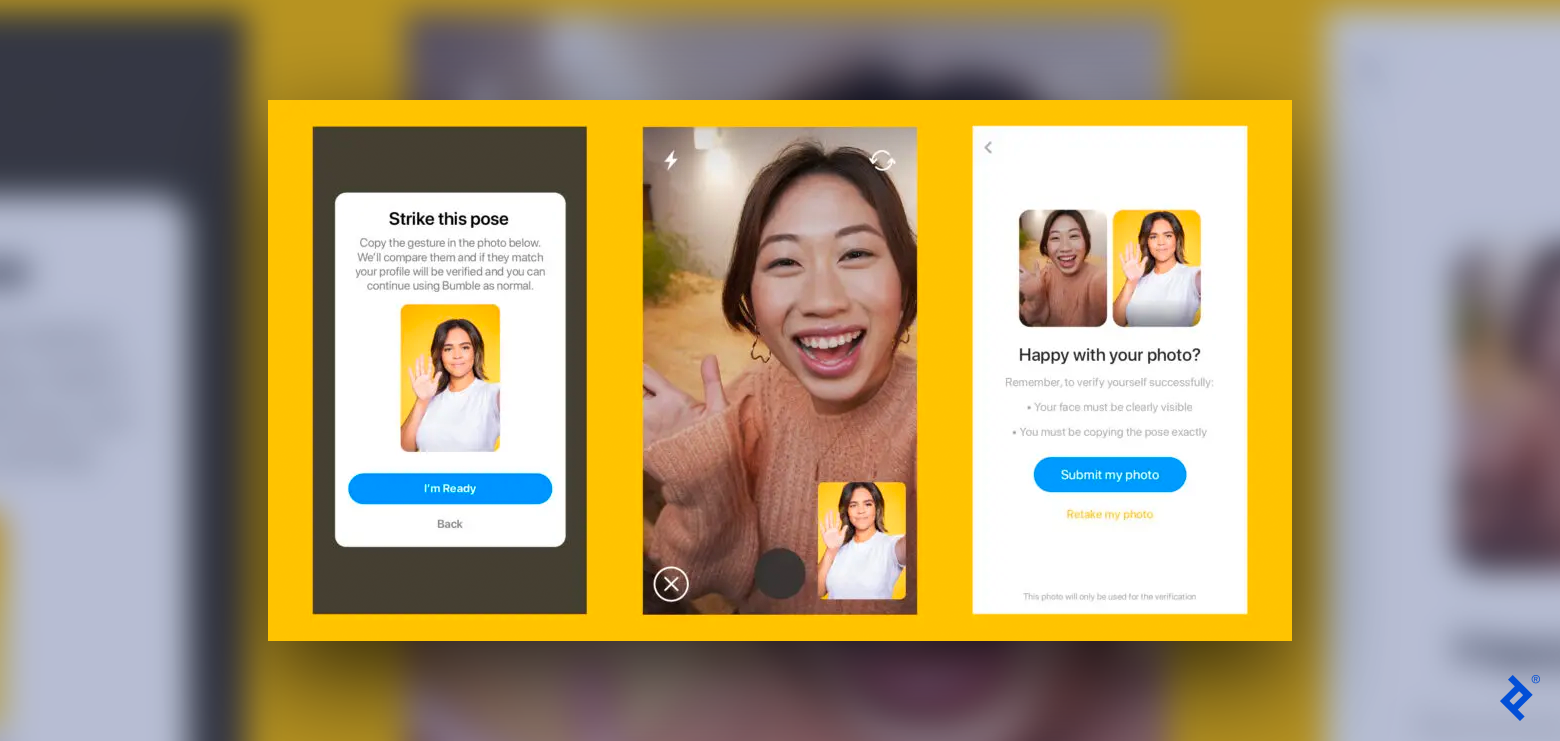

Bumble, a dating app where women make the first move, requires users to submit a photo and a video of themselves performing a specific action, such as holding up a particular number of fingers or posing with a hand wave. Human moderators compare the selfie with the profile picture to verify the legitimacy of the account. For added peace of mind, verified users can ask their matches to verify their profile again using photo verification. Bumble notifies the user whether their match is verified, and the user can decide to end the interaction. Being transparent about what your dating app does with user information and images is also key to maintaining trust: Bumble, for example, makes it clear in its privacy policy that the company will hold onto the verification selfie and video until a user is inactive for three years.

Tinder’s onboarding process includes video verification and requires users to send snippets of themselves answering a prompt. AI facial recognition compares the video to the profile photo by creating a unique facial-geometry template. Once verified, users can customize their settings to match only with other verified users. Tinder deletes the facial template and video within 24 hours but retains two screenshots from each for as long as the user keeps the account.

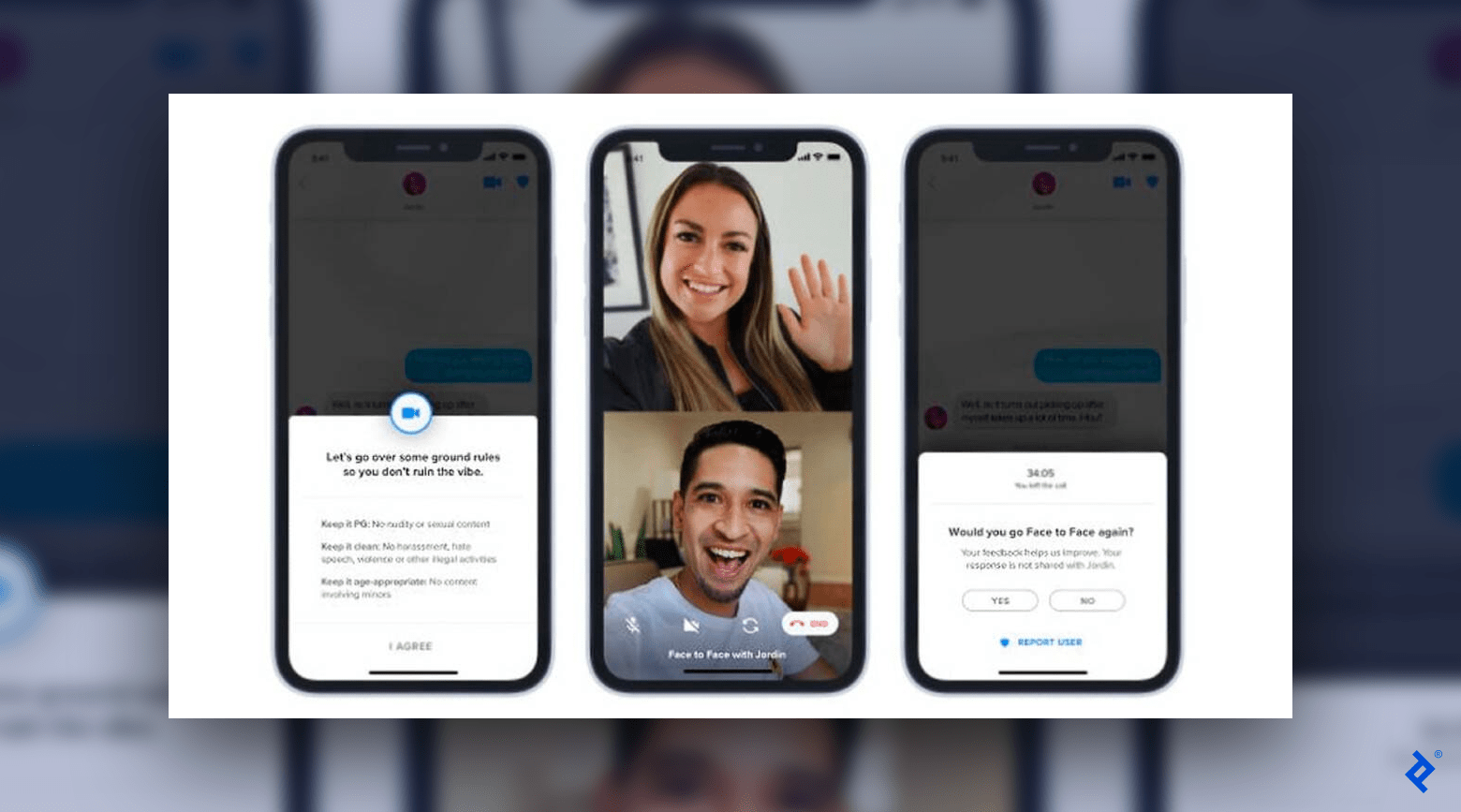

While human moderators and AI tools are highly useful, they can only go so far in identifying scammers or technology that evades verification, such as face anonymization. In response to these threats, some dating apps empower users to take extra safety precautions. For instance, in-app video chats allow users to determine the legitimacy of a match’s profile before meeting in person. Though video chats aren’t 100% safe, designers can introduce features that minimize risk. Tinder’s Face to Face video chat requires both users to agree to the chat before it begins, and also establishes ground rules, such as no sexual content or violence, that users must agree to for the call to proceed. Once the call ends, Tinder immediately asks for feedback so that users can report inappropriate behavior.

Prioritize Reporting and Detection to Protect Users

Designing an intuitive reporting system makes it easier for users to notify dating apps when harassment, abuse, or inappropriate behavior occurs. The UI components used to submit reports should be accessible from multiple screens in the app so that users can log issues in just a few taps. For example, Bumble’s Block & Report feature makes it simple for users to report inappropriate behavior from the app’s messaging screen or from an offending user’s profile.

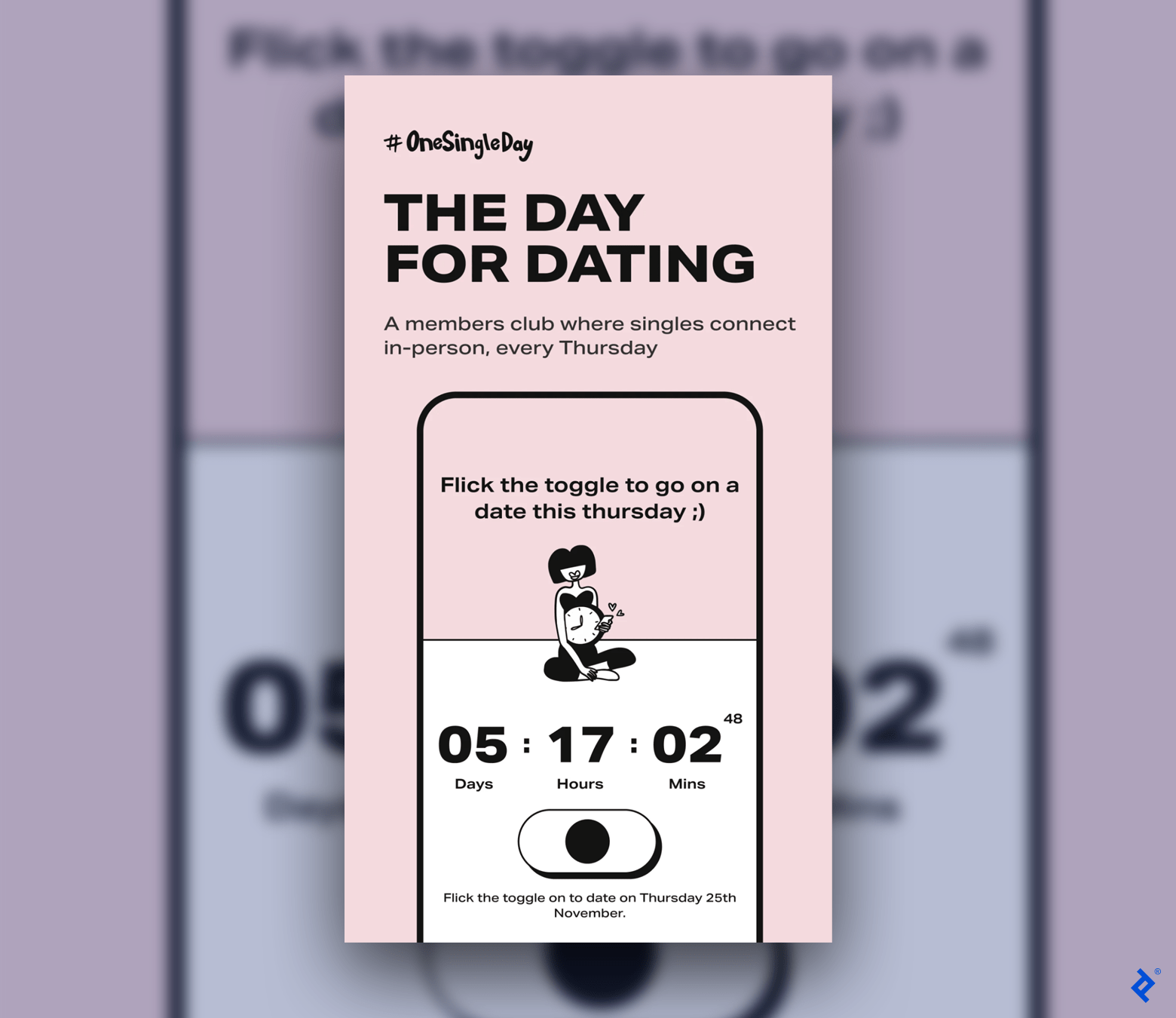

When I worked on the MVP for Thursday, safety was a primary concern. The app started with the premise that people spend too much time online searching for potential dates. Every Thursday, the app becomes available for people to match with users looking to meet that day. Otherwise, the app is virtually “closed” for the rest of the week. Given this unique rhythm, the user experience is limited, so security protocols had to be seamless and reliable.

I tackled the challenge of reporting and filtering in Thursday by using third-party software that scans for harmful content (e.g., cursing or lewd language) before a user sends a message. The software asks the sender if their message might be perceived as offensive or disrespectful. If the sender still decides to send the message, the software enables the receiver to block or report the sender. It’s similar to Tinder’s Are You Sure? feature, which asks users if they’re certain about sending a message that AI has flagged as inappropriate. Tinder’s filtering feature reduced harmful messages by more than 10% in early testing and decreased inappropriate behavior long term.
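The pre-send check described above can be sketched in a few lines of Python. This is a minimal illustration only, not the third-party software used in Thursday or Tinder’s implementation: the `FLAGGED_TERMS` word list, the `Delivery` type, and the `sender_confirms` callback (standing in for the confirmation dialog) are all assumptions, and a production system would use a trained classifier rather than keyword matching.

```python
from dataclasses import dataclass

# Placeholder word list; a real filter would be far more sophisticated.
FLAGGED_TERMS = {"idiot", "stupid"}


@dataclass
class Delivery:
    sent: bool
    flagged: bool  # when True, the receiver's UI would surface block/report actions


def is_flagged(text: str) -> bool:
    """Return True if any word in the message matches the placeholder list."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return bool(words & FLAGGED_TERMS)


def send_message(text: str, sender_confirms) -> Delivery:
    """Screen a message before sending.

    `sender_confirms` is a hypothetical callback for the "are you sure?"
    prompt: it receives the text and returns True if the sender still
    wants to send it.
    """
    flagged = is_flagged(text)
    if flagged and not sender_confirms(text):
        return Delivery(sent=False, flagged=True)
    return Delivery(sent=True, flagged=flagged)
```

Carrying the `flagged` state along with a delivered message is what lets the receiving side offer block and report controls right next to the content that triggered the warning.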

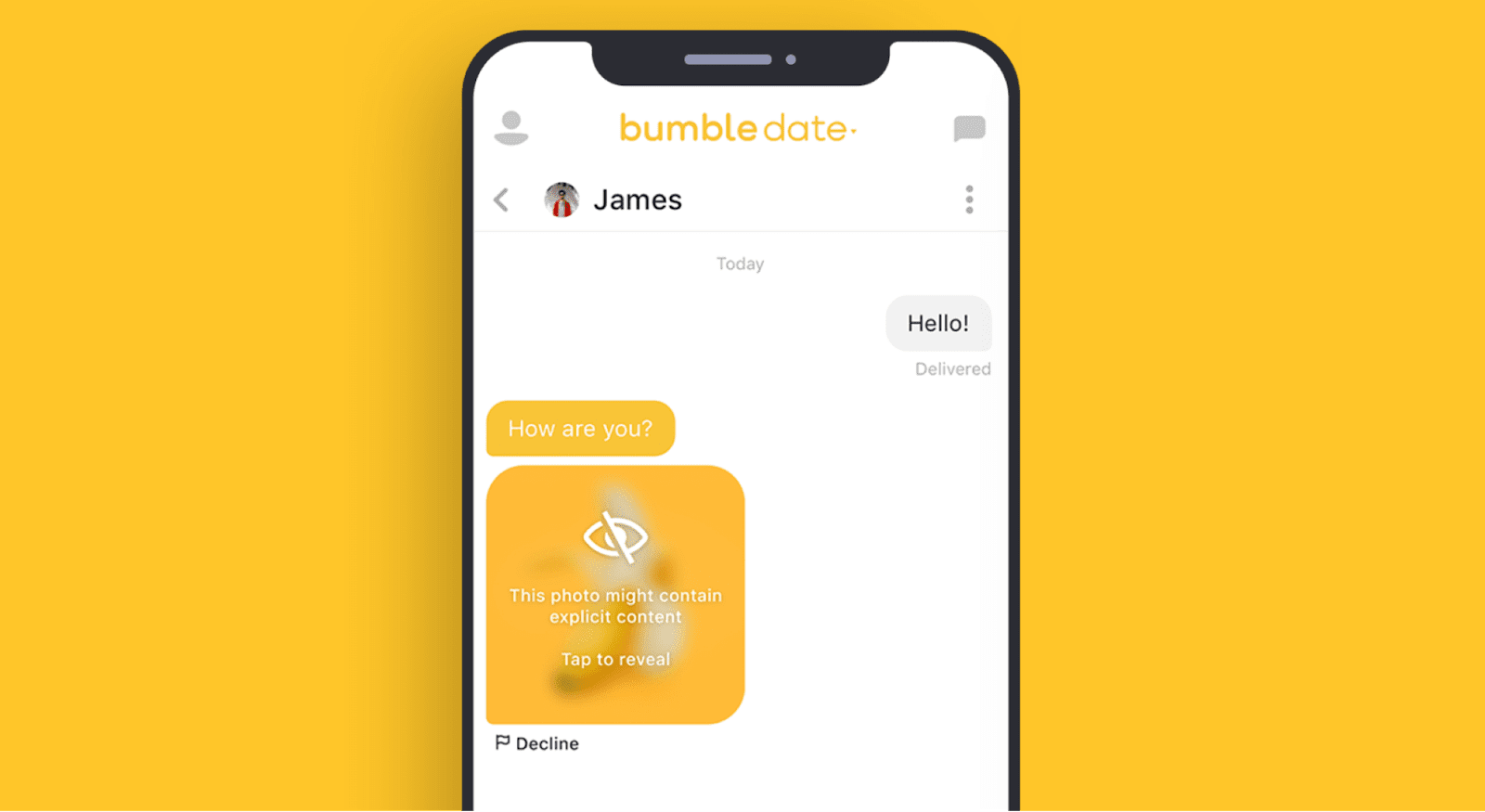

AI and machine learning can also protect users by preemptively flagging harmful content. Bumble’s Private Detector uses AI to identify and blur inappropriate images, and allows users to unblur an image if desired. Similarly, Tinder’s Does This Bother You? feature uses machine learning to detect and flag potentially harmful content and gives users an opportunity to report abuse.

It’s also worth mentioning that reporting can extend to in-person interactions. For example, Hinge, a prompt-based dating app, has a feedback tool called We Met. The feature surveys users who met in person about how their interaction went and allows users to privately report matches who were disrespectful on a date. When one user reports another, Hinge blocks both parties from interacting with each other on the platform and uses the feedback to improve its matching algorithm.
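The “block both parties” rule described above amounts to a symmetric block: once either user files a report, neither can reach the other. The Python sketch below shows one way to model that, assuming a hypothetical `BlockList` class and plain string user IDs. It is not Hinge’s implementation.

```python
class BlockList:
    """Stores reported pairs so that a block applies in both directions."""

    def __init__(self) -> None:
        # Each entry is an unordered pair of user IDs.
        self._pairs: set[frozenset[str]] = set()

    def report(self, reporter: str, reported: str) -> None:
        """Record a report and block the two users from each other."""
        if reporter == reported:
            raise ValueError("a user cannot report themselves")
        self._pairs.add(frozenset((reporter, reported)))

    def can_interact(self, a: str, b: str) -> bool:
        """Return False if either user has reported the other."""
        return frozenset((a, b)) not in self._pairs
```

Storing each pair as a `frozenset` makes the lookup order-independent, so the app does not need separate checks for who reported whom.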

Educate and Inform to Clarify Consent

Even with robust ID verification and reporting features, users may still encounter harmful situations because of dating’s intimate nature. To protect users, dating apps should offer guidance on safe dating practices and consent through digital content, company policies, and UX features.

Tinder educates users by linking to an extensive library of safety-related content on its homepage. The company provides tips for online dating, recommendations for meeting in person, and a lengthy list of resources for users seeking additional help, support, or advice.

Bumble’s blog, The Buzz, also features several articles about clarifying consent and identifying and preventing harassment. Consent is when a person gives an “enthusiastic ‘yes’” to a sexual request, whether it’s online or in person. Individuals are entitled to revoke consent during an encounter, and prior consent doesn’t equal current consent. The bulk of dating app interactions are digital and nonverbal, meaning there’s potential for confusion and miscommunication between users. To combat this, dating apps need to have clear and easily accessible consent policies.

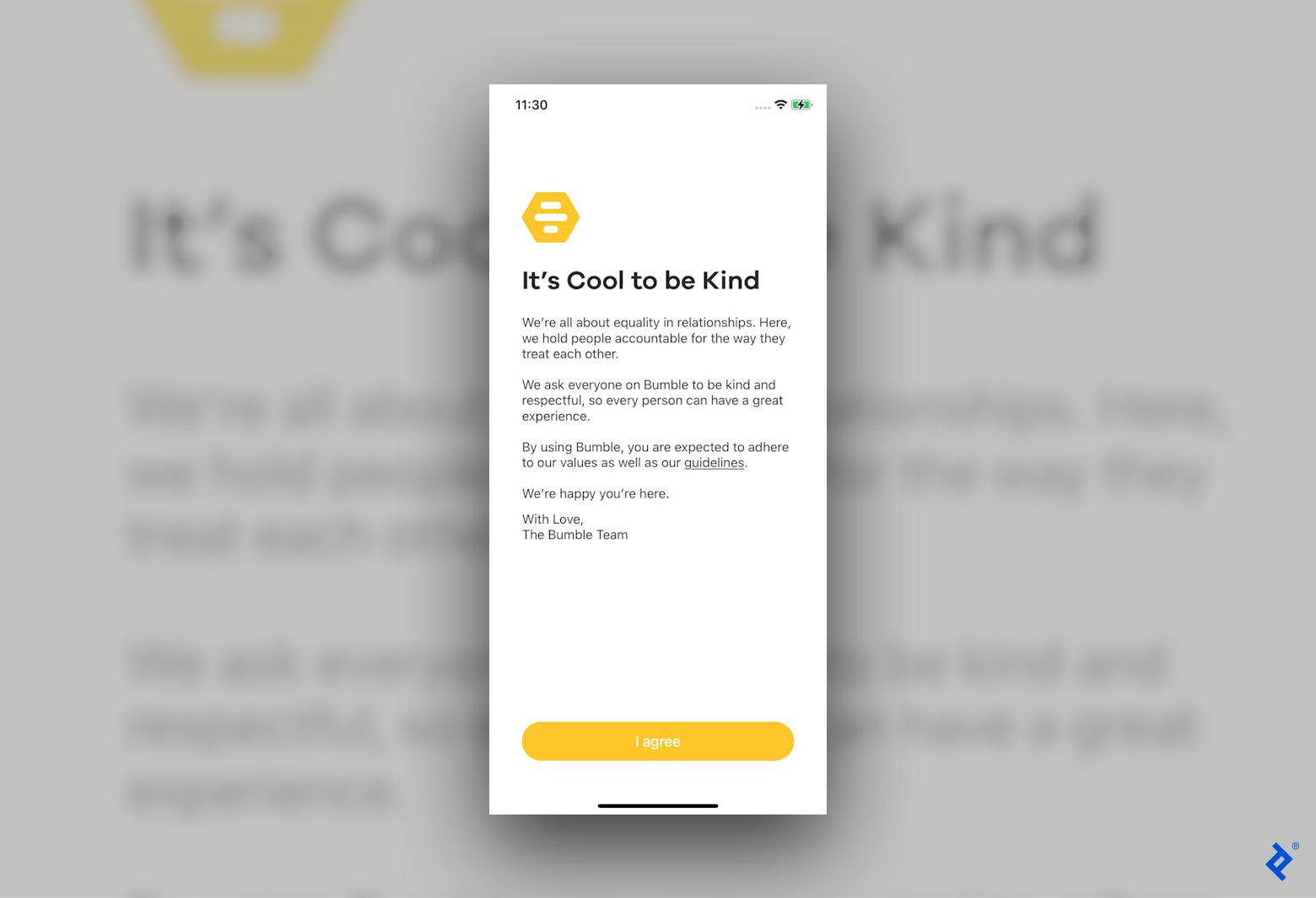

For instance, Bumble encourages users to report disrespectful and nonconsensual behavior, and if a user responds rudely when someone rejects a sexual request, it’s grounds for getting banned from the app. However, Bumble’s onboarding only hints at this rule; more explicit UX writing or highlighting the company’s consent policy during onboarding would reduce ambiguity and instill greater trust in the product.

Familiar visual cues such as icons can also pave the way for clearer interactions between users. In most dating apps, the heart icon means a user likes a person’s entire profile. Hinge, though, allows users to place hearts on parts of an account, such as a person’s response to a prompt or a profile image. This feature is not a means of granting consent, but it is a thoughtful attempt to foster a more nuanced conversation about what a user likes and doesn’t like.

Designing a Safer Future for Online Dating

As concerns around privacy and security increase, user safety in dating app design must evolve. One trend likely to continue is the use of multifactor authentication methods such as facial recognition and email verification to verify identities and prevent fraud or impersonation.

Another significant trend will be the increased use of AI and machine learning to mitigate potential risks before they escalate. As these technologies become more sophisticated, they’ll be able to automatically identify and respond to potential threats, helping dating apps provide a safer experience. Government agencies are also likely to play a more significant role in establishing and enforcing standards for user safety, and designers will need to stay current on these policies and ensure that their products comply with relevant laws and guidelines.

Ensuring safety goes beyond design alone. Malicious users will continue to find new ways to deceive and harass, even manipulating AI technology to do so. Keeping users safe is a constant process of iteration led by user testing and analysis of an app’s usage patterns. When dating app UX prioritizes safety, users have a better chance at falling in love with a potential match, and with your app.
