
At Microsoft we want to empower our customers to harness the full potential of new technologies like artificial intelligence, while meeting their privacy needs and expectations. Today we are sharing key aspects of our approach to protecting privacy in AI, including our focus on security, transparency, user control, and continued compliance with data protection requirements, and explaining how these are core components of our new generative AI products like Microsoft Copilot.

We build security and privacy into our products through all phases of design and implementation. We provide transparency so that people and organizations can understand the capabilities and limitations of our AI systems, and the sources of information behind the responses they receive, by offering information in real time as users engage with our AI products. We provide tools and clear choices so people can control their data, including tools to access, manage, and delete personal data and stored conversation history.

Our approach to privacy in AI systems is grounded in our longstanding belief that privacy is a fundamental human right. We are committed to continued compliance with all applicable laws, including privacy and data protection regulations, and we support accelerating the development of appropriate guardrails to build trust in AI systems.

We believe the approach we have taken to enhance privacy in our AI technology will help give people clarity about how they can control and protect their data in our new generative AI products.

Our approach

Data security is core to privacy

Keeping data secure is an essential privacy principle at Microsoft and is critical to ensuring trust in AI systems. Microsoft implements appropriate technical and organizational measures to ensure data is secure and protected in our AI systems.

Microsoft has integrated Copilot into many different services, including Microsoft 365, Dynamics 365, Viva Sales, and Power Platform, and each product is built and deployed with critical security, compliance, and privacy policies and processes. Our security and privacy teams apply privacy and security by design throughout the development and deployment of all our products. We employ multiple layers of protective measures to keep data secure in AI products like Microsoft Copilot, including technical controls such as encryption, all of which play a crucial role in the data security of our AI systems. Keeping data safe and secure in AI systems, and ensuring that those systems are architected to respect data access and handling policies, are central to our approach. Security and privacy are principles built into our internal Responsible AI Standard, and we are committed to continuing to focus on privacy and security to keep our AI products safe and trustworthy.

Transparency

Transparency is another key principle for integrating AI into Microsoft products and services in a way that promotes user control and privacy and builds trust. That is why we are committed to building transparency into people's interactions with our AI systems. This approach starts with making it clear to users when they are interacting with an AI system if there is a risk they could be confused. We also provide real-time information to help people better understand how AI features work.

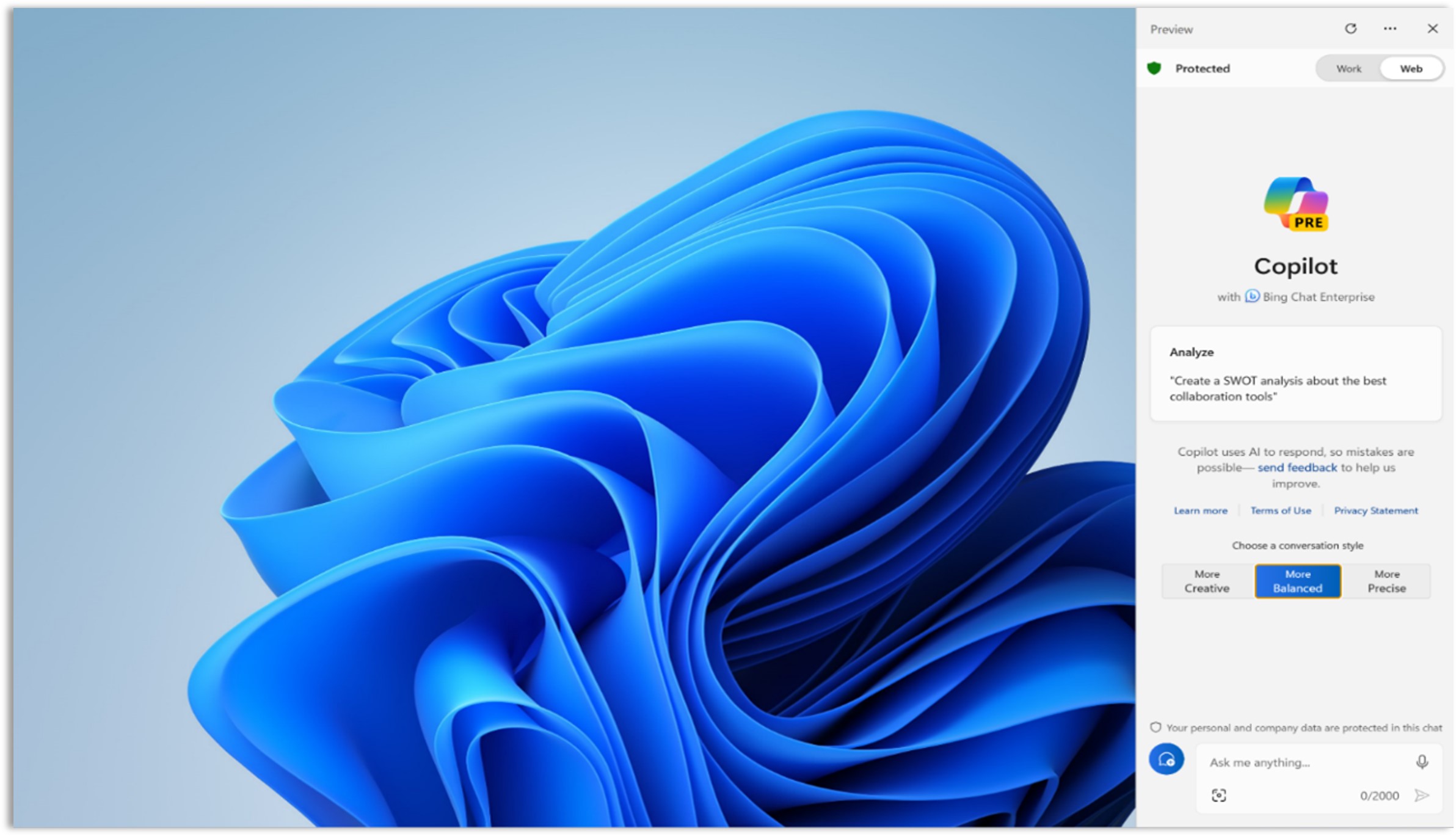

Microsoft Copilot uses a variety of transparency approaches that meet users where they are. Copilot provides clear information about how it collects and uses data, as well as about its capabilities and limitations. Our approach to transparency also helps people understand how they can best use Copilot as an everyday AI tool, and it offers opportunities to learn more and provide feedback.

Clear choices and disclosures while users engage with Microsoft Copilot

To help people understand the capabilities of these new AI tools, Copilot provides in-product information that clearly lets users know they are interacting with AI and offers easy-to-understand choices in a conversational style. As people interact with Copilot, these disclosures and choices help them better understand how to harness the benefits of AI and limit potential risks.

Grounding responses in evidence and sources

Copilot also provides information about how its responses are based, or "grounded", on relevant content. In our AI offerings in Bing, Copilot.microsoft.com, Microsoft Edge, and Windows, Copilot responses include information about the web content that helped generate the response. In Copilot for Microsoft 365, responses can also include information about the user's business data that was included in a generated response, such as emails or documents the user already has permission to access. By sharing links to input sources and source materials, Copilot gives people greater control over their AI experience, helps them better evaluate the credibility and relevance of its outputs, and lets them access more information as needed.

Data protection user controls

Microsoft provides tools that put people in control of their data. We believe all organizations offering AI technology should ensure consumers can meaningfully exercise their data subject rights.

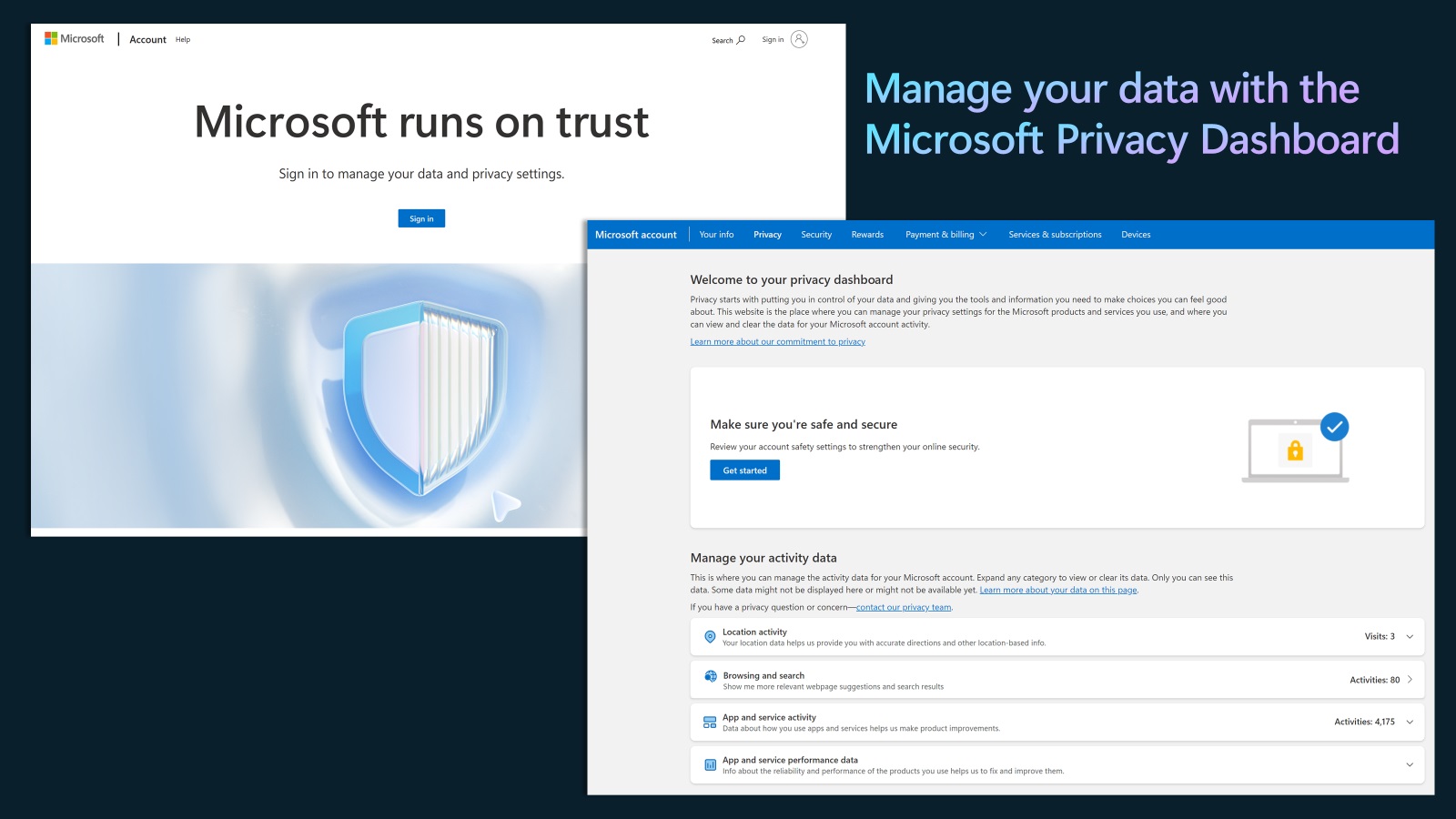

Microsoft gives you the ability to control your interactions with Microsoft products and services and honors your privacy choices. Through the Microsoft Privacy Dashboard, our account holders can access, manage, and delete their personal data and stored conversation history. In Microsoft Copilot, we also honor the privacy choices users have made in our cookie banners and other controls, including choices about data collection and use.

The Microsoft Privacy Dashboard allows users to access, manage, and delete their data when signed in to their Microsoft Account

Additional transparency about our privacy practices

Microsoft provides more detailed information about how we protect individuals' privacy in Microsoft Copilot and our other AI products in our transparency materials, such as the M365 Copilot FAQs and The New Bing: Our Approach to Responsible AI, which are publicly available online. These materials describe in greater detail how our AI products are designed, tested, and deployed, and how they address ethical and social issues such as fairness, privacy, security, and accountability. Our users and the public can also review the Microsoft Privacy Statement, which provides information about our privacy practices and controls for all of Microsoft's consumer products.

AI systems are new and complex, and we are still learning how best to inform our users about our groundbreaking new AI tools in a meaningful way. We continue to listen to and incorporate feedback to ensure we provide clear information about how Microsoft Copilot works.

Complying with existing laws and supporting developments in global data protection regulation

Microsoft complies today with data protection laws in all jurisdictions where we operate. We will continue to work closely with governments around the world to ensure we remain compliant, even as legal requirements evolve and change.

Companies that develop AI systems have an important role to play in working with privacy and data protection regulators around the world to help them understand how AI technology is evolving. We engage with regulators to share information about how our AI systems work, how they protect personal data, the lessons we have learned while developing privacy, security, and responsible AI governance systems, and our ideas about how to address the unique issues surrounding AI and privacy.

Regulatory approaches to AI are advancing in the European Union through its AI Act, and in the United States through the President's Executive Order on AI. We expect regulators around the globe will increasingly seek to address the opportunities and challenges that new AI technologies bring to privacy and other fundamental rights. Microsoft's contribution to this global regulatory dialogue includes our Blueprint for Governing AI, in which we suggest a variety of approaches and controls governments may want to consider to protect privacy, advance fundamental rights, and ensure AI systems are safe. We will continue to work closely with data protection authorities and privacy regulators around the world as they develop their approaches.

As society moves forward in this era of AI, we will need privacy leaders in government, industry, civil society, and academia to work together to advance harmonized regulations that ensure AI innovations benefit everyone and are centered on protecting privacy and other fundamental human rights.

At Microsoft, we are committed to doing our part.
