
In Part 1 of this blog series, we discussed how Databricks enables organizations to develop, manage and operate processes that extract value from their data and AI. This time, we’ll focus on team structure, team dynamics and responsibilities. To successfully execute your target operating model (TOM), different parts and teams within your organization need to be able to collaborate.

Prior to joining Databricks, I worked in consulting and delivered AI projects across industries and in a wide variety of technology stacks, from cloud native to open source. While the underlying technologies differed, the roles involved in developing and running these applications were roughly the same. Notice that I speak of roles and not of individuals; one person within a team can take on multiple roles depending on the size and complexity of the work at hand.

A platform that lets teams and people in different roles, such as data engineers, data scientists and analysts, work together with the same tools, speak the same technical language and integrate their work products is essential to achieving a positive Return on Data Assets (RODA).

When building the right team to execute on your operating model for AI, it’s key to take the following elements into account:

- Maturity of your data foundation: Whether your data is still in silos, stuck in proprietary formats or difficult to access in a unified way will have big implications for the amount of data engineering work and data platform expertise that is required.

- Infrastructure and platform administration: Whether you maintain the infrastructure yourself or leverage ‘as-a-service’ offerings can greatly impact your overall team composition. Moreover, if your data platform is made up of multiple services and components, the administrative burden of governing and securing data and users and keeping all parts working together can be overwhelming, especially at enterprise scale.

- MLOps: To make the most of AI, you need to apply it to impact your business. Hiring a full data science team without the right ML engineering expertise or tools to package, test, deploy and monitor models is extremely wasteful. Several steps go into running effective end-to-end AI applications, and your operating model should reflect that in the roles involved and in the way model lifecycle management is executed, from use case identification through development and deployment to (perhaps most importantly) utilization.

These three dimensions inform your focus and the roles that should be part of your development team. Over time, the prevalence of certain roles might shift as your organization matures along these dimensions and as your platform decisions evolve.

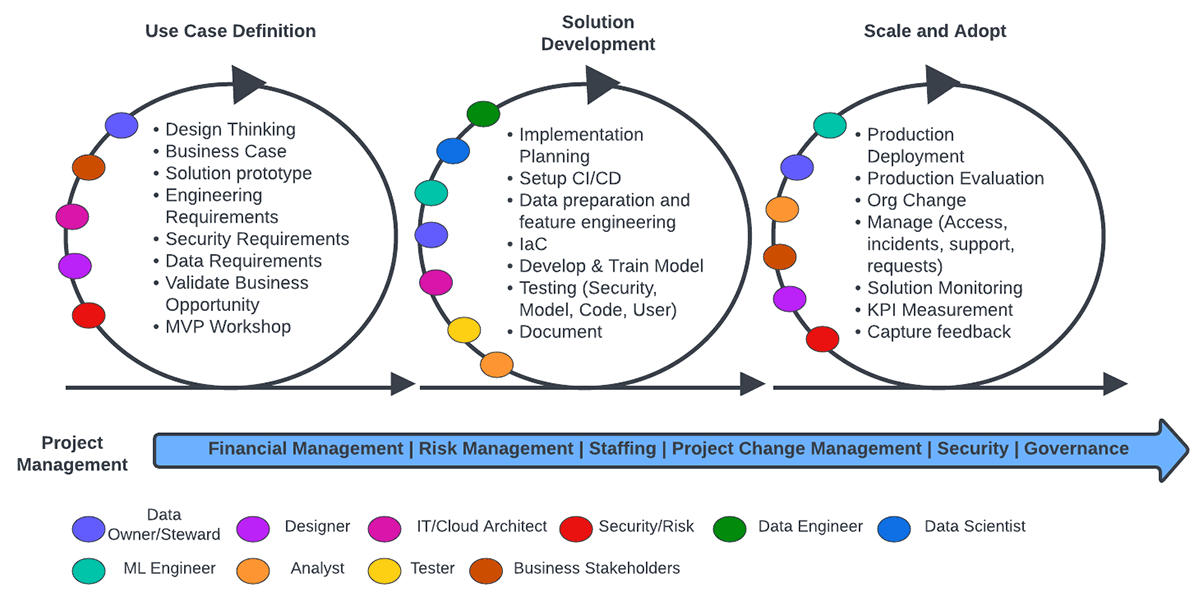

Because the development of data and AI applications is a highly iterative process, it is critical that the accompanying processes enable teams to work closely together and reduce friction when handovers are made. The diagram below illustrates the end-to-end flow of what your operating model may look like and the roles and responsibilities of the various teams.

Above, we see three core stages that are part of an iterative end-to-end operating pipeline, both within and across loops. Each stage benefits from a combination of roles to extract the most value from it. Additionally, there is an ongoing project management function that enacts the operational flow and ensures that the right resources and processes are available for each team to execute across the three stages. Let’s walk through each of these stages.

- Use Case Definition: When defining your project’s use case, it is important to work with business stakeholders to align data and technical capabilities with business objectives. A critical step here is identifying the data requirements, so having data owners participate is important to inform the feasibility of the use case. It is equally important to understand whether the data platform can support the use case, something that platform owners and architects need to validate. The other elements highlighted at this stage are geared towards ensuring the usability of the desired solution, both in terms of security and user experience.

- Solution Development: This stage focuses primarily on technical development. This is where the core ML/AI development cycle, driven by the data engineering, data science and ML engineering teams, takes place, along with all the ancillary steps and elements needed to test, validate and package the solution. This stage represents the inner loop of MLOps, where the onus is on experimentation. Data owners and architects remain important at this stage to provide the core development team with the right source materials and tools.

- Scale and Adopt: In a business context, an ML/AI application is only useful if it can be used to affect the business positively; therefore, business stakeholders need to be intimately involved. The main goal at this stage is to develop and operate the right mechanisms and processes to enable end users to consume and utilize the application outputs. And because business is not static, continuous monitoring of performance and KPIs and the implementation of feedback loops back to the development and data teams are fundamental at this stage.

This operating process is just one example; specific implementations will depend on the structure of your organization. Various configurations, from centralized to Center of Excellence (CoE) to federated, are certainly possible, but the principles described by the flow above will remain applicable regarding roles and responsibilities.

Conclusion

Developing data and AI projects and applications requires a diverse team and a diverse set of roles. Moreover, new organizational paradigms centered around data intensify the need for an AI operating model that can effectively support the new roles within a data-forward organization.

Finally, it’s worth highlighting once more that a (multi-cloud) platform is a huge asset when it helps simplify and consolidate the whole gamut of infrastructure, data and tooling requirements, supports the business processes that must run on top of it, and at the same time facilitates clear reporting, monitoring and KPI tracking. Such a platform allows diverse, cross-functional teams to work together more effectively, accelerating time to production and fostering innovation.

If you want to learn more about the principles and how to design your operating model for Data and AI, you can check out Part 1 of this blog series.
