Introduction

Text generation has witnessed significant advancements in recent years, thanks to state-of-the-art language models like GPT-2 (Generative Pre-trained Transformer 2). These models have demonstrated remarkable capabilities in generating human-like text based on given prompts. However, balancing creativity and coherence in the generated text remains challenging. In this article, we delve into text generation using GPT-2, exploring its principles, practical implementation, and the tuning of parameters to control the generated output. We’ll provide code examples for text generation with GPT-2 and discuss real-world applications, shedding light on how this technology can be harnessed effectively.

Learning Objectives

- The learners should be able to explain the foundational concepts of GPT-2, including its architecture, pre-training process, and autoregressive text generation.

- The learners should be proficient in fine-tuning GPT-2 for specific text generation tasks and controlling its output by adjusting parameters such as temperature, max_length, and top-k sampling.

- The learners should be able to identify and describe real-world applications of GPT-2 in various fields, such as creative writing, chatbots, virtual assistants, and data augmentation in natural language processing.

This article was published as a part of the Data Science Blogathon.

Understanding GPT-2

GPT-2, short for Generative Pre-trained Transformer 2, introduced a revolutionary approach to natural language understanding and text generation by combining pre-training on a vast corpus of internet text with transfer learning. This section delves deeper into these innovations and explains how they empower GPT-2 to excel in various language-related tasks.

Pre-training and Transfer Learning

One of GPT-2’s key innovations is pre-training on a massive corpus of internet text. This pre-training equips the model with general linguistic knowledge, allowing it to understand grammar, syntax, and semantics across various topics. This model can then be fine-tuned for specific tasks.

Research Reference: “Improving Language Understanding by Generative Pre-Training” by Radford et al. (2018)

Pre-training on Massive Text Corpora

- The Corpus of Internet Text

GPT-2’s journey begins with pre-training on a massive and diverse corpus of Internet text. This corpus comprises vast text data from the World Wide Web, encompassing various subjects, languages, and writing styles. This data’s sheer scale and diversity provide GPT-2 with a treasure trove of linguistic patterns, structures, and nuances.

- Equipping GPT-2 with Linguistic Knowledge

During the pre-training phase, GPT-2 learns to discern and internalize the underlying principles of language. It becomes proficient in recognizing grammatical rules, syntactic structures, and semantic relationships. By processing an extensive range of textual content, the model gains a deep understanding of the intricacies of human language.

- Contextual Learning

GPT-2’s pre-training involves contextual learning, analyzing words and phrases in the context of the surrounding text. This contextual understanding is a hallmark of its ability to generate contextually relevant and coherent text. It can infer meaning from the interplay of words within a sentence or document.

From Transformer Architecture to GPT-2

GPT-2 is built upon the Transformer architecture, which has revolutionized various natural language processing tasks. This architecture relies on self-attention mechanisms, enabling the model to weigh the importance of different words in a sentence relative to each other. The Transformer’s success laid the foundation for GPT-2.

Research Reference: “Attention Is All You Need” by Vaswani et al. (2017)
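To make the idea of "weighing the importance of different words" concrete, here is a minimal sketch of scaled dot-product self-attention with a causal mask, written in plain Python. It is an illustration under simplifying assumptions, not GPT-2's actual implementation: the real model derives queries, keys, and values from learned projections and uses many attention heads, whereas this sketch feeds the same made-up toy vectors in for all three. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i only sees positions <= i."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the current query against every key up to and including position i.
        scores = [
            sum(qd * kd for qd, kd in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the visible value vectors.
        out = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-dimensional vectors for a three-token sequence (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
for position, row in enumerate(weights):
    print(position, [round(w, 3) for w in row])
```

Each printed row holds the attention weights for one position. The first token can only attend to itself, while later tokens spread their attention over everything that came before them.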

How Does GPT-2 Work?

At its core, GPT-2 is an autoregressive model. It predicts the next token (a word or word piece) in a sequence based on the preceding tokens. This prediction process continues iteratively until the desired length of text is generated. At each step, GPT-2 uses a softmax function to turn its scores into a probability distribution over the vocabulary for the next token.
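The loop described above can be sketched without any neural network at all. In the example below, a hand-written scoring table stands in for the model; the vocabulary, the scores, and the helper names are all hypothetical. Unlike GPT-2, which conditions on the entire preceding context, this toy "model" looks only at the last token, and it always picks the most probable token (greedy decoding) rather than sampling.

```python
import math


def softmax(logits):
    """Convert raw scores (logits) into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical five-token vocabulary and a hand-written scoring table.
VOCAB = ["the", "cat", "sat", "down", "<eos>"]
TOY_LOGITS = {
    "the": [0.1, 3.0, 0.5, 0.2, 0.1],
    "cat": [0.1, 0.2, 3.0, 0.5, 0.3],
    "sat": [0.2, 0.1, 0.1, 3.0, 0.5],
    "down": [0.1, 0.1, 0.1, 0.1, 3.0],
}


def toy_next_token_logits(tokens):
    """Stand-in for the network: scores depend only on the last token."""
    return TOY_LOGITS[tokens[-1]]


def generate_greedy(prompt_tokens, max_length=10):
    """Append the most probable next token until <eos> or max_length is reached."""
    tokens = list(prompt_tokens)
    while len(tokens) < max_length:
        probs = softmax(toy_next_token_logits(tokens))
        next_token = VOCAB[probs.index(max(probs))]
        if next_token == "<eos>":
            break
        tokens.append(next_token)
    return tokens


result = generate_greedy(["the"])
print(result)  # ['the', 'cat', 'sat', 'down']
```

Every pass through the loop feeds the sequence generated so far back into the scoring function, which is what "autoregressive" means in practice.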

Code Implementation

Setting Up the Environment

Before diving into GPT-2 text generation, it’s essential to set up your Python environment and install the required libraries:

Note: If ‘transformers’ is not already installed, use: !pip install transformers

import torch

from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Loading pre-trained GPT-2 mannequin and tokenizer

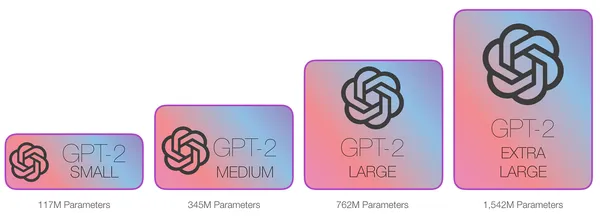

model_name = "gpt2" # Mannequin dimension could be switched accordingly (e.g., "gpt2-medium")

tokenizer = GPT2Tokenizer.from_pretrained(model_name)

mannequin = GPT2LMHeadModel.from_pretrained(model_name)

# Set the mannequin to analysis mode

mannequin.eval()

Producing Textual content with GPT-2

Now, let’s outline a operate to generate textual content primarily based on a given immediate:

def generate_text(immediate, max_length=100, temperature=0.8, top_k=50):

input_ids = tokenizer.encode(immediate, return_tensors="pt")

output = mannequin.generate(

input_ids,

max_length=max_length,

temperature=temperature,

top_k=top_k,

pad_token_id=tokenizer.eos_token_id,

do_sample=True

)

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)

return generated_text

Applications and Use Cases

Creative Writing

GPT-2 has found applications in creative writing. Authors and content creators use it to generate ideas, plotlines, and even entire stories. The generated text can serve as inspiration or a starting point for further refinement.

Chatbots and Virtual Assistants

Chatbots and virtual assistants benefit from GPT-2’s natural language generation capabilities. They can provide more engaging and contextually relevant responses to user queries, enhancing the user experience.

Data Augmentation

GPT-2 can be used for data augmentation in data science and natural language processing tasks. Generating additional text data helps improve the performance of machine learning models, especially when training data is limited.
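As a rough sketch of what such an augmentation step might look like, the helper below takes a list of seed texts and any text-generating function and returns the seeds plus de-duplicated generated variants. The names augment and fake_generate and the sample sentences are hypothetical. The stand-in generator exists only so the sketch runs without downloading a model; in practice you would pass in a function that calls GPT-2.

```python
import itertools


def augment(seed_texts, generate_fn, variants_per_seed=3):
    """Return the seed texts plus de-duplicated generated variants."""
    augmented = list(seed_texts)
    seen = set(seed_texts)
    for seed in seed_texts:
        for _ in range(variants_per_seed):
            candidate = generate_fn(seed)
            # Skip exact duplicates so the augmented set adds new examples only.
            if candidate not in seen:
                seen.add(candidate)
                augmented.append(candidate)
    return augmented


# Stand-in generator (hypothetical) that numbers each variant it produces.
_counter = itertools.count(1)


def fake_generate(prompt):
    return f"{prompt} (variant {next(_counter)})"


seeds = ["The service was slow", "Great battery life"]
dataset = augment(seeds, fake_generate, variants_per_seed=2)
print(len(dataset))  # 6
```

Generated examples are only as good as the model that produced them, so reviewing or filtering them before training is usually worthwhile.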

Fine-Tuning for Control

While GPT-2 generates impressive text, adjusting its generation parameters is essential to control the output. These are decoding settings, as opposed to retraining the model’s weights. Here are key parameters to consider:

- Max Length: This parameter limits the length of the generated text. Setting it appropriately prevents excessively long responses. In the generate call used in this article, max_length is measured in tokens and includes the tokens of the prompt.

- Temperature: Temperature controls the randomness of the generated text. Higher values (e.g., 1.0) make the output more random, while lower values (e.g., 0.7) make it more focused.

- Top-k Sampling: Top-k sampling restricts each sampling step to the k most probable next tokens, which tends to make the text more coherent.
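The effect of temperature and top-k can be seen on a toy example. The sketch below is a simplified illustration in plain Python, not the library's internal implementation, and the function name and logit values are made up. It keeps the k highest-scoring tokens, divides their scores by the temperature, and samples from the resulting distribution.

```python
import math
import random


def sample_next_token(logits, temperature=0.8, top_k=50, rng=random):
    """Sample one token index after top-k filtering and temperature scaling."""
    # Keep only the indices of the top_k highest-scoring tokens.
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    kept = ranked[:top_k]
    # Divide by the temperature: values below 1 sharpen the distribution,
    # values above 1 flatten it. The temperature must be greater than zero.
    scaled = [logits[i] / temperature for i in kept]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    # random.choices normalizes the weights into probabilities for us.
    return rng.choices(kept, weights=weights, k=1)[0]


toy_logits = [2.0, 1.0, 0.5, -1.0, -3.0]  # made-up scores for a 5-token vocabulary
rng = random.Random(0)
samples = [
    sample_next_token(toy_logits, temperature=0.7, top_k=2, rng=rng)
    for _ in range(200)
]
print(sorted(set(samples)))
```

With top_k=2, only the two highest-scoring token indices can ever be drawn, however many samples are taken. Setting top_k=1 reduces sampling to always choosing the single most probable token.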

Adjusting Parameters for Management

To generate more controlled text, experiment with different parameter settings. For example, to create a coherent and informative response, you might use:

# Example prompt
prompt = "Once upon a time"
generated_text = generate_text(prompt, max_length=40)

# Print the generated text
print(generated_text)

Output (sampling is random, so your result will differ): Once upon a time, the city had been transformed into a fortress, complete with its secret vault containing some of the most important secrets in the world. It was this vault that the Emperor ordered his

Note: Adjust the maximum length based on the application.

Conclusion

In this article, you learned that GPT-2 is a powerful language model whose text generation can be harnessed for various applications. We’ve delved into its underlying principles, provided code examples, and discussed real-world use cases.

Key Takeaways

- GPT-2 is a state-of-the-art language model that generates text based on given prompts.

- Tuning parameters like max length, temperature, and top-k sampling allows control over the generated text.

- Applications of GPT-2 range from creative writing to chatbots and data augmentation.

Frequently Asked Questions

A. GPT-2 is a larger and more powerful model than GPT-1, capable of generating more coherent and contextually relevant text.

A. Fine-tune GPT-2 on domain-specific data to make it more contextually aware and useful for specific applications.

A. Ethical considerations include ensuring that generated content is not misleading, offensive, or harmful. Reviewing and curating the generated text to align with ethical guidelines is essential.

A. Yes, there are various language models, including GPT-3, BERT, and XLNet, each with its own strengths and use cases.

A. Evaluation metrics such as BLEU score, ROUGE score, and human evaluation can assess the quality and relevance of generated text for specific tasks.
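To give a feel for how an overlap metric such as BLEU works, here is a minimal sketch of its simplest ingredient, clipped unigram precision. This is not a full BLEU implementation, which also combines higher-order n-gram precisions and a brevity penalty; the function name and the example sentences are made up for illustration.

```python
from collections import Counter


def clipped_unigram_precision(candidate, reference):
    """Fraction of candidate words that also appear in the reference.

    Each word is credited at most as many times as it occurs in the reference.
    """
    cand_tokens = candidate.lower().split()
    if not cand_tokens:
        return 0.0
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(cand_tokens)
    matched = sum(min(count, ref_counts[tok]) for tok, count in cand_counts.items())
    return matched / len(cand_tokens)


score = clipped_unigram_precision(
    "the cat sat on the mat",  # generated text
    "the cat is on the mat",   # reference text
)
print(round(score, 3))  # 0.833
```

Five of the six generated words are matched in the reference, giving a precision of 5/6. The clipping step prevents a degenerate output that repeats one common word from scoring well.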

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
