
This blog post is in collaboration with Greg Rokita, AVP of Technology at Edmunds.

We have finally arrived at a moment long envisioned as a key milestone in computing: machines can seemingly understand us and respond in our own natural language. While it should be clear to everyone that large language models (LLMs) only give the appearance of intelligence, their ability to engage us on a wide range of topics in an informed, authoritative, and at times creative manner is poised to drive a revolution in the way we work.

Estimates from McKinsey and others are that by 2030, tasks currently consuming 60 to 70% of employees' time could be automated using these and other generative AI technologies. This is driving many organizations, including Edmunds, to explore ways to integrate these capabilities into internal processes as well as customer-facing products, to reap the benefits of early adoption.

In pursuit of this, we recently sat down with the folks at Databricks, leaders in the data & AI space, to explore lessons learned from early attempts at LLM adoption, past cycles surrounding emerging technologies, and experiences with companies with demonstrated track records of sustained innovation.

Edmunds is a trusted car shopping resource and leading provider of used vehicle listings in the United States. Edmunds' website offers real-time pricing and deal ratings on new and used vehicles to provide car shoppers with the most accurate and transparent information possible. We are constantly innovating, and we have multiple forums and conferences focused on emerging technologies, such as Edmunds' recent LLMotive conference.

From this conversation, we've identified four mandates that inform our go-forward efforts in this space.

Embrace Experimentation

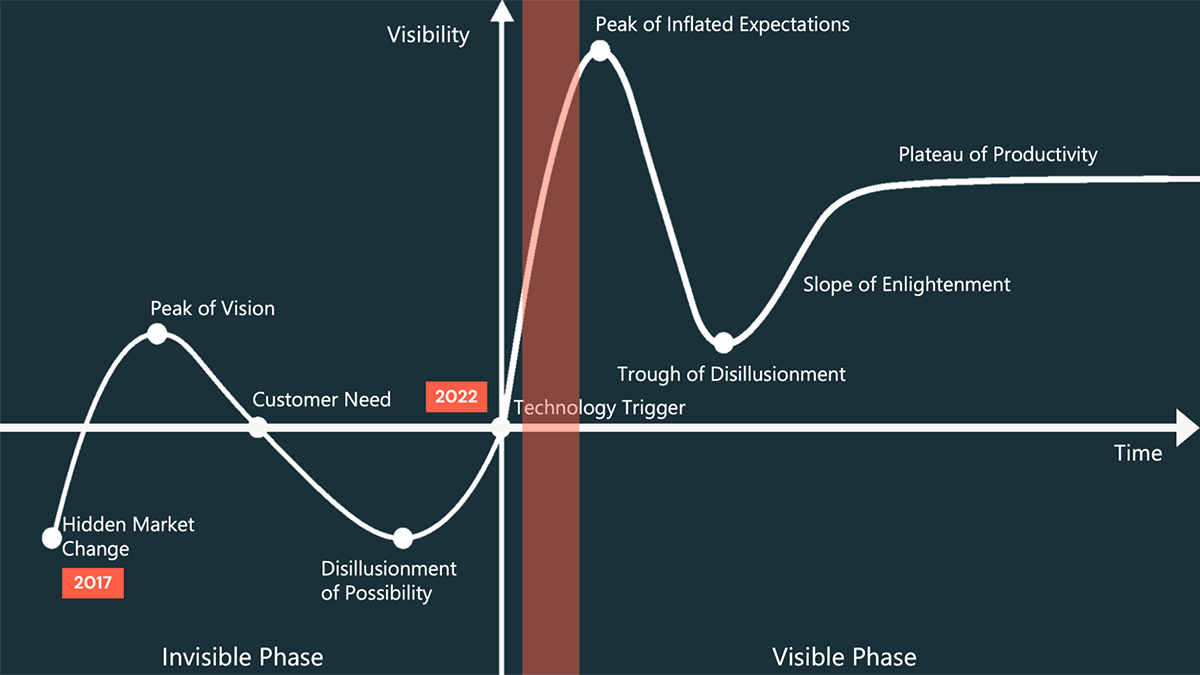

It's easy at this moment to forget just how new large language models (LLMs) are. The transformer architecture, on which these models are based, was introduced in 2017 and remained largely off the mainstream radar until November 2022, when OpenAI stunned the world with ChatGPT. Since then, we've seen a steady stream of innovation from both tech companies and the open source community. But it's still early days in the LLM space.

As with every new technology, there is a period following mainstream awareness where excitement over the potential of the technology outpaces its reality. This pattern is captured neatly in the classic hype cycle (Figure 1): we know we are headed towards the Peak of Inflated Expectations, followed by a crash into the Trough of Disillusionment, within which frustrations over organizations' abilities to meet those expectations force many to pause their efforts with the technology. It's hard to say exactly where we are in the hype cycle, but given we are not even one year out from the release of ChatGPT, it feels safe to say we have not yet hit the peak.

Given this, organizations attempting to use the technology should expect rapid evolution, a lot of rough edges, and outright feature gaps. In order to deliver operational solutions, these innovators and early adopters must be committed to overcoming these challenges on their own and with the support of technical partners and the open source community. Companies are best positioned to do this when they embrace such technology as either central to their business model or central to the accomplishment of a compelling business vision.

But sheer talent and will do not guarantee success. Organizations embracing early stage technologies such as these also recognize that it's not just the path to their destination that's unknown; the destination itself may not exist exactly in the manner initially envisioned. Rapid, interactive experimentation is required to better understand this technology in its current state and the feasibility of applying it to particular needs. Failure, an ugly word in many organizations, is embraced if that failure was arrived at quickly and efficiently and generated knowledge and insights that inform the next iteration and the many other experimentation cycles underway within the enterprise. With the right mindset, innovators and early adopters can develop a deep knowledge of these technologies and deliver robust solutions ahead of their competition, giving them an early advantage over others who might prefer to wait for the technology to mature.

Edmunds has created an LLM incubator to test and develop large language models (LLMs) from third-party and internal sources. The incubator's goal is to explore capabilities and develop innovative business models, not specifically to launch a product. In addition to developing and demonstrating capabilities, the incubator also focuses on acquiring knowledge. Our engineers are able to learn more about the inner workings of LLMs and how they can be used to solve real-world problems.

Preserve Optionality

Continuing with the theme of technology maturity, it's worth noting an interesting pattern that occurs as a technology passes through the Trough of Disillusionment. As explained in depth in Geoffrey A. Moore's classic book, Crossing the Chasm, many of the organizations that bring a particular technology to market in the early stages of its development struggle to transition into long-term mainstream adoption. It's at the trough that many of these companies are acquired, merge, or simply fade away because of this difficulty, a pattern we see again and again.

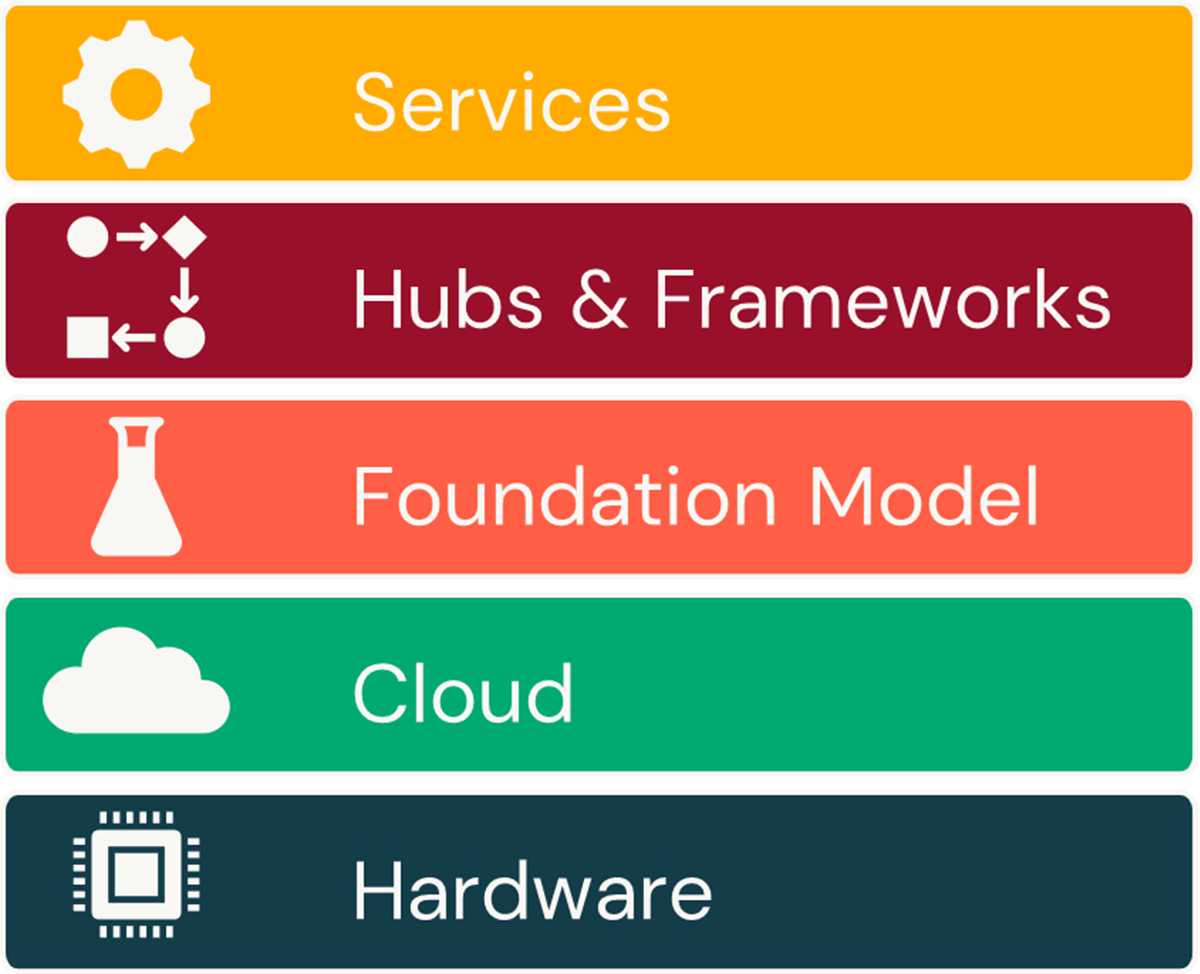

If we apply this thinking to the LLM space in its entirety (Figure 2), we can see the seeds for much of this turmoil already being sown. While NVIDIA has a strong grip on the GPU market (GPUs being a critical hardware resource for most LLM training efforts), organizations such as MosaicML are already showing ways in which these models can be trained on much lower cost AMD devices. In the cloud, the big three, i.e. AWS, Azure and GCP, have embraced LLM technologies as a vehicle for growth, but seemingly out of nowhere, the Oracle Cloud has entered into the conversation. And while OpenAI was first out of the gate with ChatGPT, closely followed by various Google offerings, there has been an explosion in foundation models from the open source community that challenge their long-term position in the market. This last point deserves a bit more examination.

In May 2023, a leaked memo from Google titled We Have No Moat, And Neither Does OpenAI highlighted the rapid and stunning advancements being made by the open source community in catching up with OpenAI, Google and other big tech companies who made early entrances into this space. Since the release of the original academic paper that launched the transformer movement, there has always been a small open source community actively working to build LLMs. But these efforts were turbo-charged in February 2023 when Meta (formerly Facebook) opened up their LLaMA model to this community. Within one month, researchers at Stanford showed they could create a model capable of closely imitating the capabilities of ChatGPT. Within a month of that, Databricks released Dolly 1.0, showing they could create an even smaller, simpler model capable of running on more commodity infrastructure and achieving similar results. Since then, the open source community has only snowballed in terms of the speed and breadth of innovation in this space.

All of this is to say that LLMs and the entire ecosystem surrounding them are in flux. For many organizations, there is a desire to pick the winning technology in a given space and build their solutions on it. But given the state of the market, it's impossible to say at this time exactly who the winners and losers will be. Smart organizations will recognize the fluidity of the market and keep their options open until we pass through the eventual shakeout that comes with passing through the trough, and at that time select those components and services that best align with their objectives around return on investment and total cost of ownership. But at this moment, time to market is the guide.

But what about the debt that comes with choosing the wrong technology? In advocating for choosing the best technology at the moment, we are seeking to avoid analysis paralysis, but we fully recognize that organizations will make some technology choices they will later regret. As members of organizations with long histories of early innovation, we find the best guidance at this stage is to adhere to design patterns such as the use of abstractions and decoupled architectures and to embrace agile CI/CD processes that allow organizations to rapidly iterate solutions with minimal disruption. As new technologies emerge that offer compelling advantages, these can be used to displace previously selected components with less effort and impact on the organization. It's not a perfect solution, but getting a solution out the door requires decisions to be made in the face of an immense amount of uncertainty that will not be resolved for quite some time.
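To make the idea of abstraction and decoupling concrete, here is a minimal Python sketch. It is an illustration under our own assumptions, not a description of any production system: the `TextGenerator` interface, the two stand-in model classes, and `summarize_listing` are all hypothetical names, and the stand-ins return placeholder strings rather than calling a real model.

```python
from dataclasses import dataclass
from typing import Protocol


class TextGenerator(Protocol):
    """Minimal interface the application depends on (hypothetical)."""

    def generate(self, prompt: str) -> str:
        ...


@dataclass
class HostedModel:
    """Stand-in for a third-party, API-hosted LLM (hypothetical)."""
    name: str

    def generate(self, prompt: str) -> str:
        # A real implementation would call the vendor's API here.
        return f"[{self.name}] {prompt}"


@dataclass
class OpenSourceModel:
    """Stand-in for a self-hosted open source LLM (hypothetical)."""
    name: str

    def generate(self, prompt: str) -> str:
        # A real implementation would run local inference here.
        return f"[{self.name}] {prompt}"


def summarize_listing(model: TextGenerator, listing: str) -> str:
    """Application logic written against the interface, not a vendor."""
    return model.generate(f"Summarize this vehicle listing: {listing}")


# Swapping the underlying component is a one-line change at the call site.
first = summarize_listing(HostedModel("vendor-a"), "2021 sedan, 30k miles")
second = summarize_listing(OpenSourceModel("in-house"), "2021 sedan, 30k miles")
```

Because `summarize_listing` only knows about the interface, a previously selected model can be displaced later without touching the application logic.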

At Edmunds, we understand that the field of AI is constantly evolving, so we don't rely on a single approach to success. We offer access to a variety of third-party LLMs, while also investing in making open-source models operational and tailored to the automotive vertical. This approach gives us flexibility in terms of cost-efficiency, security, privacy, ownership, and control of LLMs.

Innovate at the Core

If we are comfortable with the technology and we have the right mindset and technology approach to building LLM applications, which applications should we build? Instead of using risk avoidance as the governing strategy for deciding when and where to use these technologies, we focus on the potential for value generation in both the short and the long term.

This means we need to start with an understanding of what we do today and where we could do these things smarter. This is what we call innovation at the core. Core innovations are not glamorous, but they are impactful. They help us improve the efficiency, scalability, reliability and consistency of what we do. We have existing business stakeholders who are not only invested in these improvements but also able to assess the impact of what we deliver. We also have the surrounding processes required to put these into production and monitor their impact on an ongoing basis.

The core innovations are important because they give us the ability to have an impact while we learn these new technologies. They also help us establish trust with the business and evolve the processes that allow us to bring ideas into production. The momentum we build with these core innovations gives us the capability and credibility to take bigger risks, to move into new areas that are related to our core capabilities but that extend and enhance what we do today. We refer to these as the adjacent innovations. As we begin to demonstrate success with adjacent innovations, we again build momentum, not only with the technology but with our stakeholders, to take on even bigger and even less certain innovations that have the potential to truly transform our business.

There are many in the digital transformation community who advocate against this more incremental approach. Their concern that efforts that are too small are unlikely to yield the kinds of results that lead to true transformation is legitimate. The flip side, which we have witnessed again and again, is that technology efforts not grounded in prior successes that included the support of the business struggle to achieve operationalization. Moving from core to adjacent to transformative levels of innovation does not need to be a slow process, but it does need to move at a pace at which both the technical and business sides of any solution can keep up with one another.

We believe at Edmunds that semi-autonomous AI agents will become critical to every business in the long term. While short-term trends in the generative AI space are uncertain, we are focused on building core capabilities in the automotive vertical. This will allow Edmunds to spin off efforts into more tactical and scope-limited use cases. However, we are taking a holistic and strategic approach to AI, with the goal of being in pole position if the AI revolution drastically forces changes in the business models of data aggregators and curators. Based on many lessons from history, we are not afraid to disrupt ourselves in order to maintain leadership in our space.

Establish a Data Foundation

As we examine everything required to build an LLM application, the one component often overlooked is the unstructured information assets within the organization around which most solutions are to be based. While pre-trained models provide a foundational understanding of a language, it's through exposure to an organization's information assets that these models become capable of speaking in a manner that's consistent with an organization's needs.

But while we've been advocating for years for the better organization and management of this information, much of that dialog has been focused on the explosive growth of unstructured content and the cost implications of attempting to store it. Lost in this conversation is any thought about how we identify new and modified content, move it into an analytics environment, and assess it for sensitivity, appropriate use and quality prior to use in an application.

That's because up until this moment, there were very few compelling reasons to consider these assets as high-value analytic resources. As a result, one Deloitte survey found that only 18% of organizations were leveraging unstructured data for any kind of analytic function, and it seems highly unlikely that many of those were considering systematic means of managing these data for broader analytic needs. Consequently, we have seen more instances than not of organizations identifying compelling uses for LLM technology and then wrestling to acquire the assets needed to begin development, let alone sustain the effort as those assets expand and evolve over time.

While we don't have an exact solution to this problem, we think it's time that organizations begin identifying where the highest value information assets required by LLM applications likely reside and begin exploring the creation of frameworks to move these into an analytics lakehouse architecture. Unlike the ETL frameworks developed in decades past, these frameworks will need to recognize that these assets are created outside of tightly governed and monitored operational solutions, and they will need to find ways to keep up with new information without interfering with the business processes dependent upon their creation.
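One simple way to picture the "identify new and modified content" step is to compare content hashes against a manifest saved from the previous run. The sketch below is only an illustration of that idea under our own assumptions: the function names, the in-memory dictionaries, and the example paths are hypothetical, and a real framework would read from document stores and persist the manifest somewhere durable.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return a stable content hash for a document."""
    return hashlib.sha256(content).hexdigest()


def detect_changes(documents: dict[str, bytes], manifest: dict[str, str]):
    """Compare current documents against hashes from the previous run.

    documents: path -> current content
    manifest:  path -> fingerprint recorded previously
    Returns (new_paths, modified_paths, updated_manifest).
    """
    new, modified, updated = [], [], {}
    for path, content in sorted(documents.items()):
        digest = fingerprint(content)
        updated[path] = digest
        if path not in manifest:
            new.append(path)
        elif manifest[path] != digest:
            modified.append(path)
    return new, modified, updated


# Hypothetical example: one document changed, one is brand new.
previous = {"policies/returns.txt": fingerprint(b"v1")}
current = {"policies/returns.txt": b"v2", "faq/pricing.txt": b"v1"}
new_docs, changed_docs, manifest = detect_changes(current, previous)
```

Because the check only reads the documents, it does not interfere with the business processes that create them; only the new and changed paths need to be moved into the analytics environment.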

These frameworks will also need to recognize the particular security and governance requirements associated with these documents. While high-level policies might define a range of appropriate uses for a set of documents, information within individual documents may need to be redacted in some scenarios but possibly not others before they can be employed. Concerns over the accuracy of information in these assets should also be considered, especially where documents are not frequently reviewed and authorized by gatekeepers in the organization.
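As a rough sketch of scenario-dependent redaction, the Python below applies different redaction rules to the same document depending on how it will be used. Everything here is an assumption made for illustration: the two regular expressions are deliberately simplistic, and the scenario names and rule mapping are hypothetical placeholders for whatever an organization's governance policies actually require.

```python
import re

# Hypothetical patterns for sensitive values; real rules would come from
# an organization's governance policies and be far more thorough.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}

# Hypothetical mapping from usage scenario to the fields that must be hidden.
SCENARIO_RULES = {
    "internal_analytics": [],
    "customer_chatbot": ["email", "phone"],
}


def redact(text: str, scenario: str) -> str:
    """Replace each sensitive field required by the scenario with a marker."""
    for field in SCENARIO_RULES[scenario]:
        text = PATTERNS[field].sub(f"[{field.upper()} REDACTED]", text)
    return text


note = "Contact Pat at pat@example.com or 555-123-4567."
public = redact(note, "customer_chatbot")
internal = redact(note, "internal_analytics")
```

The same source document yields a redacted copy for the customer-facing scenario and an untouched copy for the internal one, which is the kind of per-use distinction a blanket document-level policy cannot express.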

The complexity of the challenges in this space, not to mention the immense volume of data in play, will require new approaches to information management. But for organizations willing to wade into these deep waters, there's the potential to create a foundation likely to accelerate the implementation of a wide variety of LLM applications for years to come.

A data-centric approach is at the heart of Edmunds' AI efforts. By working with Databricks, our teams at Edmunds are able to offload non-business-specific tasks to focus on data repurposing and automotive-specific model creation. Edmunds' close collaboration with Databricks on a variety of products ensures that our initiatives are aligned with new product and feature releases. This is crucial in light of the rapidly evolving landscape of AI models, frameworks, and approaches. We are confident that a data-centric approach will enable us to reduce bias, increase efficiency, cut costs in our AI efforts, and create accurate, industry-leading models and AI agents.
