
As part of MLflow 2’s support for LLMOps, we’re excited to introduce the latest updates to support prompt engineering in MLflow 2.7.

Assess LLM project viability with an interactive prompt interface

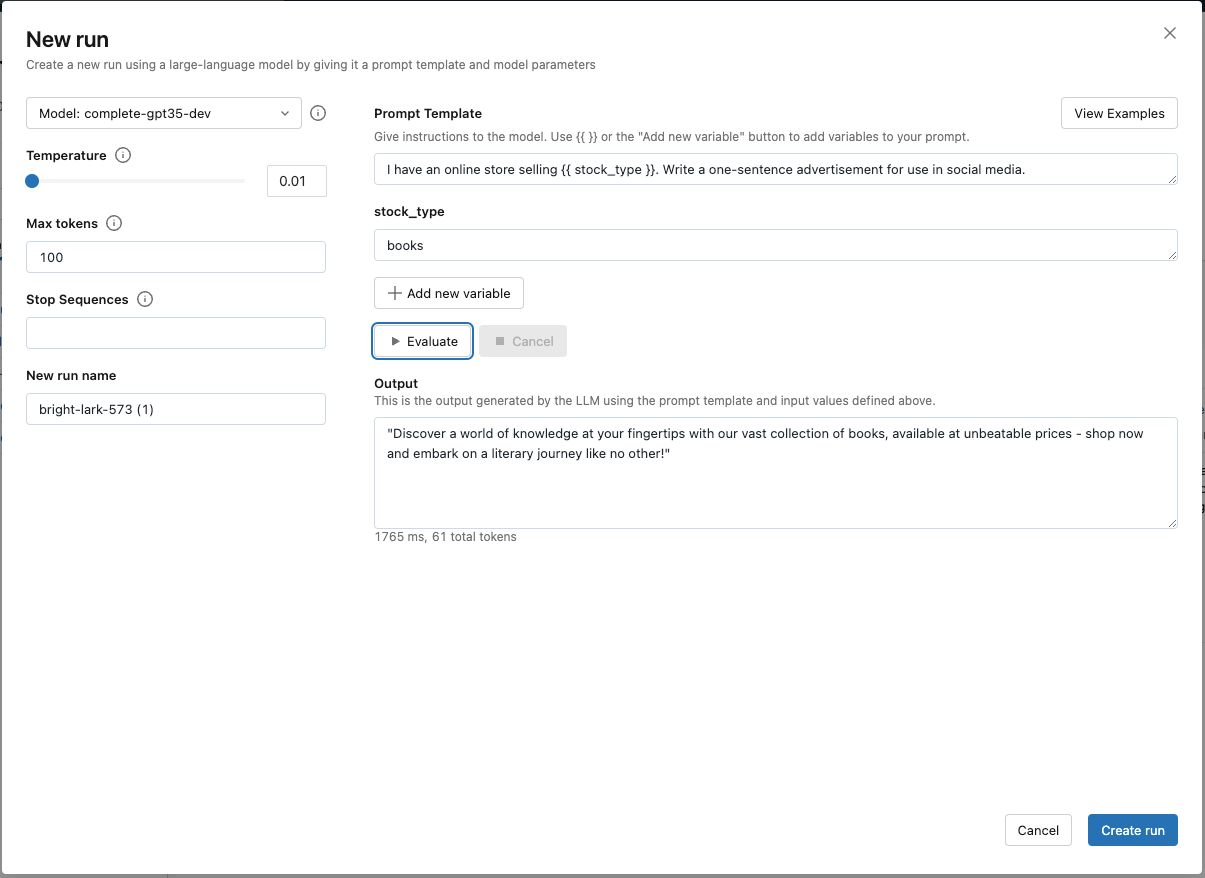

Prompt engineering is a great way to quickly assess whether a use case can be solved with a large language model (LLM). With the new prompt engineering UI in MLflow 2.7, business stakeholders can experiment with various base models, parameters, and prompts to see if outputs are promising enough to start a new project. Simply create a new Blank Experiment (or open an existing one) and click “New Run” to access the interactive prompt engineering tool. Sign up to join the preview here.

Automatically track prompt engineering experiments to build evaluation datasets and identify the best model candidates

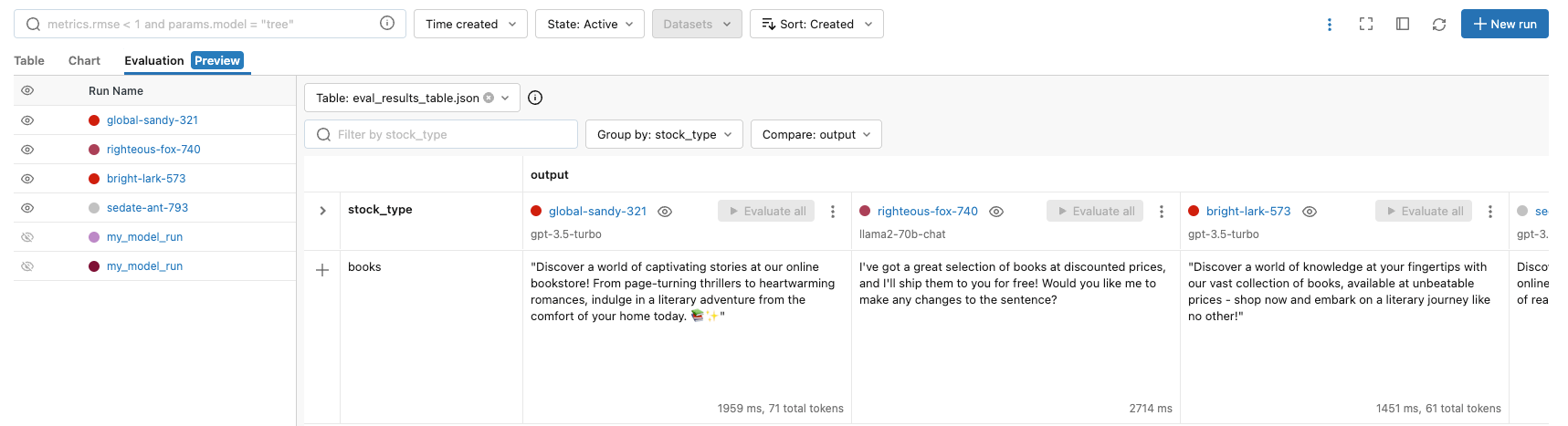

In the new prompt engineering UI, users can explicitly track an evaluation run by clicking “Create run” to log results to MLflow. This button tracks the set of parameters, base models, and prompts as an MLflow model, and the outputs are stored in an evaluation table. This table can then be used for manual evaluation, converted to a Delta table for deeper analysis in SQL, or used as the test dataset in a CI/CD process.
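As a rough illustration of the CI/CD idea, the sketch below treats an exported evaluation table as a regression dataset. It uses only the Python standard library and is not MLflow's API: the CSV layout, the column names `question` and `expected_keyword`, and the helpers `load_eval_rows`, `run_regression`, and `stub_model` are all hypothetical.

```python
import csv
import io

# Hypothetical export of an evaluation table; the column names are assumptions.
EVAL_TABLE_CSV = """question,expected_keyword
What is MLflow?,MLflow
What is a Delta table?,Delta
"""


def load_eval_rows(csv_text):
    """Parse the exported table into a list of dicts, one per row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def run_regression(rows, generate):
    """Return the questions whose generated output lacks the expected keyword."""
    failures = []
    for row in rows:
        output = generate(row["question"])
        if row["expected_keyword"].lower() not in output.lower():
            failures.append(row["question"])
    return failures


def stub_model(question):
    # Stand-in for a real model call; it only echoes the question back.
    return f"Answer about: {question}"


rows = load_eval_rows(EVAL_TABLE_CSV)
failures = run_regression(rows, stub_model)
```

In a pipeline, a non-empty `failures` list would fail the build. A keyword check is a deliberately crude pass criterion; a real setup would likely substitute a proper metric.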

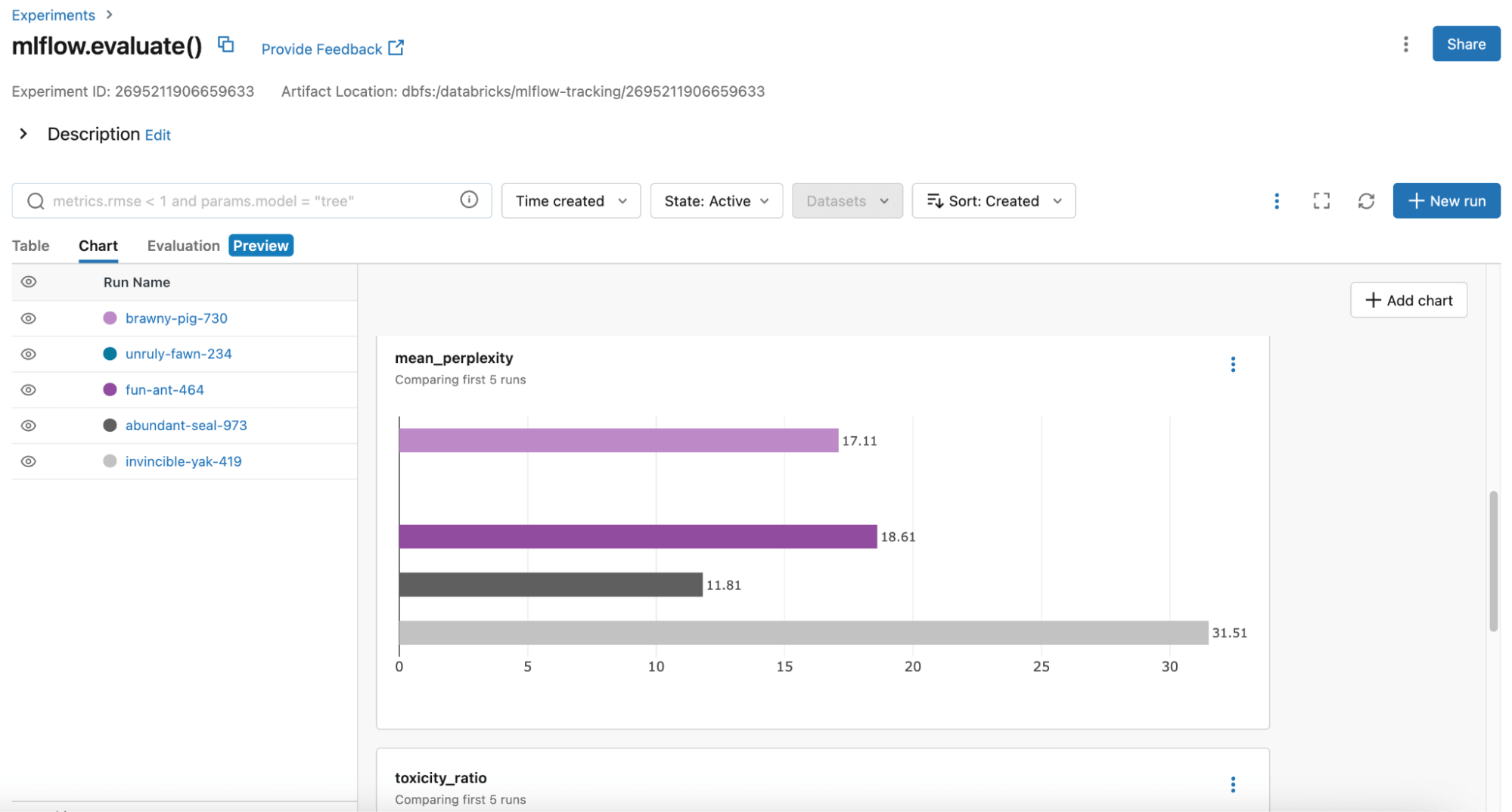

MLflow is always adding more metrics to the MLflow Evaluation API to help you identify the best model candidate for production, now including toxicity and perplexity. Users can use the MLflow Table or Chart view to compare model performance.

Because the set of parameters, prompts, and base models is logged as an MLflow model, you can deploy a fixed prompt template for a base model with set parameters in batch inference, or serve it as an API with Databricks Model Serving. For LangChain users this is especially useful, as MLflow comes with model versioning.
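To make "a fixed prompt template for a base model with set parameters" concrete, here is a minimal standard-library sketch of such a bundle. It does not reflect how MLflow stores or serves the logged model: the class `PromptConfig`, its fields, and the request shape are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptConfig:
    """Hypothetical bundle of base model, prompt template, and parameters."""

    base_model: str
    template: str
    parameters: dict = field(default_factory=dict)

    def render(self, **variables):
        # Fill the template's named placeholders with the caller's values.
        return self.template.format(**variables)

    def to_request(self, **variables):
        # Combine the fixed settings with the rendered prompt into one payload.
        return {
            "model": self.base_model,
            "prompt": self.render(**variables),
            **self.parameters,
        }


config = PromptConfig(
    base_model="gpt-3.5-turbo",
    template="Summarize the following text in one sentence:\n{text}",
    parameters={"temperature": 0.1, "max_tokens": 100},
)
request = config.to_request(text="MLflow 2.7 adds a prompt engineering UI.")
```

Because the template and parameters are frozen together, every batch or serving call uses exactly the configuration that was evaluated; only the input variables change.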

Democratize ad-hoc experimentation across your organization with guardrails

The MLflow prompt engineering UI works with any MLflow AI Gateway route. AI Gateway routes allow your organization to centralize governance and policies for SaaS LLMs; for example, you can put OpenAI’s GPT-3.5-turbo behind a Gateway route that manages which users can query the route, provides secure credential management, and enforces rate limits. This protects against abuse and gives platform teams the confidence to democratize access to LLMs across their org for experimentation.
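The two guardrails named above, a user allow list and a rate limit, can be pictured with a small conceptual sketch. This is not how the AI Gateway is implemented or configured; the class `RouteGuard`, its parameters, and the sliding-window policy are assumptions made purely for illustration.

```python
import time


class RouteGuard:
    """Hypothetical per-route guard: user allow list plus sliding-window rate limit."""

    def __init__(self, allowed_users, max_calls, window_seconds, clock=time.monotonic):
        self.allowed_users = set(allowed_users)
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the guard can be tested without sleeping
        self._calls = {}  # user -> timestamps of recent accepted calls

    def check(self, user):
        """Return True if the user may query the route right now."""
        if user not in self.allowed_users:
            return False
        now = self.clock()
        # Keep only the calls that still fall inside the window.
        recent = [t for t in self._calls.get(user, []) if now - t < self.window_seconds]
        if len(recent) >= self.max_calls:
            self._calls[user] = recent
            return False
        recent.append(now)
        self._calls[user] = recent
        return True


guard = RouteGuard(allowed_users={"alice"}, max_calls=2, window_seconds=60)
first_call_allowed = guard.check("alice")
stranger_allowed = guard.check("bob")
```

Centralizing this check at the route, rather than in each client, is what lets a platform team change limits or revoke access in one place.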

The MLflow AI Gateway supports OpenAI, Cohere, Anthropic, and Databricks Model Serving endpoints. However, with general-purpose open source LLMs becoming more and more competitive with proprietary ones, your organization may want to quickly evaluate and experiment with these open source models. You can now also call MosaicML’s hosted Llama2-70b-chat.

Try MLflow today for your LLM development!

We’re working quickly to support and standardize the most common workflows for LLM development in MLflow. Check out this demo notebook to see how to use MLflow for your use cases. For more resources:

- Sign up for the MLflow AI Gateway Preview (includes the prompt engineering UI) here.

- To get started with the prompt engineering UI, simply upgrade your MLflow version (`pip install --upgrade mlflow`), create an MLflow Experiment, and click “New Run”.

- To evaluate various models on the same set of questions, use the MLflow Evaluation API.

- If there’s a SaaS LLM endpoint you want to see supported in the MLflow AI Gateway, follow the contribution guidelines in the MLflow repository. We love contributions!
