
Adopting AI is existentially important for many companies

Machine learning (ML) and generative AI (GenAI) are revolutionizing the future of work. Organizations understand that AI helps drive innovation, maintain competitiveness, and improve the productivity of their employees. Organizations also understand that their data provides a competitive advantage for their AI applications. Leveraging these technologies brings opportunities but also risks: embracing them without proper safeguards can result in significant loss of intellectual property and reputation.

Customers we talk to frequently cite risks such as data loss, data poisoning, model theft, and compliance and regulatory challenges. Chief Information Security Officers (CISOs) are under pressure to adapt to business needs while mitigating these risks swiftly. If CISOs say no to the business, they’re perceived as not being team players and not putting the business first. If they say yes to something risky, they’re perceived as careless. CISOs not only need to keep up with the business’s appetite for growth, diversification, and experimentation, but also have to keep up with the explosion of technologies promising to revolutionize their business.

Part 1 of this blog series discusses the security risks CISOs need to know about as their organization evaluates, deploys, and adopts enterprise AI applications.

Your data platform should be an expert on AI security

At Databricks, we believe data and AI are your most precious non-human assets, and that the winners in every industry will be data and AI companies. That’s why security is embedded in the Databricks Data Intelligence Platform. The Databricks Data Intelligence Platform enables your entire organization to use data and AI. It’s built on a lakehouse to provide an open, unified foundation for all data and governance, and it is powered by a Data Intelligence Engine that understands the uniqueness of your data.

Our Databricks Security team works with thousands of customers to securely deploy AI and machine learning on Databricks with the appropriate security features to meet their architecture requirements. We’re also working with dozens of experts inside Databricks and in the broader ML and GenAI community to identify security risks to AI systems and define the controls needed to mitigate those risks. We have reviewed numerous AI and ML risk frameworks, standards, recommendations, and guidance. As a result, we have robust AI security guidelines to help CISOs and security teams understand how to deploy their organizations’ ML and AI applications securely. Before discussing the risks to ML and AI applications, however, let’s walk through the components of an AI and ML system used to manage data, build models, and serve applications.

Understanding the core components of an AI system for effective security

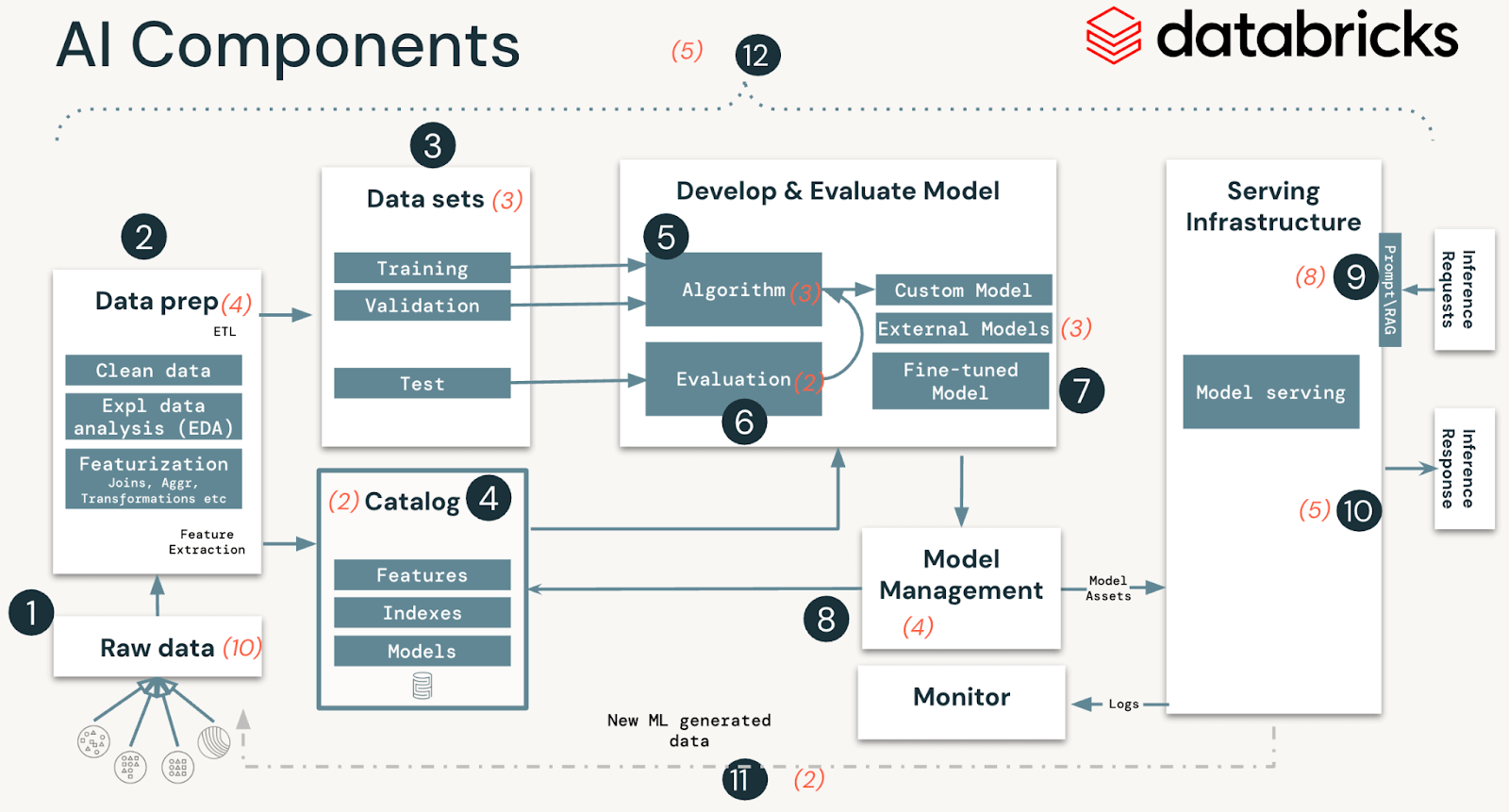

AI and ML systems are composed of data, code, and models. A typical system for such a solution has 12 foundational architecture components, broadly grouped into four major stages:

- Data operations consist of ingesting and transforming data and ensuring data security and governance. Good ML models depend on reliable data pipelines and secure infrastructure.

- Model operations include building custom models, acquiring models from a model marketplace, or using SaaS LLMs (like OpenAI). Developing a model requires a series of experiments and a way to track and compare the conditions and results of those experiments.

- Model deployment and serving consists of securely building model images, isolating and securely serving models, automated scaling, rate limiting, and monitoring deployed models.

- Operations and platform include platform vulnerability management and patching, model isolation and system controls, and authorized access to models with security built into the architecture. This stage also includes operational tooling for CI/CD. It ensures the complete lifecycle meets the required standards by keeping the execution environments (development, staging, and production) separate for secure MLOps.

MLOps is a set of processes and automated steps for managing an AI and ML system’s code, data, and models. MLOps should be combined with security operations (SecOps) practices to secure the entire ML lifecycle. This includes protecting the data used to train and test models, the deployed models, and the infrastructure they run on from malicious attacks.

Top security risks of AI and ML systems

Technical Security Risks

In our analysis of AI and ML systems, we identified 51 technical security risks across the 12 components. In the table below, we map these components to the stages found in any AI and ML system and highlight the types of security risks our team identified:

| System stage | System components (Figure 1) | Potential security risks |
|---|---|---|
| Data operations | | 19 specific risks |
| Model operations | | 12 specific risks |
| Model deployment and serving | | 13 specific risks |
| Operations and platform | | 7 specific risks |

Databricks has mitigation controls for all of the risks outlined above. In a future blog and whitepaper, we will walk through the complete list of risks, along with our guidance on the associated controls and out-of-the-box capabilities such as Databricks Delta Lake, Databricks Managed MLflow, Unity Catalog, and Model Serving that you can use to mitigate them.

Organizational Security Risks

In addition to the technical risks, our discussions with CISOs have highlighted the need to address four organizational risk areas to ease the path to AI and ML adoption. These are key to aligning the security function with the needs, pace, outcomes, and risk appetite of the business it serves.

- Talent: Security organizations can lag behind the rest of the organization in adopting new technologies. CISOs need to understand which roles in their talent pool should upskill in AI and implement MLSecOps across departments.

- Operating model: Identifying the key stakeholders, champions, and enterprise-wide processes for securely deploying new AI use cases is key to assessing risk levels and finding the right path to deploying applications.

- Change management: Identifying obstacles to change, communicating expectations, and overcoming inertia with an organizational change management framework such as ADKAR helps organizations adopt new processes with minimal disruption.

- Decision support: Tying technical work to business outcomes, so that technical efforts and business use cases can be prioritized, is key to arriving at a single set of organization-wide priorities.

Mitigate AI security risks effectively with our CISO-driven workshop

CISOs are instinctive risk assessors. However, this superpower fails most CISOs when it comes to AI. The primary reason is that CISOs lack a simple mental model of an AI and ML system that they can readily visualize to synthesize assets, threats, impacts, and controls.

To help with this, the Databricks Security team has designed a new machine learning and generative AI security workshop for CISOs and security leaders. We are further developing the content of these workshops into a framework for managing AI security, so that CISOs can apply their instinctive risk-assessment superpower to AI in an operable and effective way.

As a sneak peek, here’s the top-line approach we recommend for managing the technical security risks of ML and AI applications at scale:

- Identify the ML Business Use Case: Make sure the use case you are trying to secure is well defined and has clear stakeholders, whether it is already implemented or still in the planning stages.

- Determine the ML Deployment Model: Choose an appropriate model (e.g., custom, SaaS LLM, RAG, or fine-tuned) to determine how shared responsibilities, especially for securing each component, are split across the 12 ML/GenAI components between your organization and any partners involved.

- Select the Most Pertinent Risks: From our documented list of 51 ML risks, pinpoint those most relevant to your organization based on the deployment model chosen in step 2.

- Enumerate Threats for Each Risk: Identify the specific threats linked to each risk and the ML/GenAI component each threat targets.

- Choose and Implement Controls: Select controls that align with your organization’s risk appetite. These controls are defined generically so they are compatible with any data platform. Our framework also provides guidelines for tailoring these controls specifically to the Databricks environment.

- Operationalize Controls: Determine control owners, many of whom you might inherit from your data platform provider. Ensure controls are implemented, monitored for effectiveness, and regularly reviewed. Adjustments may be needed as business use cases, deployment models, or threat landscapes evolve.
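The six steps above can be sketched as a minimal risk register. The Python below is a hypothetical illustration, not part of the Databricks framework: the class names, fields, methods, and example entries are all assumptions. Only the four stage names, the deployment model options, and the two risks used in the example (data poisoning and model theft, both mentioned earlier in this post) come from the text. The sketch records a use case and its deployment model, attaches the selected risks with their threats and controls, and reports which risks still lack a control, which controls each owner is responsible for, and which controls are inherited from the platform provider.

```python
from dataclasses import dataclass, field

# The four system stages from the table above.
STAGES = (
    "Data operations",
    "Model operations",
    "Model deployment and serving",
    "Operations and platform",
)


@dataclass
class Control:
    """Step 5 (hypothetical structure): a control matched to the risk appetite."""
    name: str
    owner: str               # step 6: who operates, monitors, and reviews it
    inherited: bool = False  # True if the data platform provider owns it


@dataclass
class Risk:
    """Step 3 (hypothetical structure): a risk selected from the documented list."""
    name: str
    stage: str
    threats: list[str] = field(default_factory=list)       # step 4
    controls: list[Control] = field(default_factory=list)  # step 5

    def __post_init__(self) -> None:
        # Reject stages that are not one of the four system stages.
        if self.stage not in STAGES:
            raise ValueError(f"unknown stage: {self.stage!r}")


@dataclass
class UseCase:
    """Steps 1 and 2 (hypothetical structure): use case and deployment model."""
    name: str
    stakeholders: list[str]
    deployment_model: str  # e.g. "custom", "SaaS LLM", "RAG", "fine-tuned"
    risks: list[Risk] = field(default_factory=list)

    def uncontrolled_risks(self) -> list[str]:
        """Names of selected risks that have no control assigned yet."""
        return [r.name for r in self.risks if not r.controls]

    def controls_by_owner(self) -> dict[str, list[str]]:
        """Group control names by owner for ongoing monitoring and review."""
        owners: dict[str, list[str]] = {}
        for risk in self.risks:
            for control in risk.controls:
                owners.setdefault(control.owner, []).append(control.name)
        return owners

    def inherited_controls(self) -> list[str]:
        """Names of controls owned by the data platform provider."""
        return [c.name for r in self.risks for c in r.controls if c.inherited]


# Hypothetical example: a RAG chatbot with two selected risks.
chatbot = UseCase(
    name="support chatbot",
    stakeholders=["customer support", "security"],
    deployment_model="RAG",
)
poisoning = Risk(
    name="data poisoning",
    stage="Data operations",
    threats=["tampered documents in the retrieval corpus"],
)
poisoning.controls.append(
    Control("restrict write access to source data",
            owner="data platform team", inherited=True)
)
theft = Risk(name="model theft", stage="Model deployment and serving")
chatbot.risks.extend([poisoning, theft])

print(chatbot.uncontrolled_risks())  # ['model theft']
print(chatbot.controls_by_owner())
print(chatbot.inherited_controls())
```

Keeping risks, threats, and controls in one structure means the periodic review in the last step can be as simple as re-running queries like `uncontrolled_risks()` after each change to the use case or deployment model.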

Learn more and connect with our team

If you’re interested in participating in one of our CISO workshops or reviewing our upcoming Databricks AI Security Framework whitepaper, contact [email protected].

If you are curious about how Databricks approaches security, please visit our Security and Trust Center.
