[ad_1]

Introduction

Object Localization refers back to the activity of exactly figuring out and localizing objects of curiosity inside a picture. It performs a vital function in laptop imaginative and prescient functions, enabling duties like object detection, monitoring, and segmentation. Within the context of CNN-based localizers, object localization entails coaching a convolutional neural community (CNN) to foretell the coordinates of bounding bins that tightly enclose the objects inside a picture.

The localization course of sometimes follows a two-step pipeline, with a spine CNN extracting picture options and a regression head predicting the bounding field coordinates.

Studying Aims

- To grasp the fundamentals of Convolutional Neural Networks (CNNs).

- Clarify CNN structure for localization fashions.

- Implement localizer structure utilizing a pre-trained CNN mannequin for localization.

This text was printed as part of the Knowledge Science Blogathon.

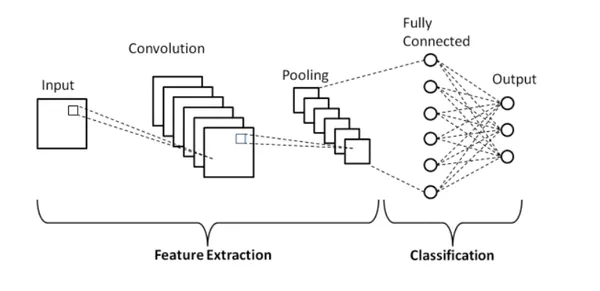

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a category of deep studying fashions used for picture evaluation.

Their structure consists of an enter layer that takes within the picture knowledge, adopted by convolutional layers that study and extract options utilizing convolutional filters. Activation capabilities introduce non-linearities whereas pooling layers scale back spatial dimensions. Absolutely related layers on the finish make closing predictions.

CNNs study hierarchical options, beginning with low-level options like edges and progressing to advanced and summary options like shapes and object compositions.

Throughout the coaching part of a CNN, the community learns to acknowledge and extract completely different ranges of options mechanically. The preliminary layers seize low-level options equivalent to edges, corners, and textures, whereas deeper layers study extra advanced and summary options like shapes, object components, and object compositions. The hierarchical construction of a CNN permits it to study representations which are more and more invariant to variations in translation, scale, rotation, and different picture transformations.

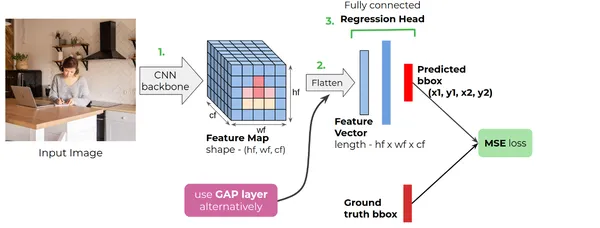

CNN-based Localizer Structure

The CNN-based localizer mannequin for object localization consists of three elements:

1. CNN Spine

Incorporating the Energy of SQL: Selecting a Normal CNN Structure (equivalent to ResNet 18, ResNet 50, VGG, and so on.) for Finetuning Pre-trained Fashions on Imagenet Classification Duties. Enhancing the Spine Community with Further CNN Layers for Function Map Dimension Discount

2. Vectorizer

The output of the CNN spine is a 3D tensor. However the resultant output of the Localizer is a 1D vector with 4 values corresponding to every coordinate for the bounding field. To transform the 3D tensor right into a vector, we make use of a vectorizer or make the most of a Flatten layer in its place strategy.

3. Regression Head

We assemble a completely related regression head particularly for this activity. After that, the function vector, obtained from the spine, is fed to the regression head. The regression head consists of 4 nodes on the finish similar to the (x1, y1, x2, y2) or every other equal bounding field representations.

Understanding the Mannequin Structure Higher

The determine reveals a typical CNN-based localizer mannequin structure. In brief, the CNN spine takes in an RGB picture, then generates a function map. We then use a flattened layer or a World Common Pooling layer to kind a 1-dimensional function vector. The totally related regression head takes within the function vector and provides predictions.

The CNN community maintains a hard and fast measurement for the enter picture, and we make use of a Flatten layer to transform the function map acquired from the CNN spine right into a vector. Nonetheless, when adaptive layers like GAP (World Common Pooling) are utilized, there isn’t a requirement to resize the picture.

Coaching the Localizer

Import Essential Libraries

import ast

import math

import os

import cv2

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

from functools import partial

from tensorflow.knowledge import Dataset

from tensorflow.keras.functions import ResNet50

from tensorflow.keras import layers, losses, fashions, optimizers, utils Constructing the Parts

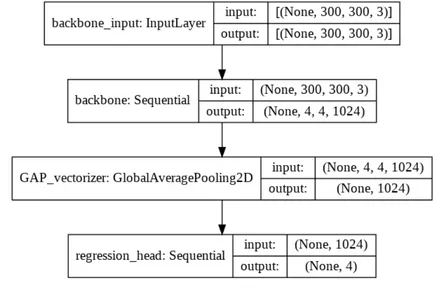

The structure takes an enter picture of measurement 300×300 with 3 coloration channels.

- The spine processes the picture and extracts high-level options.

- The vectorizer then computes a fixed-length vector illustration of those options.

- Lastly, the regression head takes this vector and performs regression, outputting a four-dimensional vector as the ultimate prediction.

IMG_SHAPE = (300, 300)

spine = fashions.Sequential([

ResNet50(include_top=False,

weights="imagenet",

input_shape=IMG_SHAPE + (3,)),

layers.Conv2D(1024, 3, 2, activation='relu'),

], title="spine" )

vectorizer = layers.GlobalAveragePooling2D(title="GAP_vectorizer")

regression_head = fashions.Sequential([

layers.Dense(512, activation='relu'),

layers.Dense(4)

], title="regression_head")

Constructing the Mannequin

It defines a whole mannequin by combining the beforehand outlined elements: the spine, the vectorizer, and the regression head.

bbox_regressor = fashions.Sequential([

backbone,

vectorizer,

regression_head

])

bbox_regressor.abstract()

utils.plot_model(bbox_regressor, "localizer.png", show_shapes=True)

Obtain the Dataset

We’re utilizing a selfies dataset. The Selfie dataset comprises 46,836 selfie photographs. We generate bounding bins for faces utilizing Haar Cascades. A CSV file is out there which consists of a picture path and bounding field coordinates for about 22K photographs.

The dataset is out there at:

https://www.crcv.ucf.edu/knowledge/Selfie/Selfie-dataset.tar.gz

Producing Knowledge Batches

DataGenerator class is liable for loading and preprocessing current knowledge for the localization activity.

- It takes a picture listing and a CSV file with picture paths and bounding field info as enter.

- The category divides the info into coaching and testing subsets primarily based on the offered fractions.

- Throughout era, the category preprocesses every picture by resizing it, changing coloration channels, and normalizing pixel values.

- Bounding field coordinates are additionally regular.

The generator yields the preprocessed picture and corresponding bounding field for every knowledge pattern.

class DataGenerator(object):

def __init__(self, img_dir, _csv_path, train_max=0.8, test_min=0.9, target_shape=(300, 300)):

for okay, v in locals().objects():

if okay != "self" and never okay.startswith("_"):

setattr(self, okay, v)

self.df = pd.read_csv(_csv_path)

def __len__(self):

return len(self.df)

def generate(self, part):

assert part in [None, 'train', 'test']

_df = self.divide_data(part)

for rel_img_path, bbox in _df.values:

img, bbox = self.preprocess_data(rel_img_path, bbox)

img = tf.fixed(img, dtype=tf.float32)

bbox = tf.fixed(bbox, dtype=tf.float32)

yield img, bbox

def preprocess_data(self, rel_img_path, bbox):

bbox = np.array(ast.literal_eval(bbox))

img_path = os.path.be part of(self.img_dir, rel_img_path)

img = cv2.imread(img_path)

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

_h, _w, _ = img.form

img = cv2.resize(img, self.target_shape)

img = img.astype(np.float32) / 127.0 - 1

bbox = bbox / np.array([_w, _h, _w, _h])

return img, bbox # np.expand_dims(bbox, 0)

def divide_data(self, part):

train_max = int(self.train_max * len(self.df))

_df = None

if part is None:

_df = self.df

elif part == 'practice':

_df = self.df.iloc[:train_max, :].pattern(frac=1)

else:

_df = self.df.iloc[train_max:, :]

return _df Loading and Creating the Dataset

This makes use of the DataGenerator class to create coaching and testing datasets utilizing TensorFlow’s Dataset API.

- It creates coaching and testing datasets utilizing TensorFlow’s Dataset API.

- We generate the coaching dataset by invoking the ‘generate’ methodology of the DataGenerator occasion, specifying the ‘practice’ part.

- Generate the testing dataset with the ‘take a look at’ part.

- Each datasets are shuffled and batched with a batch measurement of 16.

The ensuing train_dataset and test_dataset are TensorFlow Dataset objects, prepared for additional processing or coaching of a mannequin.

IMG_DIR = 'Selfie-dataset/photographs'

CSV_PATH = '3-lv1-8-4-selfies_dataset.csv'

BATCH_SIZE = 16

dataset_generator = DataGenerator(IMG_DIR, CSV_PATH)

train_max = int(len(dataset_generator) * 0.9)

train_dataset = Dataset.from_generator(partial(dataset_generator.generate,

part="practice"), output_types=(tf.float32, tf.float32),

output_shapes = (IMG_SHAPE + (3,), (4,)))

train_dataset = train_dataset.shuffle(buffer_size=2 * BATCH_SIZE).batch(BATCH_SIZE)

test_dataset = Dataset.from_generator(partial(dataset_generator.generate,

part="take a look at"),output_types=(tf.float32, tf.float32),

output_shapes = (IMG_SHAPE + (3,), (4,)))

test_dataset = test_dataset.shuffle(buffer_size=2 * BATCH_SIZE).batch(BATCH_SIZE)

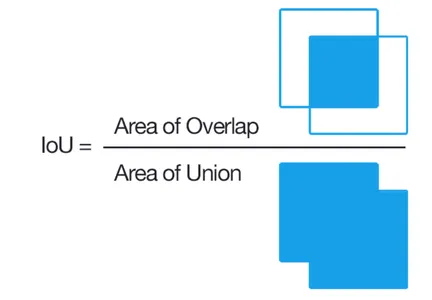

Loss Perform and Efficiency Metric

A number of loss capabilities for regression can be utilized to coach a bounding field localizer. The regression loss capabilities like MSE, and Easy L1 are used in a similar way as within the case of different regression duties and are utilized between the bottom reality bounding field vector and predicted bounding field vector.

Intersection over Union (IoU) is a typical efficiency metric utilized in bounding field regression.

The operate defines a set of capabilities for calculating the Intersection over Union (IoU) and evaluating the efficiency of a mannequin’s predictions. It gives the means to calculate IoU, consider predictions when it comes to loss and IoU, and assign the analysis criterion to a variable.

def cal_IoU(b1, b2):

zero = tf.convert_to_tensor(0., b1.dtype)

b1_x1, b1_y1, b1_x2, b1_y2 = tf.unstack(b1, 4, axis=-1)

b2_x1, b2_y1, b2_x2, b2_y2 = tf.unstack(b2, 4, axis=-1)

b1_width = tf.most(zero, b1_x2 - b1_x1)

b1_height = tf.most(zero, b1_y2 - b1_y1)

b2_width = tf.most(zero, b2_x2 - b2_x1)

b2_height = tf.most(zero, b2_y2 - b2_y1)

b1_area = b1_width * b1_height

b2_area = b2_width * b2_height

intersect_x1 = tf.most(b1_x1, b2_x1)

intersect_y1 = tf.most(b1_y1, b2_y1)

intersect_y2 = tf.minimal(b1_y2, b2_y2)

intersect_x2 = tf.minimal(b1_x2, b2_x2)

intersect_width = tf.most(zero, intersect_x2 - intersect_x1)

intersect_height = tf.most(zero, intersect_y2 - intersect_y1)

intersect_area = intersect_width * intersect_height

union_area = b1_area + b2_area - intersect_area

iou = tf.math.divide_no_nan(intersect_area, union_area)

return iou

def calculate_iou(y_true, y_pred):

y_pred = tf.convert_to_tensor(y_pred)

y_pred = tf.forged(y_pred, tf.float32)

y_true = tf.forged(y_true, y_pred.dtype)

iou = cal_IoU(y_pred, y_true)

return iou

def consider(precise, pred):

iou = calculate_iou(precise, pred)

loss = losses.MSE(precise, pred)

return loss, iou

criteron = considerOptimizer and Studying Fee Scheduler

We use an exponential decay studying fee for scheduling studying charges and an Adam optimizer for optimization.

EPOCHS = 10

LEARNING_RATE = 0.0003

lr_scheduler = optimizers.schedules.ExponentialDecay(LEARNING_RATE, 3600, 0.8)

optimizer = optimizers.Adam(learning_rate=lr_scheduler)

os.makedirs('checkpoints', exist_ok=True)

Coaching Loop

It implements a coaching loop that runs for a specified variety of epochs.

- Inside every epoch, the loop iterates via the batches of the coaching dataset.

- It performs ahead propagation to acquire predicted bounding field coordinates, calculates the loss and IoU values, applies backpropagation to replace the mannequin’s weights, and data the coaching metrics.

- After every epoch, the common coaching loss and IoU are computed.

The mannequin is saved on the finish of every epoch.

for epoch in vary(EPOCHS):

train_losses, train_ious = np.array([]), np.array([])

for step, (inputs, labels) in enumerate(train_dataset):

with tf.GradientTape() as tape:

preds = bbox_regressor(inputs, coaching=True)

loss, iou = criteron(labels, preds)

grads = tape.gradient(loss, bbox_regressor.trainable_weights)

optimizer.apply_gradients(zip(grads, bbox_regressor.trainable_weights))

loss_value = tf.math.reduce_mean(loss).numpy()

train_losses = np.hstack([train_losses, loss_value])

iou_value = tf.math.reduce_mean(iou).numpy()

train_ious = np.hstack([train_ious, iou_value])

print('Coaching Loss : %f'%(step + 1, math.ceil(train_max / BATCH_SIZE),

loss_value), finish='')

tr_lss, tr_iou = np.imply(train_losses), np.imply(train_ious)

print('Prepare loss : %f -- Prepare Common IOU : %f' % (epoch, EPOCHS,

tr_lss, tr_iou))

print()

save_path="./fashions/checkpointpercentd.h5" % (epoch)

bbox_regressor.save(save_path)Predictions

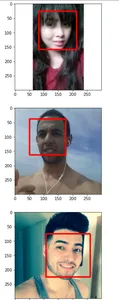

We visualize the bounding bins predicted by the Bbox regressor for some photographs within the take a look at set by drawing the bounding bins within the photographs.

for inputs, labels in test_dataset:

bbox_preds = bbox_regressor(inputs, coaching=False).numpy()

bbox_preds = (bbox_preds * (dataset_generator.target_shape * 2)).astype(int)

imgs = (127 * (inputs + 1)).numpy().astype(np.uint8)

for idx, img in enumerate(imgs):

x1, y1, x2, y2 = bbox_preds[idx]

img = cv2.rectangle(img, (x1, y1), (x2, y2), (255, 0, 0), 4)

plt.imshow(img)

plt.present()

breakOutput

Conclusion

In conclusion, CNN-based localizers are instrumental in advancing laptop imaginative and prescient functions, significantly in object localization duties. The article highlighted the significance of CNNs in picture evaluation and defined the two-step pipeline, involving a spine CNN for function extraction and a regression head for predicting bounding field coordinates. The way forward for object localization holds immense potential with developments in deep studying methods, bigger datasets, and integration of different modalities, promising vital impacts on industries and remodeling visible notion and understanding.

Key Takeaways

- CNN-based localizers are important for advancing laptop imaginative and prescient functions, leveraging CNNs’ capability to study hierarchical options from photographs.

- The 2-step pipeline, consisting of a function extraction spine CNN and a regression head, is usually utilized in CNN-based localizers to realize correct object localization.

- The way forward for object localization holds nice promise with developments in deep studying, bigger datasets, and the combination of different modalities, providing vital impacts on industries equivalent to autonomous driving, robotics, surveillance, and healthcare.

The media proven on this article just isn’t owned by Analytics Vidhya and is used on the Creator’s discretion.

Associated

[ad_2]