
Introduction

We live in an age where large language models (LLMs) are on the rise. One of the first things that comes to mind these days when we hear "LLM" is OpenAI’s ChatGPT. Did you know that ChatGPT is not exactly an LLM but an application that runs on LLMs such as GPT-3.5 and GPT-4? We can develop AI applications very quickly by prompting an LLM. However, there is a limitation: an application may need to prompt the LLM multiple times, which means writing the same glue code again and again. LangChain makes this limitation easy to overcome.

This article is about LangChain and its applications. I assume you have a fair understanding of ChatGPT as an application. For more details about LLMs and the basic principles of generative AI, you can refer to my earlier article on prompt engineering in generative AI.

Learning Objectives

- Getting to know the basics of the LangChain framework.

- Knowing why LangChain is faster.

- Comprehending the essential components of LangChain.

- Understanding how to apply LangChain in prompt engineering.

This article was published as a part of the Data Science Blogathon.

What is LangChain?

LangChain, created by Harrison Chase, is an open-source framework for developing applications powered by a language model. It is available as two packages, one for Python and one for JavaScript (TypeScript), with a focus on composition and modularity.

Why Use LangChain?

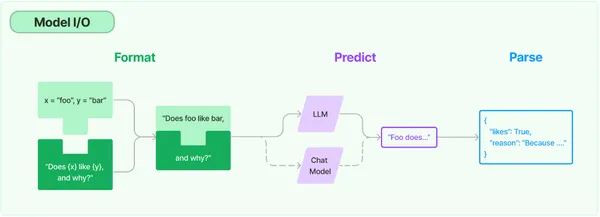

When we use ChatGPT, the application makes direct calls to the OpenAI API internally. API calls through LangChain are made using components such as prompts, models, and output parsers. LangChain simplifies the difficult task of working and building with AI models. It does this in two ways:

- Integration: External data such as files, API data, and other applications is brought to LLMs.

- Agency: Facilitates interaction between LLMs and their environment through decision-making.

Through components, customized chains, speed, and community, LangChain helps avoid friction points while building complex LLM-based applications.

Components of LangChain

There are three main components of LangChain.

- Language models: Common interfaces are used to call language models. LangChain provides integration for the following types of models:

i) LLM: Here, the model takes a text string as input and returns a text string.

ii) Chat models: Here, the model takes a list of chat messages as input and returns a chat message. A language model backs these types of models.

- Prompts: Help in building templates and enable dynamic selection and management of model inputs. A prompt is a set of instructions a user passes to guide the model in producing a consistent language-based output, such as answering questions, completing sentences, or writing summaries.

- Output parsers: Extract information from model outputs. They help in getting more structured information than just text as output.
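To make the division of labor between the three components concrete, here is a minimal sketch in plain Python. It is not LangChain's API: `build_prompt`, `fake_llm`, `parse_output`, and `run_chain` are hypothetical names, and the model is a canned stub so the example runs without an API key.

```python
# Hypothetical stand-ins for the three components; none of these names come from LangChain.

def build_prompt(location: str) -> str:
    """Prompt step: fill a fixed template with a runtime value."""
    return f"Name one thing to do in {location}. Answer as 'activity: <text>'."

def fake_llm(prompt: str) -> str:
    """Model step: a canned reply standing in for a real LLM call."""
    return "activity: watch the sunrise"

def parse_output(text: str) -> dict:
    """Parser step: turn 'key: value' text into a dictionary."""
    key, _, value = text.partition(":")
    return {key.strip(): value.strip()}

def run_chain(location: str) -> dict:
    """Glue the three steps together in order: prompt -> model -> parser."""
    return parse_output(fake_llm(build_prompt(location)))

result = run_chain("Kanyakumari")
print(result)
```

Writing `run_chain` by hand for every application is the kind of glue code that a framework with reusable components is meant to save.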

Practical Application of LangChain

Let us start working with an LLM with the help of LangChain.

openai_api_key='sk-MyAPIKey'

Now, we will work with the nuts and bolts of an LLM to understand the fundamental principles of LangChain.

We will discuss chat messages first. Each message has one of three types: system, human, or AI. The roles of each are:

- System – Helpful background context that guides the AI.

- Human – Message representing the user.

- AI – Message showing the response of the AI.

# Importing necessary packages

from langchain.chat_models import ChatOpenAI

from langchain.schema import HumanMessage, SystemMessage, AIMessage

chat = ChatOpenAI(temperature=.5, openai_api_key=openai_api_key)

# temperature controls output randomness (0 = least random, 1 = highly random)

We have imported ChatOpenAI, HumanMessage, SystemMessage, and AIMessage. Temperature is a parameter that controls the degree of randomness of the output. In this article we keep it between 0 and 1 (the OpenAI API itself accepts values up to 2). If the temperature is set to 1, the output will be highly random, whereas if it is set to 0, the output will be the least random. We have set it to .5.
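The article does not describe how temperature is applied inside the model. A common textbook formulation divides the model's raw token scores by the temperature before turning them into probabilities. The sketch below follows that formulation; the function name and the scores are made up for illustration, and because real APIs treat a temperature of 0 as a special case, this sketch simply rejects it.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
low = softmax_with_temperature(logits, 0.1)   # near-deterministic
high = softmax_with_temperature(logits, 1.0)  # more spread out
print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At the lower temperature almost all of the probability lands on the top-scoring token, which is why low values give more repeatable output.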

# Creating a chat model

chat(

[

SystemMessage(content="You are a nice AI bot that helps a user figure out what to eat in one short sentence"),

HumanMessage(content="I like Bengali food, what should I eat?")

]

)

In the above lines of code, we have created a chat model and passed it two messages: a system message instructing the bot to help the user figure out what to eat in one short sentence, and a human message asking which Bengali food the user should eat. The AI message is:

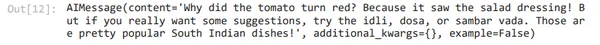

We can also pass more chat history, including responses from the AI.

# Passing chat history

chat(

[

SystemMessage(content="You are a nice AI bot that helps a user figure out where to travel in one short sentence"),

HumanMessage(content="I like the spiritual places, where should I go?"),

AIMessage(content="You should go to Madurai, Rameswaram"),

HumanMessage(content="What are the places I should visit there?")

]

)

In the above case, the system message says that the AI bot suggests places to travel in one short sentence. The user says that they like spiritual places. The AI message records the bot’s earlier reply, suggesting Madurai and Rameswaram. Then the user asks which places to visit there.

Note that the last question does not tell the model which places "there" refers to. Instead, the model refers to the history to find out where the user is going and responds accordingly.
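The history mechanism can be sketched without any LLM at all: each turn appends the user's message, sends the whole list to the model, and appends the reply. The `Message` class, `fake_chat`, and `ask` below are hypothetical names for illustration, and the stub model only reports how much history it received.

```python
from dataclasses import dataclass

@dataclass
class Message:
    role: str      # "system", "human", or "ai"
    content: str

def fake_chat(messages):
    """Stand-in model: reports how many earlier messages it can see."""
    earlier = len(messages) - 1
    return Message("ai", f"(reply based on {earlier} earlier messages)")

def ask(history, user_text, model=fake_chat):
    """Append the user's turn, call the model with the full history, store the reply."""
    history.append(Message("human", user_text))
    reply = model(history)
    history.append(reply)
    return reply

history = [Message("system", "You suggest travel destinations in one short sentence.")]
ask(history, "I like spiritual places, where should I go?")
ask(history, "What are the places I should visit there?")
print([m.role for m in history])
```

Because the second call receives the first question and its answer, a real model can resolve a word like "there" from context.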

How Do the Components of LangChain Work?

Let’s see how the three components of LangChain, discussed earlier, make an LLM work.

The Language Model

The first component is a language model. The OpenAI API is backed by a diverse set of models with different capabilities. All these models can be customized for specific applications.

# Importing OpenAI and creating a model

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-ada-001", openai_api_key=openai_api_key)

The model has been changed from the default to text-ada-001. It is the fastest model in the GPT-3 series and also the lowest in cost. Now, we are going to pass a simple string to the language model.

# Passing a regular string into the language model

llm("What day comes after Saturday?")

Thus, we obtained the desired output.

The next component is an extension of the language model, i.e., a chat model. A chat model takes a series of messages and returns a message as output.
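The difference between the two interfaces can be illustrated with a small sketch in which a chat-style function is built on top of a text-in, text-out function. This is only an illustration of the interface difference: the article does not say how real chat models handle messages internally, and `text_llm`, `render_transcript`, and `chat_model` are hypothetical names.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, content)

def text_llm(prompt: str) -> str:
    """Hypothetical text-in, text-out model with a canned reply."""
    return " Sunday"

def render_transcript(messages: List[Message]) -> str:
    """Flatten a list of messages into one prompt string."""
    lines = [f"{role}: {content}" for role, content in messages]
    lines.append("ai:")  # cue the model to answer as the AI
    return "\n".join(lines)

def chat_model(messages: List[Message]) -> Message:
    """Messages in, one message out, backed by the text model above."""
    reply = text_llm(render_transcript(messages))
    return ("ai", reply.strip())

messages = [
    ("system", "Answer in one word."),
    ("human", "What day comes after Saturday?"),
]
reply = chat_model(messages)
print(reply)
```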

from langchain.chat_models import ChatOpenAI

from langchain.schema import HumanMessage, SystemMessage, AIMessage

chat = ChatOpenAI(temperature=1, openai_api_key=openai_api_key)

We have set the temperature to 1 to make the model more random.

# Passing a series of messages to the model

chat(

[

SystemMessage(content="You are an unhelpful AI bot that makes a joke at whatever the user says"),

HumanMessage(content="I would like to eat South Indian food, what are some good South Indian food I can try?")

]

)

Here, the system message tells the AI bot that it is an unhelpful one that makes a joke at whatever the user says. The user is asking for some good South Indian food suggestions. Let us see the output.

Here, we see that it throws in some jokes at the start, but it did suggest some good South Indian food as well.

The Prompt

The second component is the prompt. It acts as the input to the model and is rarely hard-coded. A prompt is usually assembled from multiple components, and a prompt template is responsible for constructing this input. LangChain makes working with prompts easier.

# Educational Immediate

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-davinci-003", openai_api_key=openai_api_key)

immediate = """

In the present day is Monday, tomorrow is Wednesday.

What's mistaken with that assertion?

"""

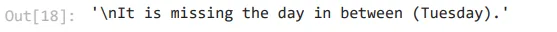

llm(immediate)The above prompts are of educational kind. Allow us to see the output

So, it appropriately picked up the error.

Immediate templates are like pre-defined recipes for producing prompts for LLM. Directions, few-shot examples, and particular context and questions for a given job kind a part of a template.

from langchain.llms import OpenAI

from langchain import PromptTemplate

llm = OpenAI(model_name="text-davinci-003", openai_api_key=openai_api_key)

# Discover "location" under, that may be a placeholder for one more worth later

template = """

I actually need to journey to {location}. What ought to I do there?

Reply in a single brief sentence

"""

immediate = PromptTemplate(

input_variables=["location"],

template=template,

)

final_prompt = immediate.format(location='Kanyakumari')

print (f"Ultimate Immediate: {final_prompt}")

print ("-----------")

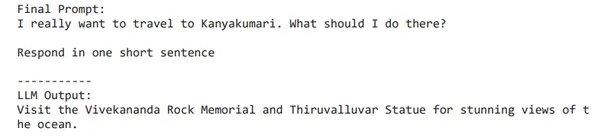

print (f"LLM Output: {llm(final_prompt)}")So, we have now imported packages on the outset. The mannequin we have now used right here is text-DaVinci-003, which might do any language job with higher high quality, longer output, and constant directions in comparison with Curie, Babbage, or Ada. So, now we have now created a template. The enter variable is location, and the worth is Kanyakumari.
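What a prompt template does can be approximated in a few lines of standard-library Python. The class below is a simplified, hypothetical stand-in (`SimpleTemplate` is not a LangChain class): it checks that the declared input variables match the placeholders in the template text, then fills them in.

```python
from string import Formatter

class SimpleTemplate:
    """Hypothetical, simplified stand-in for a prompt template."""

    def __init__(self, template, input_variables):
        # Collect the placeholder names that actually appear in the template text.
        found = {name for _, name, _, _ in Formatter().parse(template) if name}
        if found != set(input_variables):
            raise ValueError(
                f"template uses {sorted(found)}, declared {sorted(input_variables)}"
            )
        self.template = template
        self.input_variables = list(input_variables)

    def format(self, **values):
        """Fill every declared variable; fail loudly if one is missing."""
        missing = set(self.input_variables) - set(values)
        if missing:
            raise KeyError(f"missing values for: {sorted(missing)}")
        return self.template.format(**values)

travel = SimpleTemplate(
    "I really want to travel to {location}. What should I do there?",
    input_variables=["location"],
)
print(travel.format(location="Kanyakumari"))
```

Validating the variables up front means a typo in a placeholder name surfaces when the template is created, not halfway through a chain of model calls.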

The Output Parser

The third component is the output parser, which formats the output of a model. A parser is a method that extracts the model’s text output into a desired format.

from langchain.output_parsers import StructuredOutputParser, ResponseSchema

from langchain.prompts import ChatPromptTemplate, HumanMessagePromptTemplate

from langchain.llms import OpenAIllm = OpenAI(model_name="text-davinci-003", openai_api_key=openai_api_key)# How you prefer to your response structured. That is mainly a flowery immediate template

response_schemas = [

ResponseSchema(name="bad_string", description="This a poorly formatted user input string"),

ResponseSchema(name="good_string", description="This is your response, a reformatted response")

]

# The way you want to parse your output

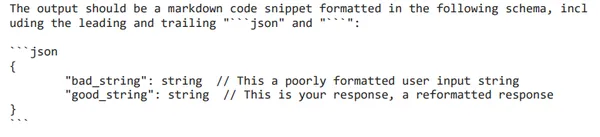

output_parser = StructuredOutputParser.from_response_schemas(response_schemas)# See the immediate template you created for formatting

format_instructions = output_parser.get_format_instructions()

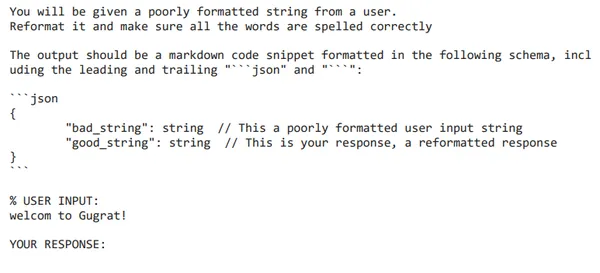

print (format_instructions)

template = """

You can be given a poorly formatted string from a consumer.

Reformat it and ensure all of the phrases are spelled appropriately

{format_instructions}

% USER INPUT:

{user_input}

YOUR RESPONSE:

"""

immediate = PromptTemplate(

input_variables=["user_input"],

partial_variables={"format_instructions": format_instructions},

template=template

)

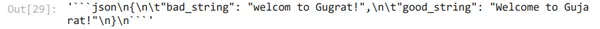

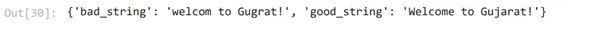

promptValue = immediate.format(user_input="welcom to Gugrat!")

print(promptValue)

llm_output = llm(promptValue)

llm_output

output_parser.parse(llm_output)

The language model only returns a string, so if we need a JSON object, we have to parse that string. In the response schema above, there are two fields, viz., bad_string and good_string. We then created a prompt template that includes the parser’s format instructions.
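The parsing step itself can be sketched with the standard library. The example assumes the model wraps its JSON answer in a fenced `json` snippet; that assumption, the function name `parse_structured`, and the sample reply are all illustrative and not taken from LangChain's implementation.

```python
import json
import re

FENCE = "`" * 3  # built this way to avoid a literal fence inside this example

def parse_structured(text, expected_keys):
    """Pull a JSON object out of model text and check that its keys are present."""
    pattern = re.escape(FENCE) + r"(?:json)?\s*(.*?)" + re.escape(FENCE)
    match = re.search(pattern, text, re.DOTALL)
    payload = match.group(1) if match else text  # fall back to the raw text
    data = json.loads(payload)
    missing = [key for key in expected_keys if key not in data]
    if missing:
        raise ValueError(f"model output is missing keys: {missing}")
    return data

# A made-up model reply in the assumed format.
raw = (
    "Here is the result:\n"
    + FENCE + "json\n"
    + '{"bad_string": "welcom to Gugrat!", "good_string": "Welcome to Gujarat!"}\n'
    + FENCE
)
parsed = parse_structured(raw, ["bad_string", "good_string"])
print(parsed["good_string"])
```

Checking for the expected keys is what turns "some text that looks like JSON" into a result the rest of the application can rely on.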

Conclusion

In this article, we have briefly examined the key components of LangChain and their applications. At the outset, we understood what LangChain is and how it simplifies the difficult task of working and building with AI models. We have also understood the key components of LangChain, viz. prompts (a set of instructions passed by a user to guide the model to produce a consistent output), language models (the base that helps in giving a desired output), and output parsers (which enable getting more structured information than just text as output). By understanding these key components, we have built a strong foundation for building customized applications.

Key Takeaways

- LLMs possess the capacity to revolutionize AI. They open a plethora of opportunities for information seekers, as anything can be asked and answered.

- While basic ChatGPT prompt engineering works well for many purposes, LangChain-based LLM application development is much faster.

- The high degree of integration with various AI platforms helps utilize LLMs better.

Frequently Asked Questions

Q1. What are the two packages of LangChain?

Ans. Python and JavaScript are the two packages of LangChain.

Q2. What is temperature?

Ans. Temperature is a parameter that controls the degree of randomness of the output. In the examples here, its value ranges from 0 to 1.

Q3. Which is the fastest model in the GPT-3 series?

Ans. text-ada-001 is the fastest model in the GPT-3 series.

Q4. What is a parser?

Ans. A parser is a method that extracts a model’s text output into a desired format.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
