
Next.js offers far more than standard server-side rendering capabilities. Software engineers can configure their web apps in many ways to optimize Next.js performance. In fact, Next.js developers routinely employ different caching strategies, varied pre-rendering techniques, and dynamic components to optimize and customize Next.js rendering to meet specific requirements.

When your goal is developing a scalable multipage web app with tens of thousands of pages, it's all the more important to maintain a balance between Next.js page load speed and optimal server load. Choosing the right rendering techniques is crucial in building a performant web app that won't waste hardware resources and generate additional costs.

Next.js Pre-rendering Techniques

Next.js pre-renders every page by default, but performance and efficiency can be further improved using different Next.js rendering types and approaches to pre-rendering and rendering. In addition to traditional client-side rendering (CSR), Next.js offers developers a choice between two basic forms of pre-rendering:

- Server-side rendering (SSR) deals with rendering webpages at runtime when the request is made. This technique increases server load but is essential if the page has dynamic content and needs social visibility.

- Static site generation (SSG) mainly deals with rendering webpages at build time. Next.js offers additional options for static generation with or without data, as well as automatic static optimization, which determines whether or not a page can be pre-rendered.

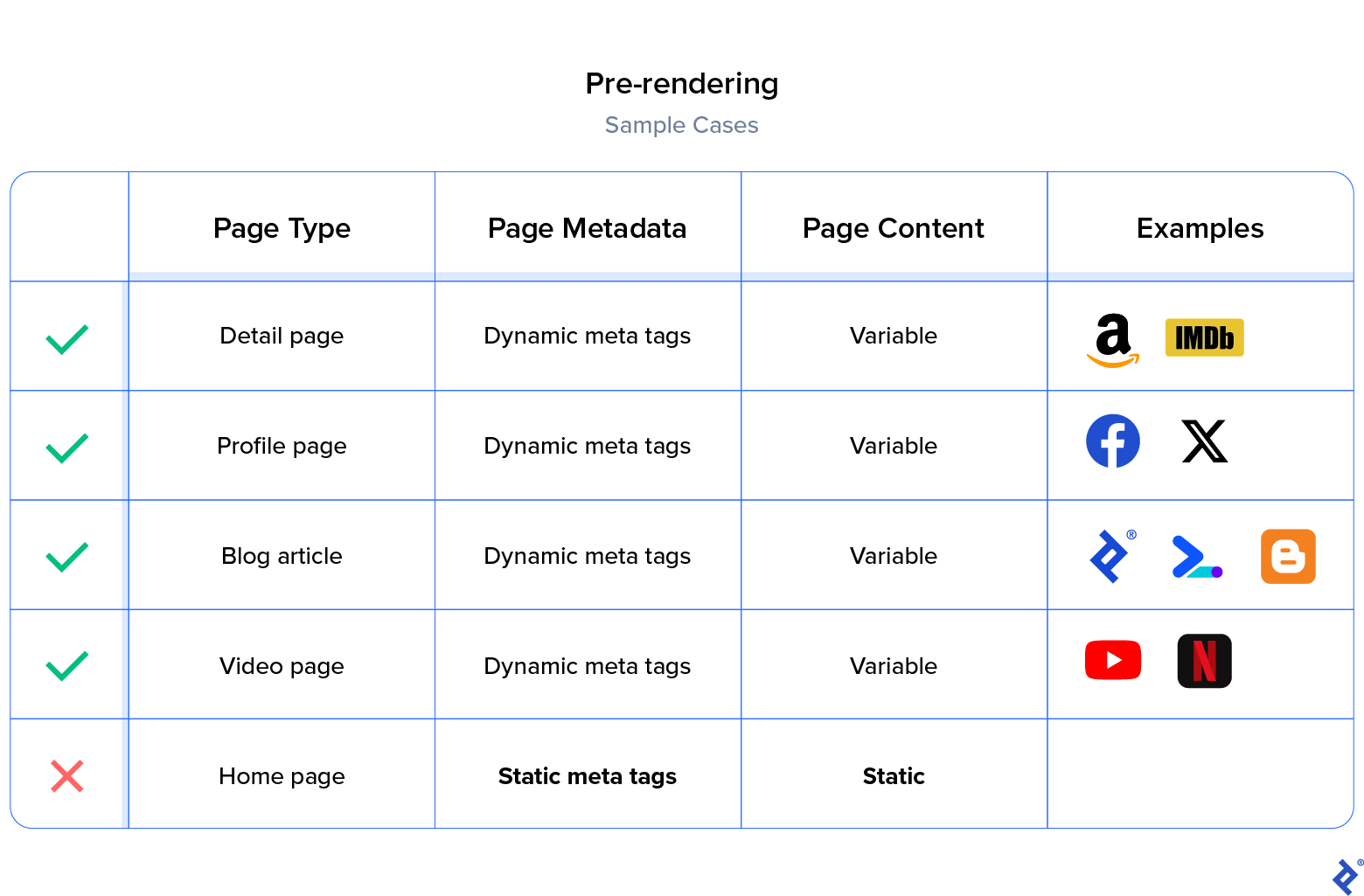

Pre-rendering is useful for pages that need social attention (Open Graph protocol) and good SEO (meta tags) but contain dynamic content based on the route endpoint. For example, an X (formerly Twitter) user page with a /@twitter_name endpoint has page-specific metadata. Hence, pre-rendering all pages in this route is a good option.

Metadata is not the only reason to choose SSR over CSR; rendering the HTML on the server can also lead to significant improvements in first input delay (FID), the Core Web Vitals metric that measures the time from a user's first interaction to the time when the browser is actually able to process a response. When rendering heavy (data-intensive) components on the client side, FID becomes more noticeable to users, especially those with slower internet connections.

If Next.js performance optimization is the top priority, one must not overpopulate the DOM tree on the server side, which inflates the HTML document. If the content belongs to a list at the bottom of the page and isn't immediately visible in the first load, client-side rendering is a better option for that particular component.

Pre-rendering can be further divided into multiple optimal methods by determining factors such as variability, bulk size, and frequency of updates and requests. We must pick the appropriate strategies while keeping in mind the server load; we don't want to adversely affect the user experience or incur unnecessary hosting costs.

Determining the Factors for Next.js Performance Optimization

Just as traditional server-side rendering imposes a high load on the server at runtime, pure static generation will place a high load at build time. We must make careful decisions to configure the rendering technique depending on the nature of the webpage and route.

When dealing with Next.js optimization, the options provided are abundant, and we have to determine the following criteria for each route endpoint:

- Variability: The content of the webpage, either time dependent (changes every minute), action dependent (changes when a user creates/updates a document), or stale (doesn't change until a new build).

- Bulk size: The estimate of the maximum number of pages in that route endpoint (e.g., 30 genres in a streaming app).

- Frequency of updates: The estimated rate of content updates (e.g., 10 updates per month), whether time dependent or action dependent.

- Frequency of requests: The estimated rate of user/client requests to a webpage (e.g., 100 requests per day, 10 requests per second).
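As a rough sketch of how these four factors might feed a decision, the hypothetical helper below maps them to the strategies covered in the sections that follow. The function name, the option names, and the 10,000-page cutoff are illustrative assumptions, not Next.js APIs or recommended values.

```javascript
// Hypothetical helper: maps the four factors to a rendering strategy.
// The cutoff below is an assumed value chosen only for illustration.
const BULK_SIZE_LIMIT = 10000; // assumed point where build-time generation gets too costly

function chooseRenderingStrategy({ variability, bulkSize, updatesPerDay, requestsPerDay }) {
  if (variability === 'stale') {
    return 'SSG'; // content changes only with a new build
  }
  if (variability === 'time') {
    return bulkSize <= BULK_SIZE_LIMIT ? 'ISR' : 'SSR with caching';
  }
  if (variability === 'action') {
    // When updates outpace requests, regenerate on a timer instead of per update.
    if (updatesPerDay > requestsPerDay) {
      return bulkSize <= BULK_SIZE_LIMIT ? 'ISR' : 'SSR with caching';
    }
    return 'ODR';
  }
  throw new Error(`Unknown variability: ${variability}`);
}
```

For instance, a route with 200 time-dependent genre pages would map to ISR under these assumptions, while two million time-dependent pages would map to SSR with caching.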

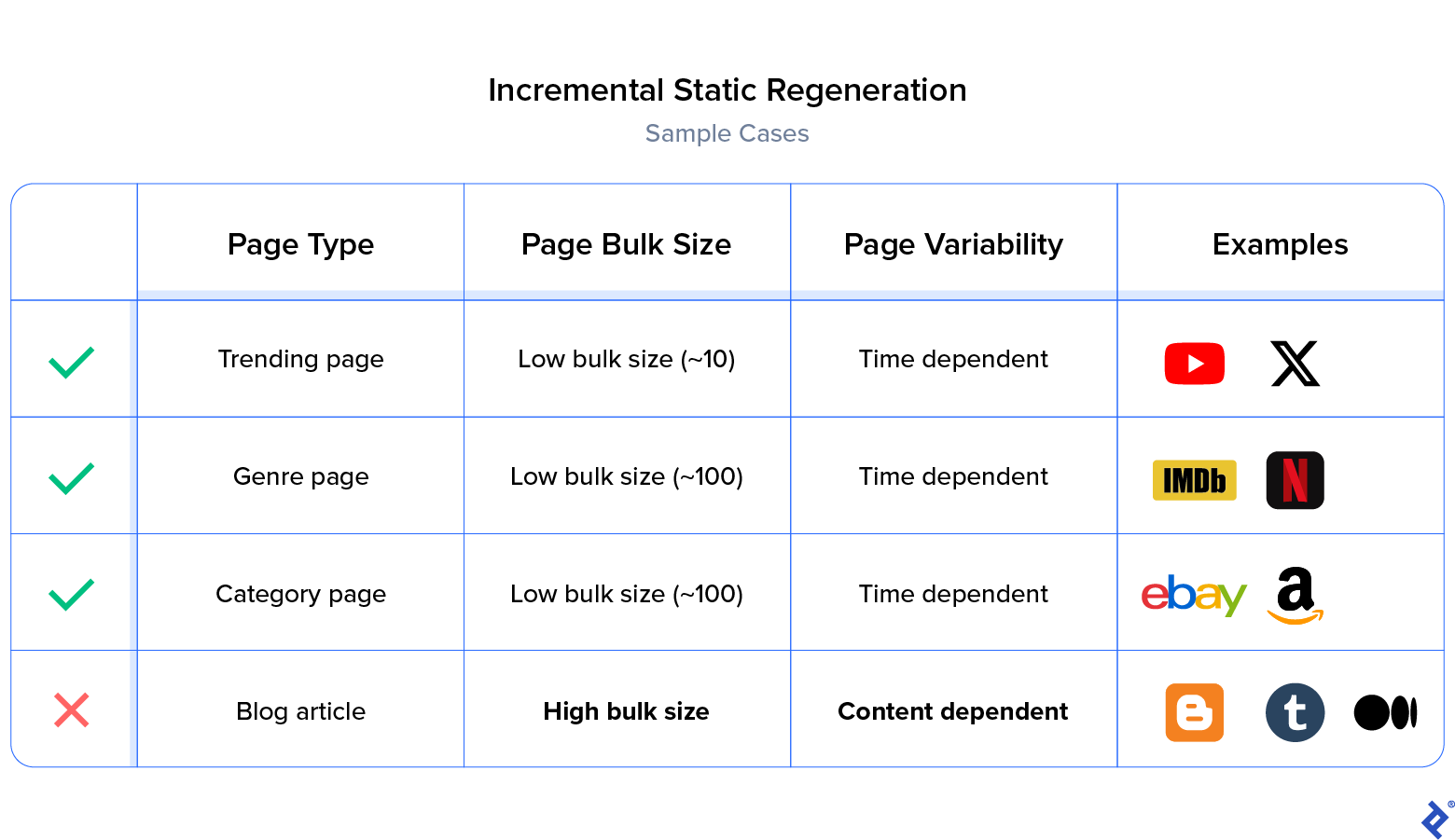

Low Bulk Size and Time-dependent Variability

Incremental static regeneration (ISR) revalidates the webpage at a specified interval. This is the best option for standard build pages in a website, where the data is expected to be refreshed at a certain interval. For example, there is a genres/genre_id route point in an over-the-top media app like Netflix, and each genre page needs to be regenerated with fresh content on a daily basis. As the bulk size of genres is small (about 200), it is a better option to choose ISR, which revalidates the page given the condition that the pre-built/cached page is more than one day old.

Here is an example of an ISR implementation:

    export async function getStaticProps() {
      const posts = await fetch('<url-endpoint>').then((data) => data.json());
      /* revalidate at most every 10 secs */
      return { props: { posts }, revalidate: 10 };
    }

    export async function getStaticPaths() {
      const posts = await fetch('<url-endpoint>').then((data) => data.json());
      // Each entry needs a params object; param values must be strings.
      const paths = posts.map((post) => ({
        params: { id: String(post.id) },
      }));
      // fallback: false returns a 404 for any path not listed here.
      return { paths, fallback: false };
    }

In this example, Next.js will revalidate all these pages every 10 seconds at most. The key here is at most, as the page does not regenerate every 10 seconds, but only when a request comes in. Here's a step-by-step walkthrough of how it works:

- A user requests an ISR page route.

- Next.js sends the cached (stale) page.

- Next.js checks whether the stale page has aged more than 10 seconds.

- If so, Next.js regenerates the new page.
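The steps above can be modeled with a small in-memory sketch. This is not how Next.js implements ISR internally; `createIsrCache`, `render`, and the injected `now` clock are hypothetical names, and the regeneration runs synchronously here for simplicity, whereas Next.js regenerates in the background.

```javascript
// Minimal in-memory model of the ISR request flow (illustrative only).
function createIsrCache(render, revalidateSeconds, now = () => Date.now()) {
  const cache = new Map(); // path -> { html, generatedAt }

  return function handleRequest(path) {
    const entry = cache.get(path);
    if (!entry) {
      // First request for this path: nothing cached yet, so render and store.
      const html = render(path);
      cache.set(path, { html, generatedAt: now() });
      return html;
    }
    const ageSeconds = (now() - entry.generatedAt) / 1000;
    if (ageSeconds > revalidateSeconds) {
      // The page is stale: regenerate it for later requests...
      cache.set(path, { html: render(path), generatedAt: now() });
    }
    // ...but this request still receives the page that was cached on arrival.
    return entry.html;
  };
}
```

With a 10-second interval, a request arriving 11 seconds after generation still gets the old page, and the request after that gets the regenerated one.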

High Bulk Size and Time-dependent Variability

Most server-side applications fall into this category. We term them public pages as these routes can be cached for a period of time because their content is not user dependent, and the data does not need to be up to date at all times. In these cases, the bulk size is usually too high (~2 million), and generating millions of pages at build time is not a viable solution.

SSR and Caching:

The better option is always to do server-side rendering, i.e., to generate the webpage at runtime when requested on the server and cache the page for an entire day, hour, or minute, so that any later request will get a cached page. This ensures the app does not need to build millions of pages at build time, nor repetitively build the same page at runtime.

Let's see a basic example of an SSR and caching implementation:

    export async function getServerSideProps({ req, res }) {
      /* setting a cache of 10 secs */
      res.setHeader('Cache-Control', 'public, s-maxage=10');
      // await the fetch so props receives the data, not a pending promise
      const data = await fetch('<url-endpoint>').then((response) => response.json());
      return {
        props: { data },
      };
    }

You may examine the Next.js caching documentation if you would like to learn more about cache headers.

ISR and Fallback:

Although generating millions of pages at build time is not an ideal solution, sometimes we do need them generated in the build folder for further configuration or custom rollbacks. In this case, we can optionally bypass page generation at the build step, rendering on demand only for the very first request or any succeeding request that crosses the stale age (revalidate interval) of the generated webpage.

We start by adding {fallback: 'blocking'} to getStaticPaths, and when the build starts, we switch off the API (or prevent access to it) so that it will not generate any path routes. This effectively bypasses the phase of needlessly building millions of pages at build time, instead generating them on demand at runtime and keeping the results in the build output folder (.next) for succeeding requests and builds.

Here is an example of limiting static generation at the build phase:

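    export async function getStaticPaths() {
      // fallback: 'blocking' will server-render a page on demand
      // if the page doesn't exist already.
      if (process.env.SKIP_BUILD_STATIC_GENERATION) {
        return { paths: [], fallback: 'blocking' };
      }
      // Otherwise, pre-render the known paths at build time.
      const posts = await fetch('<url-endpoint>').then((data) => data.json());
      const paths = posts.map((post) => ({ params: { id: String(post.id) } }));
      return { paths, fallback: 'blocking' };
    }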

Now we want the generated page to go into the cache for a period of time and revalidate later on when it crosses the cache period. We can use the same approach as in our ISR example:

    export async function getStaticProps() {
      const posts = await fetch('<url-endpoint>').then((data) => data.json());
      // Revalidates at most every 10 secs.
      return { props: { posts }, revalidate: 10 };
    }

If there is a new request after 10 seconds, the page will be revalidated (or generated if the page is not built already), effectively working the same way as SSR and caching, but storing the generated webpage in the build output folder (.next).

In most cases, SSR with caching is the better option. The downside of ISR and fallback is that the page may initially show stale data. A page won't be regenerated until a user visits it (to trigger the revalidation), and then the same user (or another user) visits the same page to see the most up-to-date version of it. This does have the unavoidable consequence of User A seeing stale data while User B sees accurate data. For some apps, this is insignificant, but for others, it's unacceptable.

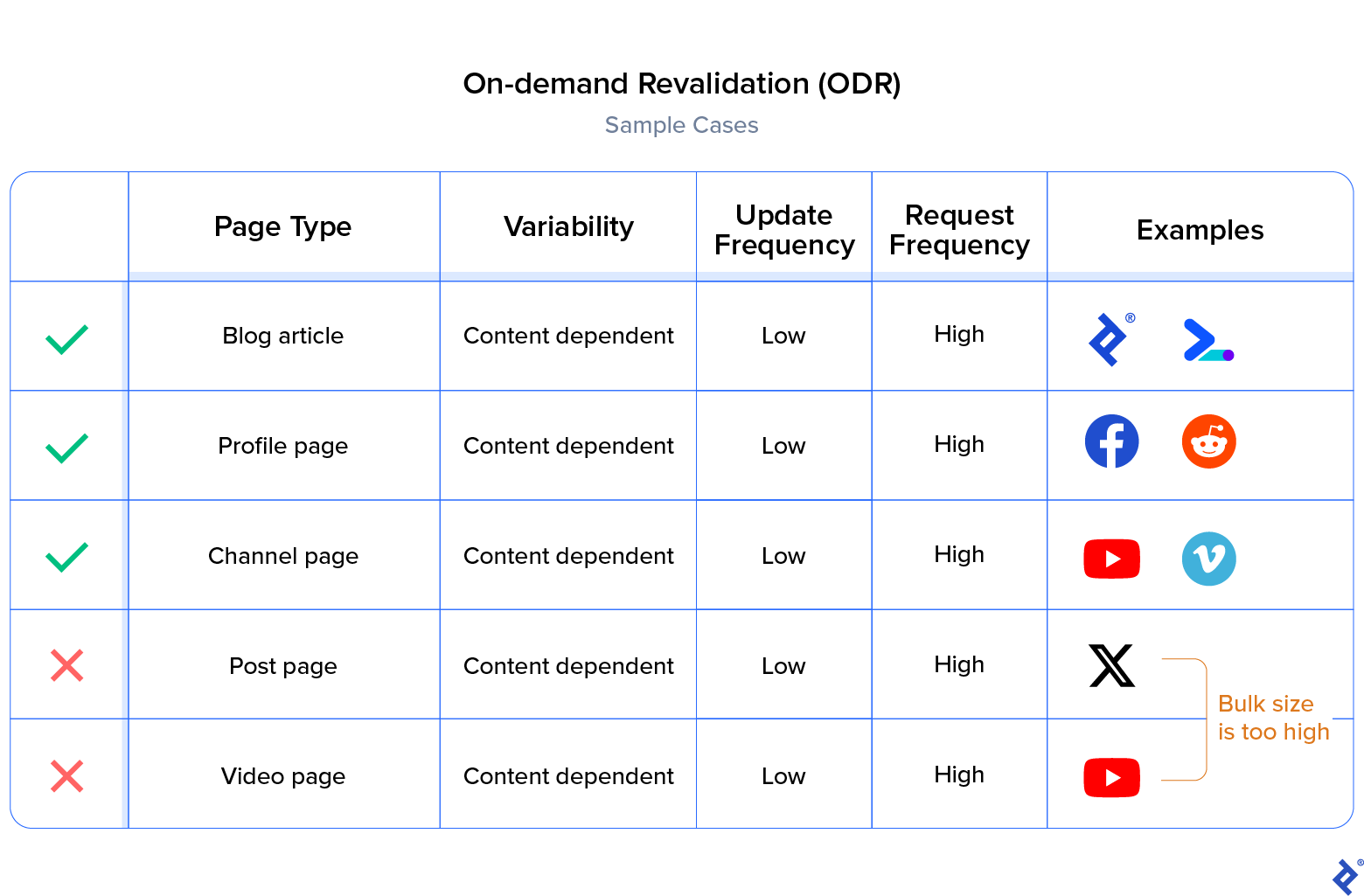

Content-dependent Variability

On-demand revalidation (ODR) revalidates the webpage at runtime via a webhook. This is quite useful for Next.js speed optimization in cases in which the page needs to stay true to its content, e.g., if we are building a blog with a headless CMS that provides webhooks for when content is created or updated. We can call the respective API endpoint to revalidate a webpage. The same is true for REST APIs in the back end: when we update or create a document, we can send a request to revalidate the webpage.

Let's see an example of ODR in action:

    // Calling this URL will revalidate an article.
    // https://<your-site.com>/api/revalidate?revalidate_path=<article_id>&secret=<token>

    // pages/api/revalidate.js
    export default async function handler(req, res) {
      // Reject requests that don't carry the shared secret.
      if (req.query.secret !== process.env.MY_SECRET_TOKEN) {
        return res.status(401).json({ message: 'Invalid token' });
      }
      try {
        // res.revalidate expects the page path (e.g., '/<article_id>'), not a full URL.
        await res.revalidate('/' + req.query.revalidate_path);
        return res.json({ revalidated: true });
      } catch (err) {
        return res.status(500).send('Error revalidating');
      }
    }
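On the calling side, a CMS webhook or back-end service needs to build the revalidation URL safely. The sketch below shows one way to do so with the standard `URL` API; `buildRevalidateUrl` and the example origin are hypothetical, while the `revalidate_path` and `secret` query parameters are the ones the handler above reads.

```javascript
// Hypothetical helper for the caller of the revalidation endpoint.
// URL and URLSearchParams take care of percent-encoding the values.
function buildRevalidateUrl(siteOrigin, articlePath, secret) {
  const url = new URL('/api/revalidate', siteOrigin);
  url.searchParams.set('revalidate_path', articlePath);
  url.searchParams.set('secret', secret);
  return url.toString();
}

// Example usage (assumed origin and token):
// await fetch(buildRevalidateUrl('https://example.com', 'articles/42', token));
```

Encoding matters here because an article path may contain slashes or other characters that would otherwise break the query string.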

If we have a very large bulk size (~2 million), we might want to skip page generation at the build phase by passing an empty array of paths:

    export async function getStaticPaths() {
      // No paths are pre-built, so no data needs to be fetched here.
      // Pages are server-rendered on demand if the path doesn't exist yet.
      return { paths: [], fallback: 'blocking' };
    }

This prevents the downside described in ISR. Instead, both User A and User B will see accurate data on revalidation, and the resulting regeneration happens in the background and not at request time.

There are scenarios in which content-dependent variability can be force switched to time-dependent variability, i.e., if the bulk size and the update or request frequency are too high.

Let's use an IMDb movie details page as an example. Although new reviews may be added or the score may change, there is no need to reflect the details within seconds; even if it is an hour late, it doesn't affect the functionality of the app. However, the server load can be minimized greatly by shifting to ISR, as you do not want to update the movie details page every time a user adds a review. Technically, as long as the update frequency is greater than the request frequency, it can be force switched.
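To make the trade-off concrete, the following back-of-the-envelope sketch compares how often a single page would be regenerated per day under each approach. The function and parameter names are hypothetical, and the model is deliberately simplified: ODR regenerates once per content update, while ISR regenerates at most once per revalidate window and only when a request arrives.

```javascript
// Rough estimate of regenerations per day for one page (illustrative model).
function regenerationsPerDay({ updatesPerDay, requestsPerDay, revalidateSeconds }) {
  const SECONDS_PER_DAY = 86400;
  const windowsPerDay = Math.floor(SECONDS_PER_DAY / revalidateSeconds);
  return {
    odr: updatesPerDay, // one regeneration per update
    isrUpperBound: Math.min(requestsPerDay, windowsPerDay), // capped by requests and windows
  };
}
```

Under these assumptions, a page with 5,000 reviews added per day and a one-hour revalidate interval would be regenerated 5,000 times with ODR but no more than 24 times with ISR.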

With the launch of React server components in React 18, Layouts RFC is one of the most awaited feature updates in the Next.js platform, enabling support for single-page applications, nested layouts, and a new routing system. Layouts RFC supports improved data fetching, including parallel fetching, which allows Next.js to start rendering before data fetching is complete. With sequential data fetching, content-dependent rendering would be possible only after the previous fetch was completed.
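The difference between sequential and parallel fetching can be illustrated in plain JavaScript, independent of any Next.js API. In this sketch, `fetchMovie`, `fetchReviews`, and their delays are made-up stand-ins for real data sources.

```javascript
// Simulated data sources; names and delays are assumptions for illustration.
const delay = (ms, value) => new Promise((resolve) => setTimeout(() => resolve(value), ms));
const fetchMovie = () => delay(30, { title: 'Example Movie' });
const fetchReviews = () => delay(30, [{ rating: 5 }]);

// Sequential: the second fetch starts only after the first one resolves.
async function loadSequentially() {
  const movie = await fetchMovie();
  const reviews = await fetchReviews();
  return { movie, reviews };
}

// Parallel: both fetches start immediately and are awaited together.
async function loadInParallel() {
  const [movie, reviews] = await Promise.all([fetchMovie(), fetchReviews()]);
  return { movie, reviews };
}
```

Both functions return the same data, but the parallel version waits roughly as long as the slowest fetch rather than the sum of both.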

Next.js Hybrid Approaches With CSR

In Next.js, client-side rendering always happens after pre-rendering. It is often treated as an add-on rendering type that is quite useful in cases in which we need to reduce server load, or if the page has components that can be lazy loaded. The hybrid approach of pre-rendering and CSR is advantageous in many scenarios.

If the content is dynamic and does not require Open Graph integration, we should choose client-side rendering. For example, we can select SSG/SSR to pre-render an empty layout at build time and populate the DOM after the component loads.

In cases like these, the metadata is typically not affected. For example, the Facebook home feed updates every 60 seconds (i.e., variable content). However, the page metadata remains constant (e.g., the page title, home feed), hence not affecting the Open Graph protocol and SEO visibility.

Dynamic Components

Client-side rendering is appropriate for content not visible in the window frame on the first load, or for components hidden by default until an action (e.g., login modals, alerts, dialogues). You can display these components either by loading that content after the render (if the component for rendering is already in the JavaScript bundle) or by lazy loading the component itself through next/dynamic.

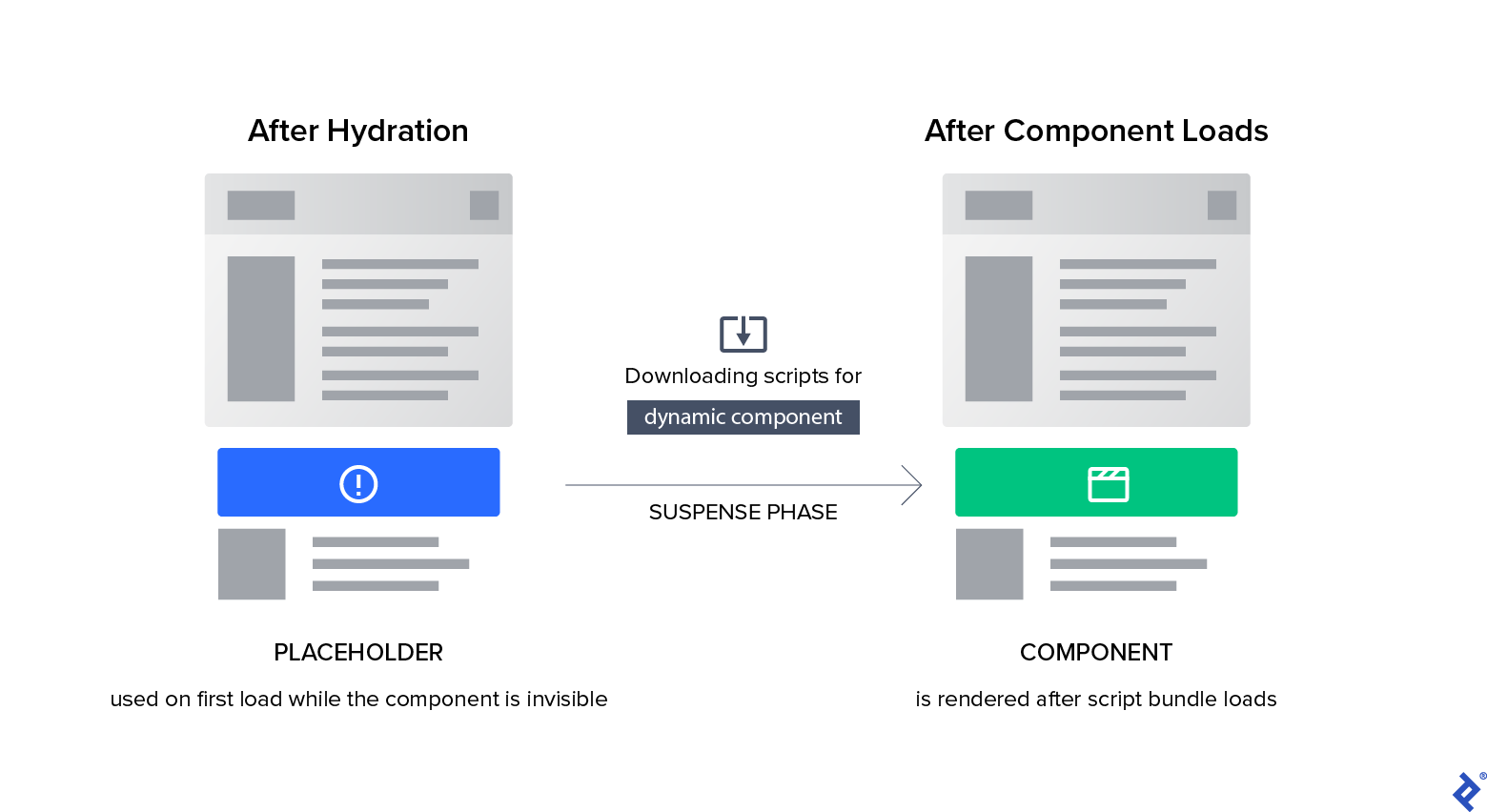

Usually, a website render starts with plain HTML, followed by the hydration of the page and client-side rendering techniques such as content fetching on component loads or dynamic components.

Hydration is a process in which React uses the JSON data and JavaScript instructions to make components interactive (for example, attaching event handlers to a button). This often makes the user feel as if the page is loading a bit slower, like in an empty X profile layout in which the profile content is loading progressively. Sometimes it is better to eliminate such scenarios by pre-rendering, especially if the content is already available at the time of pre-render.

The suspense phase represents the interval period for dynamic component loading and rendering. In Next.js, we are provided with an option to render a placeholder or fallback component during this phase.

An example of importing a dynamic component in Next.js:

    import dynamic from 'next/dynamic'

    /* loads the component on client side */
    const DynamicModal = dynamic(() => import('../components/modal'), {
      ssr: false,
    })

You can render a fallback component while the dynamic component is loading:

    import { Suspense } from 'react'
    import dynamic from 'next/dynamic'

    /* prevents hydration until the Suspense boundary resolves */
    const DynamicModal = dynamic(() => import('../components/modal'), {
      suspense: true,
    })

    export default function Home() {
      return (
        <Suspense fallback={`Loading...`}>
          <DynamicModal />
        </Suspense>
      )
    }

Note that next/dynamic comes with a Suspense callback to show a loader or empty layout until the component loads, so the dynamically imported modal component will not be included in the page's initial JavaScript bundle (reducing the initial load time). The page will render the Suspense fallback component first, followed by the Modal component when the Suspense boundary is resolved.

Next.js Caching: Tips and Techniques

If you need to improve page performance and reduce server load at the same time, caching is the most useful tool in your arsenal. In SSR and caching, we've discussed how caching can effectively improve availability and performance for route points with a large bulk size. Usually, all Next.js assets (pages, scripts, images, videos) have cache configurations that we can add to and tweak to suit our requirements. Before we examine this, let's briefly cover the core concepts of caching. A request for a webpage passes through three different checkpoints when a user opens any website in a web browser:

- The browser cache is the first checkpoint for all HTTP requests. If there is a cache hit, the response will be served directly from the browser cache store, while a cache miss will pass on to the next checkpoint.

- The content delivery network (CDN) cache is the second checkpoint. It is a cache store distributed to different proxy servers across the globe. This is also called caching on the edge.

- The origin server is the third checkpoint, where the request is served and revalidated if the cache store pushes a revalidate request (i.e., the page in the cache has become stale).
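The three checkpoints can be pictured as a chain of lookups. The toy model below uses plain `Map` objects for the two cache stores; `resolveRequest` and `renderAtOrigin` are hypothetical names, and freshness checks are deliberately left out to keep the flow visible.

```javascript
// Toy model of the browser -> CDN -> origin lookup chain (no freshness logic).
function resolveRequest(url, browserCache, cdnCache, renderAtOrigin) {
  if (browserCache.has(url)) {
    return { body: browserCache.get(url), servedBy: 'browser' };
  }
  if (cdnCache.has(url)) {
    const body = cdnCache.get(url);
    browserCache.set(url, body); // fill the browser cache on the way back
    return { body, servedBy: 'cdn' };
  }
  // Both caches missed: the origin server renders the page.
  const body = renderAtOrigin(url);
  cdnCache.set(url, body);
  browserCache.set(url, body);
  return { body, servedBy: 'origin' };
}
```

In this model, the first visitor reaches the origin, a repeat visit is answered by the browser cache, and a different visitor behind the same CDN is answered at the edge.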

Caching headers are added to all immutable assets originating from /_next/static, such as CSS, JavaScript, images, and so on:

Cache-Control: public, max-age=31536000, immutable

The caching header for Next.js server-side rendering is configured by the Cache-Control header in getServerSideProps:

res.setHeader('Cache-Control', 'public, s-maxage=10, stale-while-revalidate=59');

However, for statically generated pages (SSGs), the caching header is autogenerated by the revalidate option in getStaticProps.

Understanding and Configuring a Cache Header

Writing a cache header is straightforward, provided you learn how to configure it properly. Let's examine what each directive means.

Public vs. Private

One important decision to make is choosing between private and public. public indicates that the response can be stored in a shared cache (CDN, proxy cache, etc.), while private indicates that the response can be stored only in the private cache (local cache in the browser).

If the page is targeted to many users and will look the same to these users, then opt for public, but if it's targeted to individual users, then choose private.

private is rarely used on the web as most of the time developers try to utilize the edge network to cache their pages, whereas private will essentially prevent that and cache the page locally on the client end. private should be used if the page is user specific and contains private information, i.e., data we would not want cached on public cache stores. Because s-maxage applies only to shared caches, a private response sets its lifetime with max-age:

Cache-Control: private, max-age=1800

Maximum Age

s-maxage is the maximum age of a cached page (i.e., how long it can be considered fresh), and a revalidation occurs if a request crosses the specified value. While there are exceptions, s-maxage should be suitable for most websites. You can decide its value based on your analytics and the frequency of content change. If the same page has a thousand hits every day and the content is only updated once a day, then choose an s-maxage value of 24 hours.

Must Revalidate vs. Stale While Revalidate

must-revalidate specifies that the response in the cache store can be reused as long as it is fresh, but must be revalidated if it is stale. stale-while-revalidate specifies that the response in the cache store can be reused even if it's stale for the specified period of time (as it revalidates in the background).

If you know the content will change at a given interval, making preexisting content invalid, use must-revalidate. For example, you would use it for a stock exchange website where prices oscillate daily and old data quickly becomes invalid.

In contrast, stale-while-revalidate is used when we know content changes at every interval, and past content becomes deprecated, but not exactly invalid. Picture a top 10 trending page on a streaming service. The content changes daily, but it's acceptable to show the first few hits as old data, as the first hit will revalidate the page; technically speaking, this is acceptable if the website traffic is not too high, or the content is of no major importance. If the traffic is very high, then maybe a thousand users will see the wrong page in the fraction of a minute that it takes the page to be revalidated. The rule of thumb is to ensure the content change is not a high priority.

Depending on the level of importance, you can choose to enable the stale page for a certain period. This period is usually 59 seconds, as most pages take up to a minute to rebuild:

Cache-Management: public, s-maxage=3600, stale-while-revalidate=59

Stale If Error

Another helpful configuration is stale-if-error:

Cache-Management: public, s-maxage=3600, stale-while-revalidate=59, stale-if-error=300

Assuming that the page rebuild failed, and keeps failing due to a server error, this limits the time that stale data can be used.
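Since these directives are usually combined into one header, a small helper can keep the string consistent across routes. The following is a sketch under the assumption that all values are given in seconds; `buildCacheControl` and its option names are hypothetical, not part of Next.js.

```javascript
// Hypothetical helper that assembles a Cache-Control value from options.
function buildCacheControl({ visibility = 'public', sMaxAge, staleWhileRevalidate, staleIfError }) {
  const directives = [visibility];
  if (sMaxAge !== undefined) directives.push(`s-maxage=${sMaxAge}`);
  if (staleWhileRevalidate !== undefined) {
    directives.push(`stale-while-revalidate=${staleWhileRevalidate}`);
  }
  if (staleIfError !== undefined) directives.push(`stale-if-error=${staleIfError}`);
  return directives.join(', ');
}

// Example usage inside getServerSideProps:
// res.setHeader('Cache-Control', buildCacheControl({ sMaxAge: 3600, staleWhileRevalidate: 59 }));
```

Centralizing the header in one function makes it easier to adjust cache periods per route without retyping directive names.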

The Future of Next.js Rendering

There is no perfect configuration that suits all needs and purposes, and the best strategy often depends on the type of web application. However, you can start by determining the factors and picking the right Next.js rendering type and technique for your needs.

Special attention needs to be paid to cache settings depending on the volume of expected users or page views per day. A large-scale application with dynamic content will require a smaller cache period for better performance and reliability, but the opposite is true for small-scale applications.

While the techniques demonstrated in this article should suffice to cover nearly all scenarios, Vercel regularly releases Next.js updates and adds new features. Staying up to date with the latest additions related to rendering and performance (e.g., the app router feature in Next.js 13) is also an essential part of performance optimization.

The editorial team of the Toptal Engineering Blog extends its gratitude to Imad Hashmi for reviewing the code samples and other technical content presented in this article.
