
Collaboration on complex development projects almost always presents challenges. For traditional software projects, these challenges are well known, and over the years a number of approaches to addressing them have evolved. But as machine learning (ML) becomes an essential component of more and more systems, it poses a new set of challenges to development teams. Chief among these challenges is getting data scientists (who employ an experimental approach to system model development) and software developers (who rely on the discipline imposed by software engineering principles) to work harmoniously.

In this SEI blog post, which is adapted from a recently published paper to which I contributed, I highlight the findings of a study on which I teamed up with colleagues Nadia Nahar (who led this work as part of her PhD studies at Carnegie Mellon University), Christian Kästner (also from Carnegie Mellon University), and Shurui Zhou (of the University of Toronto). The study sought to identify collaboration challenges common to the development of ML-enabled systems. Through interviews conducted with numerous individuals engaged in the development of ML-enabled systems, we sought to answer our primary research question: What are the collaboration points and corresponding challenges between data scientists and engineers? We also examined the effect of various development environments on these projects. Based on this analysis, we developed preliminary recommendations for addressing the collaboration challenges reported by our interviewees. Our findings and recommendations informed the aforementioned paper, Collaboration Challenges in Building ML-Enabled Systems: Communication, Documentation, Engineering, and Process, which I’m proud to say received a Distinguished Paper Award at the 44th International Conference on Software Engineering (ICSE 2022).

Despite the attention ML-enabled systems have attracted, and the promise of these systems to exceed human-level cognition and spark great advances, moving a machine-learned model to a functional production system has proved very hard. The introduction of ML requires greater expertise and introduces more collaboration points when compared to traditional software development projects. While the engineering aspects of ML have received much attention, the adjoining human factors concerning the need for interdisciplinary collaboration have not.

The Current State of the Practice and Its Limits

Most software projects extend beyond the scope of a single developer, so collaboration is a must. Developers normally divide the work into various software system components, and team members work largely independently until all the system components are ready for integration. Consequently, the technical intersections of the software components themselves (that is, the component interfaces) largely determine the interaction and collaboration points among development team members.

Challenges to collaboration occur, however, when team members can’t easily and informally communicate or when the work requires interdisciplinary collaboration. Differences in experience, professional backgrounds, and expectations about the system can also pose challenges to effective collaboration in traditional top-down, modular development projects. To facilitate collaboration, communication, and negotiation around component interfaces, developers have adopted a range of strategies and often employ informal broadcast tools to keep everyone on the same page. Software lifecycle models, such as waterfall, spiral, and Agile, also help developers plan and design stable interfaces.

ML-enabled systems generally feature a foundation of traditional development into which ML component development is introduced. Developing and integrating these components into the larger system requires separating and coordinating data science and software engineering work to develop the learned models, negotiate the component interfaces, and plan for the system’s operation and evolution. The learned model could be a minor or major component of the overall system, and the system typically includes components for training and monitoring the model.

All of these steps mean that, compared to traditional systems, ML-enabled system development requires expertise in data science for model building and data management tasks. Software engineers not trained in data science who, nevertheless, take on model building tend to produce ineffective models. Conversely, data scientists tend to prefer to focus on modeling tasks to the exclusion of engineering work that might influence their models. The software engineering community has only recently begun to examine software engineering for ML-enabled systems, and much of this work has focused narrowly on problems such as testing models and ML algorithms, model deployment, and model fairness and robustness. Software engineering research on adopting a system-wide scope for ML-enabled systems has been limited.

Framing a Research Approach Around Real-World Experience in ML-Enabled System Development

Finding limited existing research on collaboration in ML-enabled system development, we adopted a qualitative strategy for our research based on four steps: (1) establishing scope and conducting a literature review, (2) interviewing professionals building ML-enabled systems, (3) triangulating interview findings with our literature review, and (4) validating findings with interviewees. Each of these steps is discussed below:

- Scoping and literature review: We examined the existing literature on software engineering for ML-enabled systems. In so doing, we coded sections of papers that either directly or implicitly addressed collaboration issues among team members with different skills or educational backgrounds. We analyzed the codes and derived the collaboration areas that informed our interview guidance.

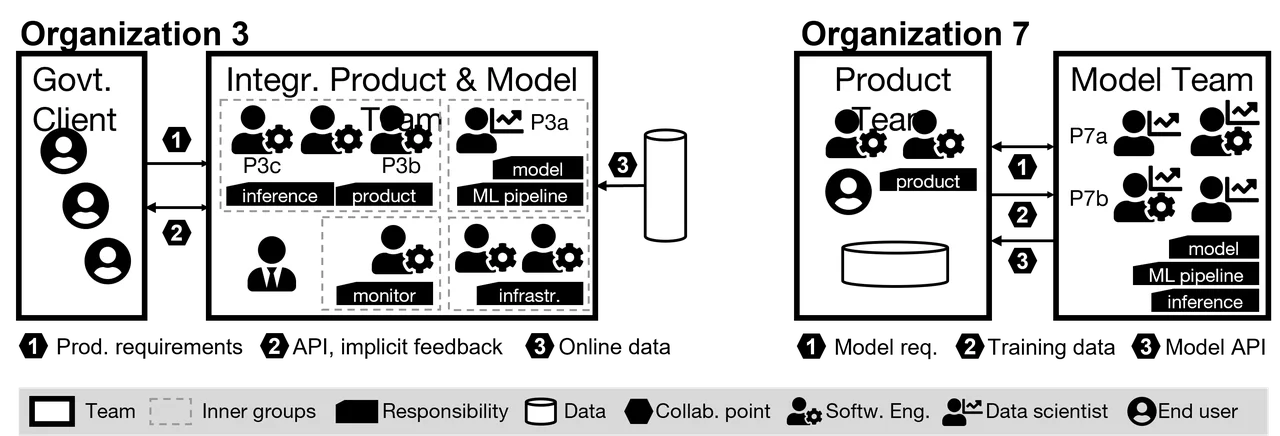

- Interviews: We conducted interviews with 45 developers of ML-enabled systems from 28 different organizations that have only recently adopted ML (see Table 1 for participant demographics). We transcribed the interviews, and then we created visualizations of organizational structure and responsibilities to map challenges to collaboration points (see Figure 1 for sample visualizations). We further analyzed the visualizations to determine whether we could associate collaboration problems with specific organizational structures.

- Triangulation with literature: We connected interview data with related discussions identified in our literature review, along with potential solutions. Out of the 300 papers we read, we identified 61 as possibly relevant and coded them using our codebook.

- Validity check: After creating a full draft of our study, we provided it to our interviewees along with supplementary material and questions prompting them to check for correctness, areas of agreement and disagreement, and any insights gained from reading the study.

Table 1: Participant and Company Demographics

Our interviews with professionals revealed that the number and types of teams developing ML-enabled systems, their composition, their responsibilities, the power dynamics at play, and the formality of their collaborations varied widely from organization to organization. Figure 1 presents a simplified representation of teams in two organizations. Team composition and responsibility differed for various artifacts (for instance, model, pipeline, data, and responsibility for the final product). We found that teams often have multiple responsibilities and interface with other teams at multiple collaboration points.

Figure 1: Structure of Two Interviewed Organizations

Some teams we examined have responsibility for both model and software development. In other cases, software and model development are handled by different teams. We discerned no clear global patterns across all the teams we studied. However, patterns did emerge when we narrowed the focus to three specific aspects of collaboration:

- requirements and planning

- training data

- product-model integration

Navigating the Tensions Between Product and Model Requirements

To begin, we found key differences in the order in which teams identify product and model requirements:

- Model first (13 of 28 organizations): These teams build the model first and then build the product around the model. The model shapes product requirements. Where model and product teams are different, the model team most often starts the development process.

- Product first (13 of 28 organizations): These teams start with product development and then develop a model to support it. Most often, the product already exists, and new ML development seeks to enhance the product’s capabilities. Model requirements are derived from product requirements, which often constrain model qualities.

- Parallel (2 of 28 organizations): The model and product teams work in parallel.

Regardless of which of these three development trajectories applied to any given organization, our interviews revealed a constant tension between product requirements and model requirements. Three key observations arose from these tensions:

- Product requirements require input from the model team. It’s hard to elicit product requirements without a solid understanding of ML capabilities, so the model team must be involved in the process early. Data scientists reported having to deal with unrealistic expectations about model capabilities, and they frequently had to educate clients and developers about ML techniques to correct these expectations. Where a product-first development trajectory is practiced, it was possible for the product team to ignore data requirements when negotiating product requirements. However, when requirements gathering is left to the model team, key product requirements, such as usability, might be ignored.

- Model development with unclear requirements is common. Despite an expectation that they will work independently, model teams rarely receive adequate requirements. Often, they engage in their work without a full understanding of the product their model is to support. This omission can be a thorny problem for teams that practice model-first development.

- Provided model requirements rarely go beyond accuracy and data security. Ignoring other important requirements, such as latency or scalability, has caused integration and operation problems. Fairness and explainability requirements are rarely considered.

Recommendations

Requirements and planning form a key collaboration point for product and model teams developing ML-enabled systems. Based on our interviews and literature review, we’ve proposed the following recommendations for this collaboration point:

- Involve data scientists early in the process.

- Consider adopting a parallel development trajectory for product and model teams.

- Conduct ML training sessions to educate clients and product teams.

- Adopt more formal requirements documentation for both model and product.
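
As a rough illustration of what more formal model requirements documentation might look like, the sketch below records requirements beyond accuracy in a small Python structure and checks measured values against them. The class name, field names, and thresholds are all hypothetical and are not taken from the study; they only echo qualities that interviewees said were often overlooked, such as latency and fairness.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRequirements:
    """Hypothetical requirements record agreed on by product and model teams."""
    min_accuracy: float        # lowest acceptable accuracy on an agreed test set
    max_latency_ms: float      # highest acceptable per-request latency
    max_fairness_gap: float    # largest acceptable accuracy gap between groups
    needs_explanations: bool   # whether predictions must come with explanations


def unmet_requirements(req, accuracy, latency_ms, fairness_gap, has_explanations):
    """Return the names of requirements that the measured values fail to meet."""
    failures = []
    if accuracy < req.min_accuracy:
        failures.append("min_accuracy")
    if latency_ms > req.max_latency_ms:
        failures.append("max_latency_ms")
    if fairness_gap > req.max_fairness_gap:
        failures.append("max_fairness_gap")
    if req.needs_explanations and not has_explanations:
        failures.append("needs_explanations")
    return failures


# Illustrative values only; real thresholds would come out of negotiation
# between the product and model teams.
requirements = ModelRequirements(
    min_accuracy=0.90,
    max_latency_ms=200.0,
    max_fairness_gap=0.05,
    needs_explanations=True,
)
print(unmet_requirements(requirements, accuracy=0.93, latency_ms=350.0,
                         fairness_gap=0.02, has_explanations=True))
```

In this example the model meets the accuracy target but is flagged on latency, the kind of gap that the interviews suggest tends to surface only at integration time when requirements stop at accuracy.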

Addressing Challenges Related to Training Data

Our study revealed that disagreements over training data represented the most common collaboration challenges. These disagreements often stem from the fact that the model team frequently doesn’t own, collect, or understand the data. We observed three organizational structures that influence the collaboration challenges related to training data:

- Provided data: The product team provides data to the model team. Coordination tends to be distant and formal, and the product team holds more power in negotiations over data.

- External data: The model team relies on an external entity for the data. The data usually comes from publicly available sources or from a third-party vendor. In the case of publicly available data, the model team has little negotiating power. It holds more negotiating power when hiring a third party to source the data.

- In-house data: Product, model, and data teams all exist within the same organization and make use of that organization’s internal data. In such cases, both product and model teams need to overcome negotiation challenges related to data use stemming from differing priorities, permissions, and data security requirements.

Many interviewees noted dissatisfaction with data quantity and quality. One common problem is that the product team often lacks knowledge about the quality and quantity of data needed. Other data problems common to the organizations we examined included the following:

- Provided and public data are often inadequate. Research has raised questions about the representativeness and trustworthiness of such data. Training skew is common: models that show promising results during development fail in production environments because real-world data differs from the provided training data.

- Data understanding and access to data experts often present bottlenecks. Data documentation is almost never adequate. Team members often collect information and keep track of the details in their heads. Model teams who receive data from product teams struggle to get help from the product team to understand the data. The same holds for data obtained from publicly available sources. Even internal data often suffers from evolving and poorly documented data sources.

- Ambiguity arises when hiring a data firm. Difficulty sometimes arises when a model team seeks buy-in from the product team on hiring an external data firm. Participants in our study noted communication vagueness and hidden assumptions as key challenges in the process. Expectations are communicated verbally, without clear documentation. Consequently, the data team often doesn’t have sufficient context to understand what data is needed.

- There is a need to handle evolving data. Models need to be regularly retrained with more data or adapted to changes in the environment. However, in cases where data is provided continuously, model teams struggle to ensure consistency over time, and most organizations lack the infrastructure to monitor data quality and quantity.

- In-house priorities and security concerns often obstruct data access. Often, in-house projects are local initiatives with at least some management buy-in but little buy-in from other teams focused on their own priorities. These other teams might question the business value of the project, which might not affect their area directly. When data is owned by a different team within the organization, security concerns over data sharing often arise.

Training data of sufficient quality and quantity is crucial for developing ML-enabled systems. Based on our interviews and literature review, we’ve proposed the following recommendations for this collaboration point:

- When planning, budget for data collection and access to domain experts (or even a dedicated data team).

- Adopt a formal contract that specifies data quality and quantity expectations.

- When working with a dedicated data team, make expectations very clear.

- Consider employing a data validation and monitoring infrastructure early in the project.
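
To make the idea of written data quality and quantity expectations more concrete, here is a minimal sketch of a validation check that a model team might run on each delivered batch. It assumes records arrive as Python dictionaries; the function name, parameters, field names, and thresholds are hypothetical and stand in for whatever the teams actually agree on.

```python
def validate_batch(rows, required_fields, min_rows, max_missing_fraction):
    """Check a batch of records (a list of dicts) against a hypothetical data contract.

    Returns a list of human-readable problems; an empty list means the batch passed.
    """
    problems = []
    # Quantity expectation: the batch must contain at least min_rows records.
    if len(rows) < min_rows:
        problems.append(f"expected at least {min_rows} rows, got {len(rows)}")
    # Quality expectation: each required field may be missing in at most
    # max_missing_fraction of the records.
    for field in required_fields:
        missing = sum(1 for row in rows if row.get(field) is None)
        fraction = missing / len(rows) if rows else 1.0
        if fraction > max_missing_fraction:
            problems.append(f"{field}: {fraction:.0%} of values missing")
    return problems


# Made-up records for illustration.
batch = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 45, "income": None},
    {"age": None, "income": 48000},
]
for problem in validate_batch(batch, ["age", "income"],
                              min_rows=3, max_missing_fraction=0.25):
    print(problem)
```

A check like this is far from a full validation infrastructure, but writing the thresholds down in code gives both teams one shared, testable statement of what "adequate data" means.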

Challenges Integrating the Product and Model in ML-Enabled Systems

At this collaboration point, data scientists and software engineers need to work closely together, frequently across multiple teams. Conflicts often occur at this juncture, however, stemming from unclear processes and responsibilities. Differing practices and expectations also create tensions, as does the way in which engineering responsibilities are assigned for model development and operation. The challenges faced at this collaboration point tended to fall into two broad categories: culture clashes among teams with differing responsibilities and quality assurance for model and project.

Interdisciplinary Collaboration and Cultural Clashes

We observed the following conflicts stemming from differences in software engineering and data science cultures, all of which were amplified by a lack of clarity about responsibilities and boundaries:

- Team responsibilities often don’t match capabilities and preferences. Data scientists expressed dissatisfaction when pressed to take on engineering tasks, while software engineers often had insufficient knowledge of models to effectively integrate them.

- Siloing data scientists fosters integration problems. Data scientists often work in isolation with weak requirements and a lack of understanding of the larger context.

- Technical jargon challenges communication. The differing terminology used in each field leads to ambiguity, misunderstanding, and faulty assumptions.

- Code quality, documentation, and versioning expectations differ widely. Software engineers asserted that data scientists don’t follow the same development practices or conform to the same quality standards when writing code.

Many conflicts we observed relate to boundaries of responsibility and differing expectations. To address these challenges, we proposed the following recommendations:

- Define processes, responsibilities, and boundaries more carefully.

- Document APIs at collaboration points.

- Recruit dedicated engineering support for model deployment.

- Don’t silo data scientists.

- Establish common terminology.

Interdisciplinary Collaboration and Quality Assurance for Model and Product

During development and integration, questions of responsibility for quality assurance often arise. We noted the following challenges:

- Goals for model adequacy are hard to establish. The model team almost always evaluates the accuracy of the model, but it has difficulty deciding whether the model is good enough owing to a lack of criteria.

- Confidence is limited without transparent model evaluation. Model teams don’t prioritize evaluation, so they often have no systematic evaluation strategy, which in turn leads to skepticism about the model from other teams.

- Responsibility for system testing is unclear. Teams often struggle with testing the entire system after model integration, with model teams frequently assuming no responsibility for product quality.

- Planning for online testing and monitoring is rare. Though necessary to monitor for training skew and data drift, such testing requires the coordination of teams responsible for product, model, and operation. Furthermore, many organizations don’t do online testing due to the lack of a standard process, automation, or even test awareness.
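
Monitoring for training skew and data drift can start very simply. The sketch below uses a basic mean-shift heuristic, chosen here only for illustration and not a method from the study: it measures how far the production mean of one numeric feature has moved from the training mean, in units of the training standard deviation. The function names, the threshold of 2.0, and the sample values are all assumptions.

```python
from statistics import mean, stdev


def mean_shift(training_values, production_values):
    """Shift of the production mean from the training mean, in training standard deviations."""
    spread = stdev(training_values)
    if spread == 0:
        raise ValueError("training values have no spread; shift is undefined")
    return abs(mean(production_values) - mean(training_values)) / spread


def has_drifted(training_values, production_values, threshold=2.0):
    """Flag a feature whose production mean moved more than `threshold` deviations."""
    return mean_shift(training_values, production_values) > threshold


# Made-up feature values for illustration.
training = [10.0, 12.0, 11.0, 13.0, 9.0]
stable = [11.0, 10.0, 12.0]     # production data that resembles training data
shifted = [20.0, 22.0, 21.0]    # production data that has moved away

print(has_drifted(training, stable))
print(has_drifted(training, shifted))
```

A production setup would likely use richer statistical tests and track many features over time, but even a check this small needs the product, model, and operations teams to agree on who runs it and who responds when it fires.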

Based on our interviews and the insights they provided, we developed the following recommendations to address challenges related to quality assurance:

- Prioritize and plan for quality assurance testing.

- The product team should assume responsibility for overall quality and system testing, but it should engage the model team in the creation of a monitoring and experimentation infrastructure.

- Plan for, budget, and assign structured feedback from the product engineering team to the model team.

- Evangelize the benefits of testing in production.

- Define clear quality requirements for model and product.

Conclusion: Four Areas for Improving Collaboration on ML-Enabled System Development

Data scientists and software engineers are not the first to realize that interdisciplinary collaboration is challenging, but facilitating such collaboration has not been the focus of organizations developing ML-enabled systems. Our observations indicate that challenges to collaboration on such systems fall along three collaboration points: requirements and project planning, training data, and product-model integration. This post has highlighted our specific findings in these areas, but we see four broad areas for improving collaboration in the development of ML-enabled systems:

Communication: To combat problems arising from miscommunication, we advocate ML literacy for software engineers and managers, and likewise software engineering literacy for data scientists.

Documentation: Practices for documenting model requirements, data expectations, and assured model qualities have yet to take root. Interface documentation already in use may provide a good starting point, but any approach must use a language understood by everyone involved in the development effort.

Engineering: Project managers should ensure sufficient engineering capabilities for both ML and non-ML components and foster product and operations thinking.

Process: The experimental, trial-and-error process of ML model development doesn’t naturally align with the traditional, more structured software process lifecycle. We advocate for further research on integrated process lifecycles for ML-enabled systems.
