
You’ve successfully run your A/B tests, meticulously analyzed the data, and made strategic decisions based on the results. However, a puzzling situation emerges: the results observed in these sophisticated A/B testing tools fail to align with real-world observations.

What gives? Welcome to the world of discrepancies between A/B testing tools and real-life observations. It’s a wild ride in which factors like statistical variance, sampling bias, contextual differences, technical glitches, timeframe misalignment, and even regression to the mean can throw off your carefully calculated results.

Buckle up as we dive into the nitty-gritty of why these discrepancies happen and what you can do about them.

Technical Issues

A/B testing tools rely on JavaScript code or other technical implementations to assign users to different variations. However, no matter how robust they are, these tools are not immune to technical issues that can affect the accuracy of their results. For instance, script errors in the implementation can prevent accurate tracking of user interactions or lead to faulty assignment of users to variations. These errors can disrupt the data collection process and introduce inconsistencies into the results. Additionally, compatibility issues with different web browsers, or differences in caching mechanisms, can affect the tool’s functionality, potentially leading to discrepancies between the observed results and the actual user experience.
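
One way to make assignment resilient to reloads, caching, and browser differences is to derive the variation deterministically from a stable user ID. The sketch below is written in Python rather than JavaScript, and it assumes a hash-based bucketing scheme that the article does not prescribe; the function name `assign_variation` and the experiment key are hypothetical.

```python
import hashlib


def assign_variation(user_id: str, experiment: str,
                     variations: tuple = ("control", "treatment")) -> str:
    """Deterministically map a user to a variation (hypothetical helper).

    Hashing the experiment name together with the user ID means the same
    user always receives the same variation for this experiment, regardless
    of cookies, caching, or which page triggered the assignment.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Take the first 8 hex digits (32 bits) as an integer and map it to a bucket.
    bucket = int(digest[:8], 16) % len(variations)
    return variations[bucket]


# The same inputs always produce the same variation.
print(assign_variation("user-42", "checkout-button-test"))
```

Because the result depends only on the inputs, a user who clears a cache or switches tabs is not silently moved to the other variation. Users who switch devices without a shared ID can still be reassigned.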

Furthermore, the impact of technical issues can vary depending on the complexity of the website or application being tested. Websites with complex user pathways or dynamic content are particularly susceptible to technical problems that can disrupt the A/B testing process. Third-party scripts or integrations can complicate matters further, because conflicts or errors in these components can interfere with accurate tracking of user behavior. These complexities underline the importance of thorough testing and quality assurance to ensure that A/B testing tools function correctly and to minimize discrepancies between the tools’ results and the actual performance of the variations in real-world conditions.
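
A widely used quality-assurance check, which the article does not name but which targets exactly this kind of broken assignment or tracking, is a sample ratio mismatch (SRM) test. If a test is configured for a 50/50 split but the recorded user counts deviate far more than chance allows, something in the pipeline is probably dropping or misassigning users. The sketch below uses a chi-square goodness-of-fit test for two groups with only the standard library. The counts are hypothetical, and the 0.001 threshold is a common convention rather than a fixed rule.

```python
import math


def srm_p_value(n_control: int, n_treatment: int, expected_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-group traffic split.

    expected_share is the intended fraction of users in the control group.
    With two groups the statistic has 1 degree of freedom, whose survival
    function is erfc(sqrt(x / 2)).
    """
    total = n_control + n_treatment
    expected_control = total * expected_share
    expected_treatment = total * (1 - expected_share)
    chi2 = ((n_control - expected_control) ** 2 / expected_control
            + (n_treatment - expected_treatment) ** 2 / expected_treatment)
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical counts: a 50/50 test that recorded 50,000 vs 48,500 users.
p = srm_p_value(50_000, 48_500)
if p < 0.001:  # strict threshold, a common convention for SRM alerts
    print(f"Possible sample ratio mismatch (p = {p:.2e}); check assignment and tracking.")
```

A mismatch does not tell you *what* broke, only that the results should not be trusted until the cause is found.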

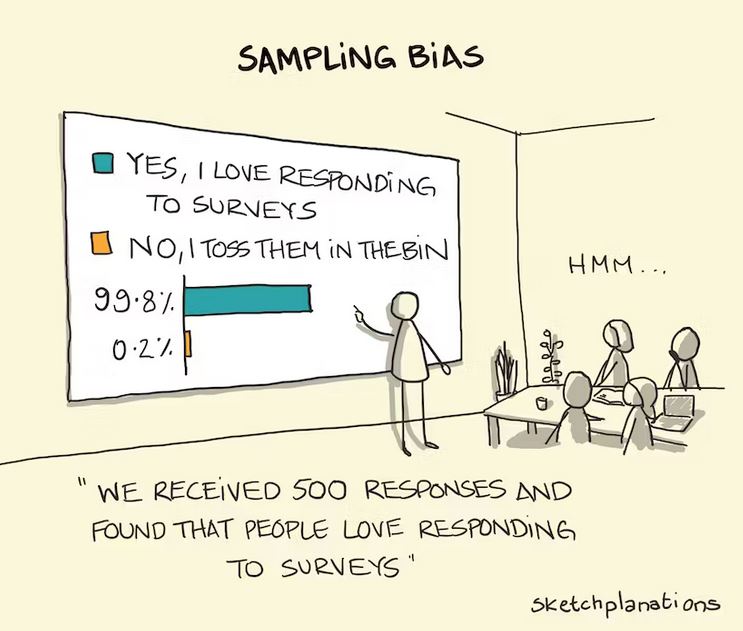

Sampling Bias

A/B testing tools typically allocate users to variations at random. However, precisely because assignment is random, certain user segments can end up disproportionately represented in one variation compared with another, especially when sample sizes are small. This can introduce bias into the results the tool reports. For example, if a particular variation happens to be shown more often to users who are already inclined to make a purchase, the conversion rate for that variation can be artificially inflated.
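
To see how much pure chance can skew segment composition, the following sketch simulates a 50/50 coin-flip assignment and measures the gap in the share of "ready-to-buy" users between the two groups. The 20% segment share and the sample sizes are hypothetical values chosen for illustration.

```python
import random


def segment_imbalance(n_users: int, segment_share: float, seed: int) -> float:
    """Randomly split users 50/50 and return the absolute gap in segment share.

    segment_share is the assumed fraction of users already inclined to buy;
    each user is independently assigned to A or B by a fair coin flip.
    """
    rng = random.Random(seed)
    counts = {"A": [0, 0], "B": [0, 0]}  # [segment members, total users]
    for _ in range(n_users):
        group = "A" if rng.random() < 0.5 else "B"
        in_segment = rng.random() < segment_share
        counts[group][0] += int(in_segment)
        counts[group][1] += 1
    share_a = counts["A"][0] / max(counts["A"][1], 1)
    share_b = counts["B"][0] / max(counts["B"][1], 1)
    return abs(share_a - share_b)


for n in (200, 2_000, 50_000):
    gaps = [segment_imbalance(n, 0.2, seed) for seed in range(20)]
    print(f"n={n:>6}: largest gap in buyer share across 20 runs = {max(gaps):.3f}")
```

The gap shrinks as the sample grows, which is one reason small tests are especially prone to this kind of sampling bias.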

Similarly, if a user segment is underrepresented in a variation, the tool may not capture that segment’s behavior adequately, leading to inaccurate conclusions about the variation’s effectiveness. This sampling bias can create a discrepancy between the results reported by A/B testing tools and the actual behavior of the broader user base.
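
One standard mitigation, swapped in here rather than taken from the article, is post-stratification. You compute the conversion rate within each segment and then weight those rates by each segment's share of the broader user base instead of its share of the test sample. The segment names, counts, and population shares below are hypothetical, and the sketch assumes every segment has at least one visitor.

```python
def poststratified_rate(segment_stats: dict, population_shares: dict) -> float:
    """Weight per-segment conversion rates by each segment's population share.

    segment_stats maps segment -> (conversions, visitors) observed in one
    variation; population_shares maps segment -> fraction of the overall
    user base (assumed known, e.g. from analytics) and should sum to 1.
    """
    rate = 0.0
    for segment, share in population_shares.items():
        conversions, visitors = segment_stats[segment]
        rate += share * conversions / visitors
    return rate


# Hypothetical data: returning buyers make up 37.5% of variation B's sample
# but only 20% of the overall user base.
variation_b = {"returning": (300, 1_500), "new": (100, 2_500)}
population = {"returning": 0.2, "new": 0.8}

raw_rate = sum(c for c, _ in variation_b.values()) / sum(v for _, v in variation_b.values())
adjusted = poststratified_rate(variation_b, population)
print(f"raw: {raw_rate:.3f}, reweighted to population mix: {adjusted:.3f}")
```

Here the raw rate of 0.100 drops to 0.072 once the over-represented returning buyers are weighted back to their true share.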

Timeframe Misalignment

A/B testing tools typically collect data over a specified period to analyze the results. However, the timing of data collection relative to the period in which the variation is actually live can introduce discrepancies. One common issue arises when the tool collects data for longer than the variation was live. In such cases, the tool may inadvertently include periods in which what users saw differed from the intended version, skewing the overall analysis. This can lead to misleading conclusions and a disconnect between the tool’s results and the actual impact of the variation during its intended timeframe.
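
If you export raw events, a simple guard is to keep only the events recorded while the variation was actually live before computing any metric. The sketch below assumes a hypothetical event format of `(timestamp, variation, converted)` tuples and hypothetical launch and pause dates.

```python
from datetime import datetime


def events_in_live_window(events, live_start: datetime, live_end: datetime):
    """Keep only events recorded while the variation was actually live.

    events is an iterable of (timestamp, variation, converted) tuples; the
    window is half-open: live_start <= timestamp < live_end.
    """
    return [e for e in events if live_start <= e[0] < live_end]


events = [
    (datetime(2024, 3, 1, 9), "B", False),   # before launch: excluded
    (datetime(2024, 3, 4, 12), "B", True),
    (datetime(2024, 3, 10, 18), "B", False),
    (datetime(2024, 3, 15, 8), "B", True),   # after the variation was paused: excluded
]
live = events_in_live_window(events, datetime(2024, 3, 4), datetime(2024, 3, 14))
print(len(live))  # 2
```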

Conversely, the tool’s data collection period can also fall short of capturing the full effect of the variation. If the timeframe is shorter than the time users need to fully engage with and respond to the variation, the results may not reflect its true performance. This can happen when users need a longer adaptation period to adjust their behavior, or when the variation’s impact unfolds gradually over time. In such cases, the tool may support premature conclusions about the variation’s effectiveness, leading to a discrepancy between the tool’s findings and the variation’s actual long-term performance in real-world conditions.

To mitigate timeframe misalignment, carefully plan the data collection period of your A/B testing tool and synchronize it with the live deployment of the variations. This means aligning the start and end dates of the testing phase with the actual timeframe in which the variations are active. Additionally, allowing for the lag before users adapt and respond to the changes gives a more complete picture of the variation’s true impact. By aligning timeframes properly, businesses can reduce the likelihood of discrepancies and make more accurate data-driven decisions based on their A/B test results.
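
One way to build that lag into the analysis is a fixed response window. A conversion counts only if it happens within `lag` of a user's first exposure, and users exposed too close to the end of the analysis are excluded because they have not had the full window yet. This is a sketch under assumed data structures: plain dictionaries keyed by hypothetical user IDs.

```python
from datetime import datetime, timedelta


def conversion_rate_with_lag(exposures: dict, conversions: dict,
                             live_start: datetime, live_end: datetime,
                             analysis_end: datetime, lag: timedelta) -> float:
    """Conversion rate that gives every counted user the same time to respond.

    exposures maps user_id -> first exposure time; conversions maps
    user_id -> conversion time (users who never converted are absent).
    Only users first exposed while the variation was live AND early enough
    to have a full `lag` window before analysis_end are counted.
    """
    eligible = [u for u, t in exposures.items()
                if live_start <= t < live_end and t + lag <= analysis_end]
    if not eligible:
        return float("nan")
    converted = sum(1 for u in eligible
                    if u in conversions
                    and exposures[u] <= conversions[u] <= exposures[u] + lag)
    return converted / len(eligible)


start, end = datetime(2024, 3, 4), datetime(2024, 3, 18)
exposures = {
    "u1": datetime(2024, 3, 5),
    "u2": datetime(2024, 3, 6),
    "u3": datetime(2024, 3, 17),  # too late for a full 7-day window: excluded
}
conversions = {"u1": datetime(2024, 3, 9), "u3": datetime(2024, 3, 18)}
rate = conversion_rate_with_lag(exposures, conversions, start, end,
                                analysis_end=datetime(2024, 3, 20),
                                lag=timedelta(days=7))
print(rate)  # 0.5
```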

Contextual Differences

A/B testing tools often operate within a controlled testing environment, where users are unaware of the test but may still behave differently than they would once the variation is set live in the real world. One significant contributor to the gap between testing-tool results and live performance is the novelty effect. When users encounter a new variation during a test, they may show heightened curiosity or engagement simply because it is different from what they are used to. This can artificially inflate the performance metrics recorded by the testing tool, as users interact with the variation more enthusiastically than they would in their regular browsing or purchasing habits.
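
A common way to check for a novelty effect, assumed here rather than described in the article, is to break the lift down by time since each user's first exposure. A lift that is large in the first week and shrinks afterwards suggests curiosity rather than a durable improvement. The record format and the synthetic numbers below are hypothetical.

```python
from collections import defaultdict


def lift_by_week(records):
    """Relative lift of treatment over control, grouped by weeks since exposure.

    records is an iterable of (days_since_first_exposure, variation, converted)
    observations, where variation is either "control" or "treatment".
    """
    # week -> variation -> [conversions, observations]
    stats = defaultdict(lambda: {"control": [0, 0], "treatment": [0, 0]})
    for days, variation, converted in records:
        cell = stats[days // 7][variation]
        cell[0] += int(converted)
        cell[1] += 1
    lifts = {}
    for week in sorted(stats):
        c_conv, c_n = stats[week]["control"]
        t_conv, t_n = stats[week]["treatment"]
        if c_n and t_n and c_conv:  # skip weeks where the lift is undefined
            lifts[week] = (t_conv / t_n) / (c_conv / c_n) - 1
    return lifts


# Synthetic data: treatment converts at 15% in week 0 but only 11% in week 1.
records = (
    [(2, "control", i < 10) for i in range(100)]
    + [(2, "treatment", i < 15) for i in range(100)]
    + [(9, "control", i < 10) for i in range(100)]
    + [(9, "treatment", i < 11) for i in range(100)]
)
for week, lift in lift_by_week(records).items():
    print(f"week {week}: lift {lift:+.0%}")
```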

Moreover, awareness of being part of an experiment can influence user behavior. Users who realize they are part of a test may exhibit conscious or unconscious biases that affect their responses. This phenomenon, known as the Hawthorne effect, refers to a change in behavior caused by the awareness of being observed or tested. Users might become more attentive, more self-conscious, or more inclined to behave in ways they perceive as desirable, potentially distorting the results the testing tool reports. This gap between the controlled testing environment and the real world can lead to differences in engagement and conversion rates once the variation is implemented outside the test. A user with a keen eye can sometimes spot subtle cues that reveal they are entering an A/B test.

Furthermore, the absence of real-world context in the testing environment can also affect user behavior and therefore the results. In the real world, users encounter variations in the context of their daily lives, which includes a wide range of external factors such as time constraints, competing distractions, or personal circumstances. These factors can significantly influence users’ decisions and actions. A/B testing tools, however, often isolate users from these influences and focus solely on the variation itself. As a result, the tool’s results may not capture how users would respond to the variation amid the complexity of their everyday experiences, which leads to differences in behavior and outcomes between the testing tool and the variation’s live performance.

Regression to the Mean

In A/B testing, it’s not uncommon to observe extreme results for a variation during the testing phase. This can happen because of random chance, because a specific segment of users is more responsive to the variation, or because of other factors that may not hold once the variation is exposed to a larger, more diverse audience over a longer period. The tendency of such extreme results to move back toward the average on later measurement is known as regression to the mean.

Regression to the mean shows up when extreme or outlier results observed during testing do not hold up in the long run. For example, if a variation shows a significant increase in conversion rate during the testing phase, the spike may have come from a specific group of users who were particularly receptive to the changes. When the variation is set live and exposed to a larger and more diverse audience, the initial spike is likely to diminish and performance to converge toward the average or baseline level. The live results then differ from what the testing tool initially indicated, because the extreme outcomes observed during testing were not indicative of the variation’s long-term impact.
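
The random-chance part of this can be shown with a small simulation. Many hypothetical tests are run in which the variation has exactly the same true conversion rate as the control, the tests with the biggest early lifts are picked as "winners", and those winners are then re-measured on fresh, larger traffic. All parameters are illustrative assumptions. The simulation deliberately leaves out the receptive-segment mechanism described above and isolates pure noise.

```python
import random


def simulate_regression_to_mean(n_tests=200, early_n=500, later_n=5_000,
                                true_rate=0.05, seed=1):
    """Select the tests with the biggest early lift and re-measure them.

    Every simulated variation has the SAME true conversion rate as its
    control (zero real effect), so any early "winner" is pure noise.
    Returns (average early lift of winners, average lift on fresh traffic).
    """
    rng = random.Random(seed)

    def observed_rate(n):
        # Conversion rate observed in a sample of n users.
        return sum(rng.random() < true_rate for _ in range(n)) / n

    # Early lift per test: treatment rate minus control rate on small samples.
    early = [observed_rate(early_n) - observed_rate(early_n) for _ in range(n_tests)]
    # Pick the top 10% of tests by early lift.
    top = sorted(range(n_tests), key=lambda i: early[i], reverse=True)[: n_tests // 10]
    early_avg = sum(early[i] for i in top) / len(top)
    # Re-measure the same "winners" on fresh, larger samples.
    later_avg = sum(observed_rate(later_n) - observed_rate(later_n) for _ in top) / len(top)
    return early_avg, later_avg


early_lift, later_lift = simulate_regression_to_mean()
print(f"average early lift of 'winners': {early_lift:+.3f}; on fresh traffic: {later_lift:+.3f}")
```

The winners' early lift is clearly positive, yet on fresh traffic it falls back toward zero, the true effect in this simulation.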

Understanding regression to the mean is essential when interpreting A/B testing results. It highlights the importance of not relying solely on initial test findings and of considering the variation’s performance over a longer period. By allowing for regression to the mean, businesses can avoid drawing erroneous conclusions or implementing changes based on temporary spikes or dips observed during the testing phase. It underscores the need to interpret A/B test results carefully and to take a comprehensive view of the variation’s performance in the real world.

Conclusion

So, there you have it. What A/B testing tools report doesn’t always align with the real-world results you experience. That’s not a flaw in your analysis skills or a sign that A/B testing is unreliable. It’s simply the nature of the beast.

When interpreting A/B testing results, don’t rely solely on the initial findings; consider the variation’s performance over an extended period. By doing so, businesses can avoid drawing erroneous conclusions or implementing changes based on temporary spikes or dips observed during the testing phase.

To navigate this reality gap, approach A/B test results with a critical eye. Be aware of the tools’ limitations and account for real-world context. Complement your findings with other research methods to gain a comprehensive understanding of the variation’s performance. With a holistic approach, you’ll be well equipped to make data-driven decisions that reflect the reality of your users.
