
Introduction

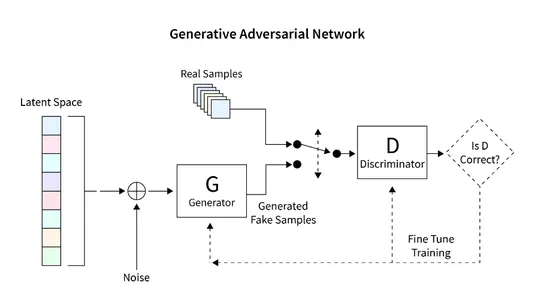

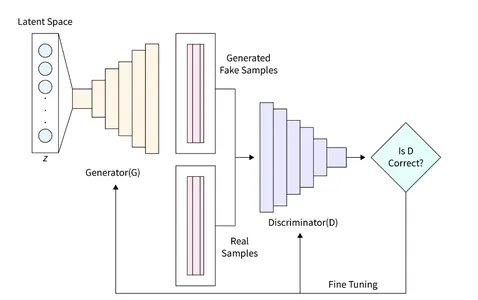

In this article, we explore the application of GANs in TensorFlow for generating unique renditions of handwritten digits. The GAN framework comprises two key components: the generator and the discriminator. The generator produces new images from random input, while the discriminator is designed to distinguish between real and fake images. Through GAN training, we obtain a set of images that closely resemble handwritten digits. The primary goal of this article is to outline the procedure for building and evaluating GANs using the MNIST dataset.

Learning Objectives

- This article provides a comprehensive introduction to Generative Adversarial Networks (GANs) and explores their applications in image generation.

- The main goal of this tutorial is to guide readers through the step-by-step process of building a GAN using the TensorFlow library. It covers training the GAN on the MNIST dataset to generate new images of handwritten digits.

- The article discusses the architecture and components of GANs, including generators and discriminators, to deepen readers' understanding of how they work.

- To aid learning, the article includes code examples that demonstrate tasks such as reading and preprocessing the MNIST dataset, building the GAN architecture, computing the loss functions, training the network, and evaluating the results.

- Finally, the article explores the expected outcome of GAN training: a set of images that strikingly resemble handwritten digits.

This article was published as a part of the Data Science Blogathon.

What are we building?

Generating novel images from existing image datasets is a prominent capability of a specialized class of models called Generative Adversarial Networks (GANs). GANs excel at unsupervised or semi-supervised image generation from diverse image datasets.

This article harnesses the image-generation capability of GANs to create handwritten digits. The methodology involves training the network on a database of handwritten digits. In this tutorial, we will build a rudimentary GAN using the TensorFlow library, train it on the MNIST dataset, and generate new images of handwritten digits.

How do we set this up?

The primary focus of this article is harnessing the image-generation capability of GANs. The procedure starts with loading and preprocessing the image database to prepare it for GAN training. Once the data is loaded, we build the GAN model and write the code needed for training and testing. The following sections give detailed instructions for implementing this functionality and generating new images from the MNIST database.

Model Building

The GAN model we aim to build consists of two important components:

- Generator: This component is responsible for producing new images.

- Discriminator: This component evaluates the generated images by estimating whether each one is real or generated.

The general architecture we will develop to generate images with a GAN is shown in the diagram below. The following sections briefly describe how to read the dataset, create the required architecture, compute the loss function, and train the network. Code is also provided to inspect the network and generate new images.

Reading the Dataset

The MNIST dataset holds great prominence in the field of computer vision and consists of a large collection of 28×28-pixel handwritten digits (60,000 training and 10,000 test images). Its grayscale, single-channel image format makes it ideal for our GAN implementation.

The following code snippet uses a built-in Keras function to load the MNIST dataset. Only the training images are kept; the labels and the test split are discarded. The pixel values are then scaled from [0, 255] to [-1, 1], and a channel axis is added so that each image has the three-dimensional (height, width, channels) shape expected by the Keras layers.

The shape of each image is therefore 28x28x1, where the last dimension represents the number of channels. Since MNIST consists of grayscale images, there is only a single channel.

In this particular case, we set the size of the latent space, denoted `z_size`, to 100. This value can be adjusted according to specific requirements or preferences.

```python
import matplotlib.pyplot as plt
import numpy as np
from keras.datasets import mnist
from keras.layers import (Input, Dense, Reshape, Flatten,
                          BatchNormalization, LeakyReLU)
from keras.models import Sequential, Model

num_rows = 28
num_cols = 28
num_channels = 1
input_shape = (num_rows, num_cols, num_channels)

# size of the latent (noise) vector fed to the generator
z_size = 100

# load the training images only; labels and the test split are not needed
(train_ims, _), (_, _) = mnist.load_data()

# scale pixels from [0, 255] to [-1, 1] to match the generator's tanh output
train_ims = train_ims / 127.5 - 1.

# add a channel axis: (60000, 28, 28) -> (60000, 28, 28, 1)
train_ims = np.expand_dims(train_ims, axis=3)

# note: the real/fake label arrays depend on batch_size and are created
# inside the training function further below
```
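To see what this preprocessing does to pixel values, here is a small NumPy-only sketch that applies the same scaling to a stand-in array (an assumption made so the example runs without downloading MNIST), together with the inverse mapping `x * 0.5 + 0.5` that the plotting code uses later to bring images back to [0, 1]. The helper names `to_tanh_range` and `to_unit_range` are hypothetical.

```python
import numpy as np

def to_tanh_range(images):
    """Map pixels in [0, 255] to [-1, 1], as the loading code does."""
    return images / 127.5 - 1.0

def to_unit_range(images):
    """Inverse used for display: map [-1, 1] back to [0, 1]."""
    return images * 0.5 + 0.5

# Stand-in batch of three 28x28 "images" filled with 0, 128 and 255.
stand_in = np.array([np.full((28, 28), v, dtype=np.uint8) for v in (0, 128, 255)])

scaled = np.expand_dims(to_tanh_range(stand_in), axis=3)
print(scaled.shape)          # (3, 28, 28, 1)
print(scaled[:, 0, 0, 0])    # -1.0, about 0.0039, and 1.0

restored = to_unit_range(scaled)
```

Black pixels end up at -1 and white pixels at +1, which is exactly the range a tanh output layer can produce.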

Defining the Generator

The Generator (G) plays a crucial role in GANs, as it is responsible for producing realistic images that can deceive the discriminator; it is the component that actually forms the images. Here we use a simple architecture for the Generator: a stack of fully connected (FC) layers with Leaky ReLU activations and batch normalization. The last layer, however, uses a tanh activation instead of LeakyReLU, so that the generated images lie in the same interval (-1, 1) as the preprocessed MNIST images.

```python
def build_generator():
    gen_model = Sequential()

    # three FC blocks: Dense -> LeakyReLU -> BatchNorm
    gen_model.add(Dense(256, input_dim=z_size))
    gen_model.add(LeakyReLU(alpha=0.2))
    gen_model.add(BatchNormalization(momentum=0.8))

    gen_model.add(Dense(512))
    gen_model.add(LeakyReLU(alpha=0.2))
    gen_model.add(BatchNormalization(momentum=0.8))

    gen_model.add(Dense(1024))
    gen_model.add(LeakyReLU(alpha=0.2))
    gen_model.add(BatchNormalization(momentum=0.8))

    # output layer: one tanh unit per pixel, reshaped to 28x28x1
    gen_model.add(Dense(int(np.prod(input_shape)), activation='tanh'))
    gen_model.add(Reshape(input_shape))

    # wrap the Sequential stack in a functional Model: noise in, image out
    gen_noise = Input(shape=(z_size,))
    gen_img = gen_model(gen_noise)
    return Model(gen_noise, gen_img)
```
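To make the layer sizes concrete, the following NumPy-only sketch pushes a batch of noise vectors through the same Dense sizes (100 -> 256 -> 512 -> 1024 -> 784) with LeakyReLU and a final tanh, then reshapes the result to 28x28x1. It only illustrates the shape flow: the weights are random and untrained, and BatchNormalization is omitted for brevity, so this is not the Keras model itself.

```python
import numpy as np

rng = np.random.default_rng(0)
Z_SIZE = 100
INPUT_SHAPE = (28, 28, 1)

def leaky_relu(x, alpha=0.2):
    return np.where(x > 0, x, alpha * x)

# Hypothetical untrained weights: one (W, b) pair per Dense layer.
layer_sizes = [Z_SIZE, 256, 512, 1024, int(np.prod(INPUT_SHAPE))]
weights = [
    (rng.normal(0, 0.05, (n_in, n_out)), np.zeros(n_out))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]

def generator_forward(z):
    h = z
    for W, b in weights[:-1]:
        h = leaky_relu(h @ W + b)   # Dense + LeakyReLU (BatchNorm omitted)
    W, b = weights[-1]
    out = np.tanh(h @ W + b)        # final Dense with tanh keeps values in [-1, 1]
    return out.reshape((-1,) + INPUT_SHAPE)

noise = rng.normal(0, 1, (16, Z_SIZE))
sample_imgs = generator_forward(noise)
print(sample_imgs.shape)   # (16, 28, 28, 1)
```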

Defining the Discriminator

In a Generative Adversarial Network (GAN), the Discriminator (D) performs the critical task of distinguishing real images from generated ones by estimating the probability that an input image is real. This can be viewed as a binary classification problem. To handle it, we can use a simple network of fully connected (FC) layers with Leaky ReLU activations; Dropout layers are a common addition, although the code below does not use them. The final layer of the Discriminator is an FC layer with a single unit followed by a sigmoid activation, which outputs the desired classification probability.

```python
def build_discriminator():
    disc_model = Sequential()

    # flatten the 28x28x1 image into a 784-dimensional vector
    disc_model.add(Flatten(input_shape=input_shape))
    disc_model.add(Dense(512))
    disc_model.add(LeakyReLU(alpha=0.2))
    disc_model.add(Dense(256))
    disc_model.add(LeakyReLU(alpha=0.2))

    # single sigmoid unit: probability that the input image is real
    disc_model.add(Dense(1, activation='sigmoid'))

    disc_img = Input(shape=input_shape)
    validity = disc_model(disc_img)
    return Model(disc_img, validity)
```
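As a sanity check on the two architectures, their parameter counts can be worked out by hand. A Dense layer with n_in inputs and n_out units has n_in·n_out weights plus n_out biases, and each Keras BatchNormalization layer keeps four values per unit (trainable gamma and beta, plus non-trainable moving mean and variance). If the arithmetic below is right, the totals should agree with what `summary()` reports for the two Keras models; the helper names are hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def batchnorm_params(n):
    """gamma and beta (trainable) plus moving mean and variance (non-trainable)."""
    return 4 * n

# Discriminator: Flatten(784) -> 512 -> 256 -> 1
disc_total = dense_params(784, 512) + dense_params(512, 256) + dense_params(256, 1)

# Generator: 100 -> 256 -> 512 -> 1024 -> 784, with BatchNorm after the first three
gen_dense = (dense_params(100, 256) + dense_params(256, 512)
             + dense_params(512, 1024) + dense_params(1024, 784))
gen_bn = batchnorm_params(256) + batchnorm_params(512) + batchnorm_params(1024)
gen_total = gen_dense + gen_bn

print(disc_total)   # 533505
print(gen_total)    # 1493520
```

Most of the generator's parameters sit in its last two Dense layers, which is typical for a fully connected GAN on 784-pixel images.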

Computing the Loss Function

To ensure a good image-generation process in a GAN, it is important to choose appropriate metrics to evaluate its performance. These metrics are defined by the loss function.

The discriminator is responsible for classifying each image as real or fake and outputs the probability that it is real. To achieve this, the Discriminator aims to maximize D(x) when presented with a real image x and to minimize D(G(z)) when presented with a generated image G(z).

The Generator, on the other hand, aims to fool the Discriminator by creating realistic images that it misclassifies as real. Mathematically, this means maximizing D(G(z)). In the original minimax formulation the Generator minimizes log(1 - D(G(z))), but early in training, when the Discriminator confidently rejects generated images, this term saturates and yields very small gradients. To avoid this, the Generator is instead trained to maximize log(D(G(z))).
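The saturation argument can be checked numerically. Writing the discriminator's output as D = sigmoid(a), where a is its pre-activation (logit), the gradient of log(1 - D) with respect to a is -sigmoid(a), while the gradient of -log(D) is -(1 - sigmoid(a)). The sketch below evaluates both for a few logits; the chosen values of a are arbitrary examples.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

# Discriminator logits for generated images; very negative a means D(G(z)) is near 0,
# i.e. the discriminator confidently rejects the fake (typical early in training).
a = np.array([-6.0, -3.0, 0.0])

# d/da of log(1 - sigmoid(a)): the original (saturating) generator objective
grad_saturating = -sigmoid(a)
# d/da of -log(sigmoid(a)): the non-saturating generator objective
grad_non_saturating = -(1.0 - sigmoid(a))

print(np.round(grad_saturating, 4))
print(np.round(grad_non_saturating, 4))
```

At a = -6 (D(G(z)) is roughly 0.0025) the saturating gradient has magnitude of only about 0.0025, while the non-saturating one is close to 1; both point in the same direction, and they coincide at a = 0.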

The overall cost function of the GAN can be expressed as a minimax game:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]$$
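In practice, the two expectations in V(D, G) are estimated by averaging over a mini-batch. The hypothetical helper `value_estimate` below does exactly that from arrays of discriminator outputs. As a reference point, the original GAN paper shows that when the generator's distribution matches the data distribution, the optimal discriminator outputs 1/2 everywhere and V = -log 4 (about -1.386).

```python
import numpy as np

def value_estimate(d_real, d_fake, eps=1e-12):
    """Mini-batch estimate of V(D, G).

    d_real: D(x) for a batch of real images x ~ p_data
    d_fake: D(G(z)) for a batch of generated images, z ~ p_z
    """
    d_real = np.clip(d_real, eps, 1 - eps)
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return np.mean(np.log(d_real)) + np.mean(np.log(1 - d_fake))

# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
v_confused = value_estimate(np.full(64, 0.5), np.full(64, 0.5))
# A discriminator that separates them well pushes V towards 0, its maximum.
v_sharp = value_estimate(np.full(64, 0.99), np.full(64, 0.01))

print(v_confused, v_sharp)
```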

Training a GAN this way requires a fine balance and can be seen as a match between two opponents: each side tries to outdo the other in the minimax game.

For the implementation of the Generator and Discriminator, we can use the binary cross-entropy loss.

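```python
# discriminator: compiled on its own so disc.train_on_batch() updates its weights
disc = build_discriminator()
disc.compile(loss="binary_crossentropy",
             optimizer="sgd",
             metrics=['accuracy'])

# generator: maps a noise vector z to an image
generator = build_generator()
z = Input(shape=(z_size,))
img = generator(z)

# freeze the discriminator inside the combined model; in Keras 2 / tf.keras the
# trainable flag is captured at compile() time, so `disc` (compiled above) still
# trains on its own, while `combined` only updates the generator
disc.trainable = False
validity = disc(img)

# combined model: z -> generator -> discriminator -> probability "real"
combined = Model(z, validity)
combined.compile(loss="binary_crossentropy", optimizer="sgd")
```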
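Keras's `binary_crossentropy` computes the mean of -[y·log(p) + (1 - y)·log(1 - p)], up to a small numerical clipping of p. The NumPy sketch below (the helper and the discriminator outputs are hypothetical) shows how the labels used in this tutorial recover the terms of the minimax objective: real images with label 1 give -log D(x), generated images with label 0 give -log(1 - D(G(z))), and training the combined model with label 1 on generated images gives -log D(G(z)), the non-saturating generator loss.

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over a batch."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))

d_real = np.array([0.9, 0.8, 0.7])   # hypothetical D(x) on real images
d_fake = np.array([0.2, 0.1, 0.4])   # hypothetical D(G(z)) on generated images
ones, zeros = np.ones(3), np.zeros(3)

# Discriminator step: real images labelled 1, generated images labelled 0,
# averaged with a factor 0.5 as in the training loop.
d_loss_real = binary_crossentropy(ones, d_real)
d_loss_fake = binary_crossentropy(zeros, d_fake)
d_loss = 0.5 * (d_loss_real + d_loss_fake)

# Generator step (combined model): generated images labelled 1 ("valid").
g_loss = binary_crossentropy(ones, d_fake)
print(d_loss, g_loss)
```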

Optimizing the Loss

To train the network, we set the two parts of the GAN against each other in this minimax game. Learning consists of optimizing the network weights with gradient descent. Specifically, we use Stochastic Gradient Descent (SGD), which updates the weights from small random mini-batches: each step is cheap, and the noise in the updates can help the optimizer avoid getting stuck in poor regions of the loss landscape.

Since the Discriminator and the Generator have different losses, a single loss function cannot optimize both at once. We therefore use separate loss functions: the discriminator is compiled on its own, and a combined model (the generator followed by a frozen discriminator) is compiled to train only the generator.

```python
def intialize_model():
    # discriminator with its own loss and optimizer
    disc = build_discriminator()
    disc.compile(loss="binary_crossentropy",
                 optimizer="sgd",
                 metrics=['accuracy'])

    generator = build_generator()
    z = Input(shape=(z_size,))
    img = generator(z)

    # freeze D inside the combined model so that only G is updated through it
    disc.trainable = False
    validity = disc(img)

    combined = Model(z, validity)
    combined.compile(loss="binary_crossentropy", optimizer="sgd")

    return disc, generator, combined
```
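Because each player optimizes its own loss, training alternates between them instead of descending a single objective. The following toy sketch is not part of the tutorial's GAN; it only illustrates why this requires a fine balance. Two players with scalar parameters x and y play min over x, max over y of f(x, y) = x·y, whose equilibrium is (0, 0). With simultaneous gradient steps the pair spirals away from the equilibrium; with alternating steps (one player moves, then the other responds to the new position, as the training loop does when it updates the discriminator and then the generator) the pair circles around it without converging.

```python
import numpy as np

def simultaneous(x, y, lr):
    # Both players step from the same old point.
    # x descends f (df/dx = y); y ascends f (df/dy = x).
    return x - lr * y, y + lr * x

def alternating(x, y, lr):
    # x moves first, then y responds to the updated x.
    x = x - lr * y
    y = y + lr * x
    return x, y

def run(step, steps=1000, lr=0.1):
    """Distance from the equilibrium (0, 0) after each update."""
    x, y = 1.0, 1.0
    norms = []
    for _ in range(steps):
        x, y = step(x, y, lr)
        norms.append(np.hypot(x, y))
    return np.array(norms)

sim = run(simultaneous)
alt = run(alternating)
print(sim[-1], alt.min(), alt.max())
```

Neither scheme reaches the equilibrium here, which mirrors the oscillating losses often seen when training real GANs.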

With everything specified, we can train the system and optimize the losses. The steps to train a GAN for image generation are as follows:

- Sample a random batch of real images and a batch of random noise vectors (one `z_size`-dimensional vector per image), and pass the noise through the generator to obtain generated images.

- Train the discriminator to tell the two apart: real images are labeled 1 (real) and generated images 0 (fake).

- Sample a fresh batch of random noise and feed it to the generator.

- Train the generator, through the combined model, to make the discriminator label its images as real.

- Repeat these steps for a fixed number of iterations or until the generated images are satisfactory.
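The arrays involved in one iteration of these steps can be sketched in plain NumPy. A random array stands in for the preprocessed MNIST images (an assumption so the sketch runs on its own); note that the noise is one `z_size`-dimensional vector per image, not an image-sized array.

```python
import numpy as np

rng = np.random.default_rng(42)
batch_size, z_size = 32, 100
train_ims = rng.uniform(-1, 1, size=(1000, 28, 28, 1))  # stand-in for MNIST

# Step 1: random batch of real images (indices drawn with replacement,
# like np.random.randint in the training loop) and one noise vector per image.
batch_index = rng.integers(0, train_ims.shape[0], batch_size)
real_batch = train_ims[batch_index]
noise = rng.normal(0, 1, (batch_size, z_size))

# Step 2: targets for the discriminator update.
valid = np.ones((batch_size, 1))   # label for real images
fake = np.zeros((batch_size, 1))   # label for generated images

# Step 3: fresh noise for the generator update, which is trained against
# the "valid" labels so it learns to make D output 1 on its samples.
gen_noise = rng.normal(0, 1, (batch_size, z_size))

print(real_batch.shape, noise.shape, valid.shape)
```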

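```python
def practice(epochs, batch_size=128, sample_interval=50):
    # load images (training split only)
    (train_ims, _), (_, _) = mnist.load_data()

    # preprocess: scale to [-1, 1] and add a channel axis
    train_ims = train_ims / 127.5 - 1.
    train_ims = np.expand_dims(train_ims, axis=3)

    # target labels: 1 for real ("valid") images, 0 for generated ("fake") ones
    valid = np.ones((batch_size, 1))
    fake = np.zeros((batch_size, 1))

    # training loop; each "epoch" here is a single mini-batch update
    for epoch in range(epochs):
        # sample a random batch of real images
        batch_index = np.random.randint(0, train_ims.shape[0], batch_size)
        imgs = train_ims[batch_index]

        # create noise and let the generator turn it into fake images
        noise = np.random.normal(0, 1, (batch_size, z_size))
        gen_imgs = gen.predict(noise)

        # train the discriminator: real images -> 1, generated images -> 0
        real_disc_loss = disc.train_on_batch(imgs, valid)
        fake_disc_loss = disc.train_on_batch(gen_imgs, fake)
        disc_loss_total = 0.5 * np.add(real_disc_loss, fake_disc_loss)

        # train the generator through the combined model (D frozen):
        # it is rewarded when D labels its images as real
        noise = np.random.normal(0, 1, (batch_size, z_size))
        g_loss = full_model.train_on_batch(noise, valid)

        # report progress and save sample outputs every few iterations
        if epoch % sample_interval == 0:
            print(f"{epoch}: D [loss, acc] {disc_loss_total}, G loss {g_loss}")
            one_batch(epoch)
```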
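Despite its name, the `epochs` argument counts mini-batch updates: every loop iteration draws one random batch. The small helpers below (hypothetical names) convert this into an approximate number of passes over the 60,000 MNIST training images, and count how many sample grids `one_batch` will save. For the settings used in the experiment below (10,000 iterations, batch size 32, sample interval 200), each image is seen about 5.3 times on average and 50 grids are saved.

```python
import math

def passes_over_data(iterations, batch_size, n_train):
    """Average number of times each training image is drawn."""
    return iterations * batch_size / n_train

def num_snapshots(iterations, sample_interval):
    """Number of iterations k in [0, iterations) with k % sample_interval == 0."""
    return math.ceil(iterations / sample_interval)

passes = passes_over_data(10_000, 32, 60_000)
grids = num_snapshots(10_000, 200)
print(round(passes, 2), grids)   # 5.33 50
```

Because batches are drawn with replacement, some images are seen more often than others; the figure is only an average.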

Producing Handwritten Digits

Once the Generator is defined, we can create a utility function that generates predictions for a set of images. The function samples random noise, feeds it to the generator, plots the resulting images in a grid, and saves the figure to a dedicated folder. We recommend calling it periodically, for example every 200 iterations, to monitor the network's progress. The implementation is below:

```python
import os

def one_batch(epoch):
    r, c = 5, 5
    noise_model = np.random.normal(0, 1, (r * c, z_size))
    gen_images = gen.predict(noise_model)

    # rescale images from [-1, 1] to [0, 1] for display
    gen_images = gen_images * 0.5 + 0.5

    fig, axs = plt.subplots(r, c)
    cnt = 0
    for i in range(r):
        for j in range(c):
            axs[i, j].imshow(gen_images[cnt, :, :, 0], cmap='gray')
            axs[i, j].axis('off')
            cnt += 1

    # savefig fails if the output folder does not exist yet
    os.makedirs("pictures", exist_ok=True)
    fig.savefig("pictures/%d.png" % epoch)
    plt.close(fig)
```
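An alternative to a 5x5 grid of subplots is to tile the images into a single array and save it as one image. The sketch below shows the reshaping involved; the helper `tile_grid` is hypothetical, and random arrays stand in for generator output. The resulting mosaic could then be written with, for example, `plt.imsave(path, mosaic, cmap='gray')`.

```python
import numpy as np

def tile_grid(images, rows, cols):
    """Arrange rows*cols images of shape (H, W, 1) into one (rows*H, cols*W) array."""
    n, h, w, _ = images.shape
    if n != rows * cols:
        raise ValueError(f"expected {rows * cols} images, got {n}")
    grid = images[..., 0].reshape(rows, cols, h, w)        # (rows, cols, H, W)
    return grid.transpose(0, 2, 1, 3).reshape(rows * h, cols * w)

rng = np.random.default_rng(1)
generated = rng.uniform(-1, 1, (25, 28, 28, 1))  # stand-in for gen.predict(...)
display = generated * 0.5 + 0.5                  # rescale to [0, 1]
mosaic = tile_grid(display, 5, 5)
print(mosaic.shape)   # (140, 140)
```

Image k lands in row k // cols and column k % cols of the mosaic, matching the order in which `one_batch` fills its subplots.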

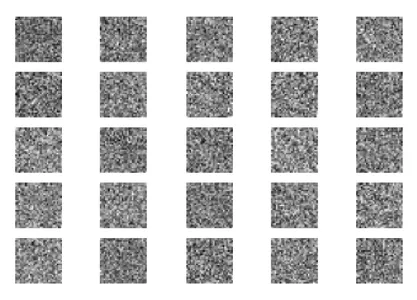

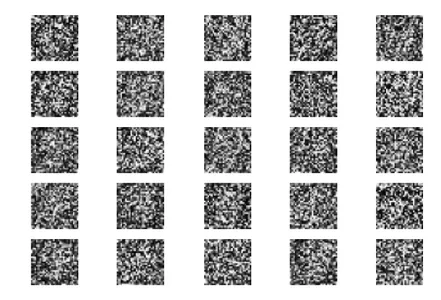

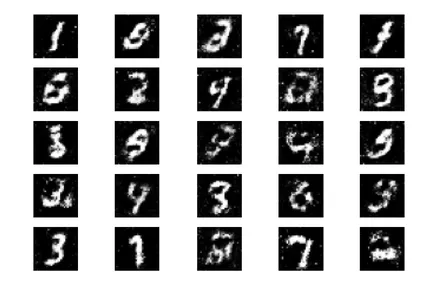

In our experiment, we trained the GAN for 10,000 epochs with a batch size of 32 (in this code, each "epoch" is a single mini-batch update). To track training progress, we saved the generated images every 200 epochs in a designated folder called "pictures".

```python
disc, gen, full_model = intialize_model()
practice(epochs=10000, batch_size=32, sample_interval=200)
```

Now, let's examine the GAN results at different stages: initialization, 400 epochs, 5,000 epochs, and the final result at 10,000 epochs.

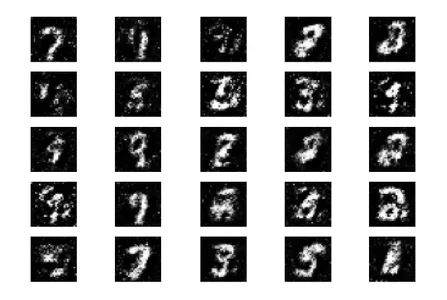

Initially, we start with random noise as the input to the Generator.

After 400 epochs of training, we can observe some progress, although the generated images still differ significantly from real digits.

After 5,000 epochs of training, the generated digits start to resemble those in the MNIST dataset.

After completing the full 10,000 epochs of training, we obtain the following outputs.

These generated images closely resemble the handwritten digits used to train the network. It is important to note that these images are not part of the training set; they are generated entirely by the network.

Next Steps

Now that we have achieved good results in image generation with our GAN, there are many ways to improve it further. Within the scope of this discussion, we could experiment with different parameters. Here are a few suggestions:

- Explore different values for the latent-space size `z_size` to see how it affects the quality and diversity of the generated images.

- Increase the number of training epochs beyond 10,000. Doubling or tripling the training time may reveal improved or degraded results.

- Try other datasets such as Fashion-MNIST or Moving MNIST. Fashion-MNIST has the same 28×28 grayscale format as MNIST, so the existing code only needs a different loader (for example `keras.datasets.fashion_mnist`); Moving MNIST consists of video sequences and would require larger changes.

- Consider experimenting with alternative architectures such as DCGAN, CycleGAN, and others. For DCGAN, modifying the generator and discriminator functions may be enough; CycleGAN additionally needs a second generator-discriminator pair and a cycle-consistency loss.

By implementing these modifications, we can further enhance the capabilities of GANs and explore new possibilities in image generation.


Conclusion

In summary, a GAN is a powerful machine learning model capable of generating new images based on existing datasets. In this tutorial, we have shown how to design and train a simple GAN using the TensorFlow library, with the MNIST dataset as an example.

Key Takeaways

- A GAN consists of two important components: a Generator, which is responsible for producing new images from random input, and a Discriminator, which aims to distinguish between real and fake images.

- Through the training process, we succeeded in creating a set of images that closely resemble handwritten digits, as shown in the example images.

- To optimize GAN performance, we define loss functions (here, binary cross-entropy) that reward the Discriminator for telling real and fake images apart and the Generator for fooling it. Once trained, the Generator turns fresh random noise into new, previously unseen images.

- Overall, GANs offer fascinating possibilities in image generation and have great potential for many applications in machine learning and computer vision.

Frequently Asked Questions

Q. What is a GAN?

A. A Generative Adversarial Network (GAN) is a machine learning framework that can generate new data with statistics similar to those of a given training set. GANs can be used for many kinds of data, including images, video, and text.

Q. What is a generative model?

A. A generative model is a machine learning model that learns the distribution of its training data and can then generate new samples from it. Such models are used for tasks such as image generation, text generation, and other forms of data synthesis.

Q. What is a loss function?

A. A loss function measures how far a model's outputs are from the desired targets; training adjusts the model's weights to minimize it. In a GAN, the discriminator's loss measures how well it separates real from generated samples, and the generator's loss measures how well it fools the discriminator. In this tutorial both are binary cross-entropy computed with real/fake labels, so no class labels or annotated images are needed.

Q. What is the difference between a CNN and a GAN?

A. CNNs (Convolutional Neural Networks) and GANs (Generative Adversarial Networks) are both deep learning architectures, but they serve different purposes. GANs are generative models that aim to produce new data resembling a given training set, whereas CNNs are most commonly used for classification and recognition tasks. The two are not mutually exclusive: convolutional networks can serve as the generator and discriminator of a GAN (as in DCGAN), and they can also be used inside other generative models such as variational autoencoders (VAEs). Still, CNNs are best known for discriminative training and are highly effective for image classification in computer vision.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.

